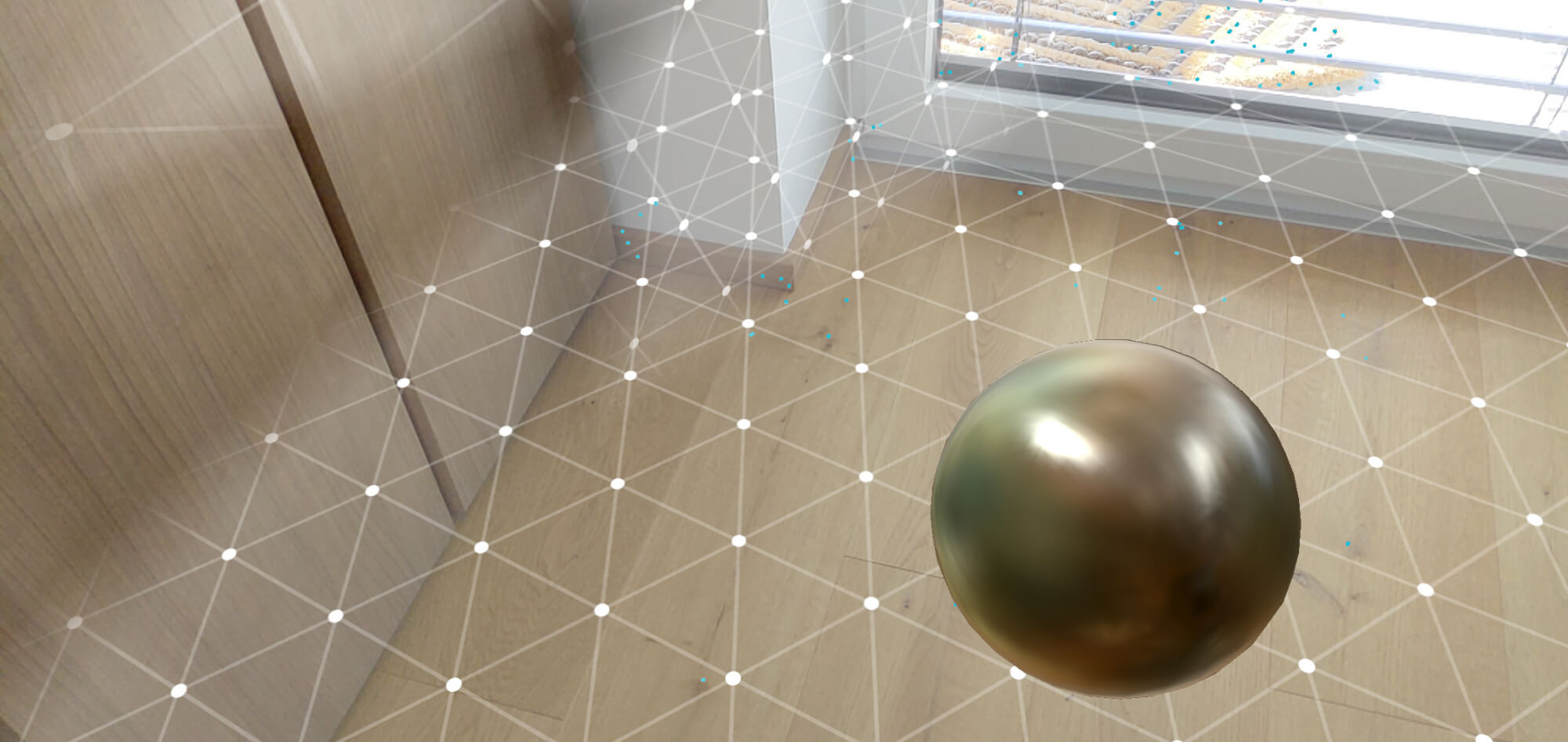

Merging virtual objects realistically with the real world in Augmented Reality poses a few challenges. The most important are:

- Realistic positioning, scale and rotation

- Lighting and shadows that match the real-world illumination

- Occlusion with real-world objects

The first works very well in today’s AR systems. The third, occlusion, works reasonably well on the Microsoft HoloLens, and it is coming to ARCore soon: a private preview of the ARCore Depth API is currently running, which is probably based on the research by Flynn et al.

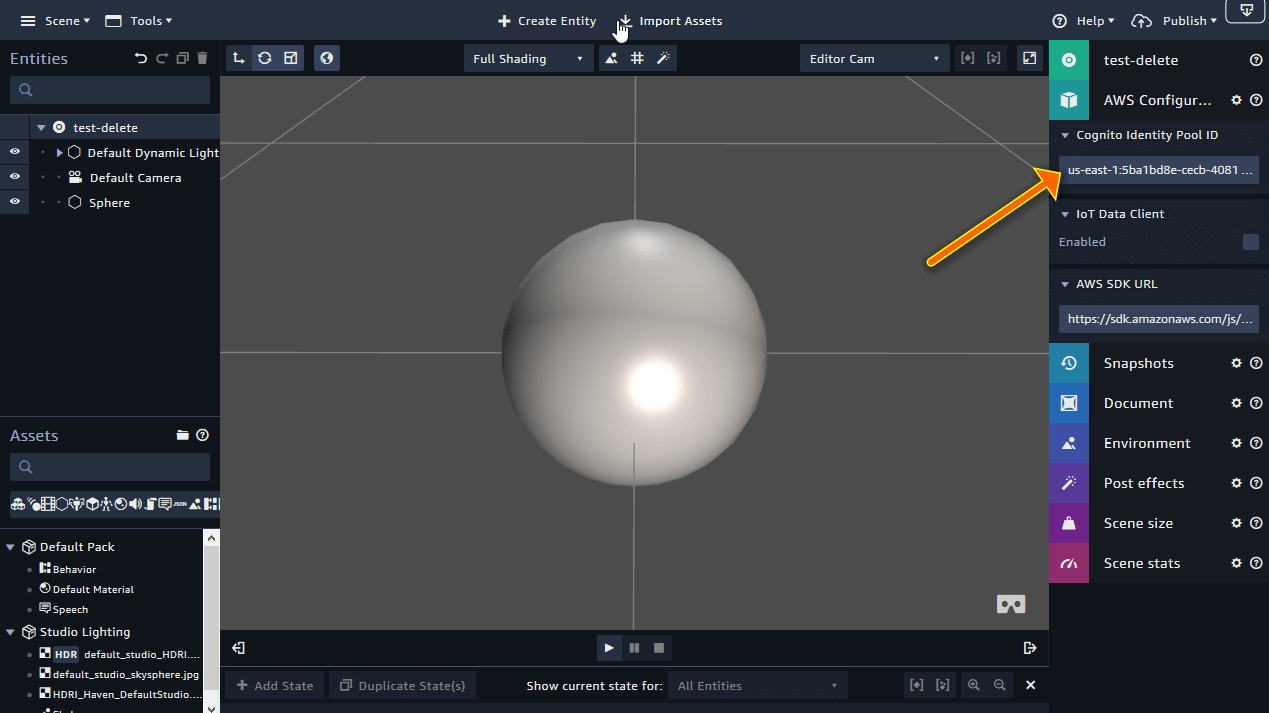

But what about the second item? Google has put a lot of effort into this recently. So, let’s look behind the scenes: how does ARCore estimate HDR (high dynamic range) lighting and reflections from the camera image?

Remember that ARCore needs to scale to a wide variety of smartphones. It therefore also has to work on phones that have only a single RGB camera, like the Google Pixel 2.
