A few days ago, Microsoft released .NET Standard 2.0, the new dream-come-true platform for libraries. Portable Class Libraries (PCL) have since been deprecated as well, so it’s about time to port my existing libraries.

Visual Studio 2017 version 15.3, with full support for .NET Standard 2.0, has also been released, as has the latest Windows 10 Fall Creators Update Preview SDK. So, let’s get started!

.NET Standard vs. UWP

However, it turns out that UWP doesn’t yet support .NET Standard 2.0. For the UWP platform, the latest supported .NET Standard version is still 1.4, which is considerably less powerful.

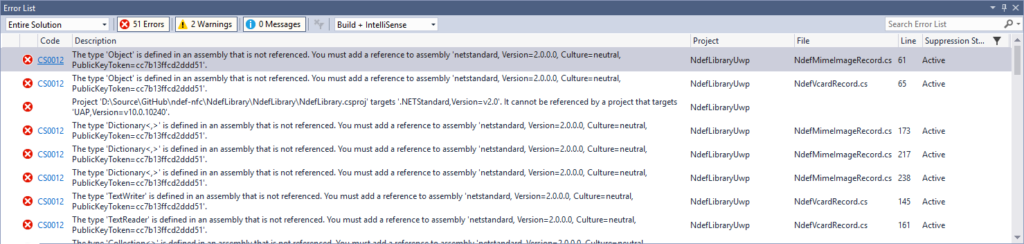

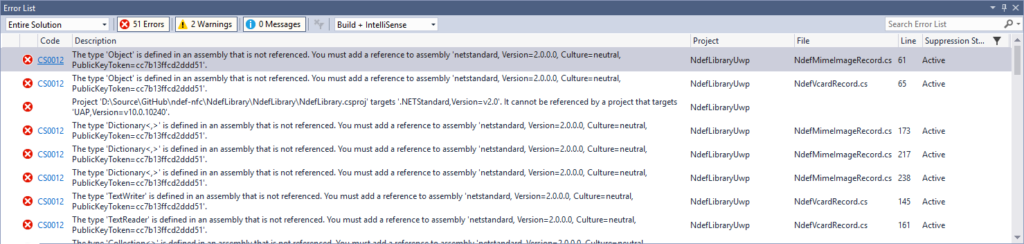

Attempting to reference a .NET Standard 2.0 library from a UWP app leads to several errors, including this one, which gives the cause away:

Project '...' targets '.NETStandard,Version=v2.0'. It cannot be referenced by a project that targets 'UAP,Version=v10.0.10240'.

Upgrading the UWP project to the latest preview SDK for the Windows 10 Fall Creators Update (build 16257) doesn’t change anything: neither the SDK nor the new Visual Studio 2017 version 15.3 can yet use .NET Standard 2.0 together with UWP. According to Microsoft, support will arrive with the next UWP version.
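
Until then, one possible workaround is to let the library target both versions, so that UWP apps can pick up the .NET Standard 1.4 build while other platforms get the 2.0 build. The following is only a minimal sketch of an SDK-style project file, and it assumes the library's code can actually be compiled against the smaller 1.4 API surface:

```xml
<Project Sdk="Microsoft.NET.Sdk">

  <PropertyGroup>
    <!-- Assumption: the library compiles against both API sets.
         netstandard1.4 keeps it referenceable from current UWP projects;
         netstandard2.0 is used by platforms that already support it. -->
    <TargetFrameworks>netstandard1.4;netstandard2.0</TargetFrameworks>
  </PropertyGroup>

</Project>
```

Note the plural `TargetFrameworks` element, which is what enables multi-targeting. Code that needs APIs only available in 2.0 would have to be wrapped in conditional compilation (for example `#if NETSTANDARD2_0`), which may or may not be worth the effort depending on the library.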