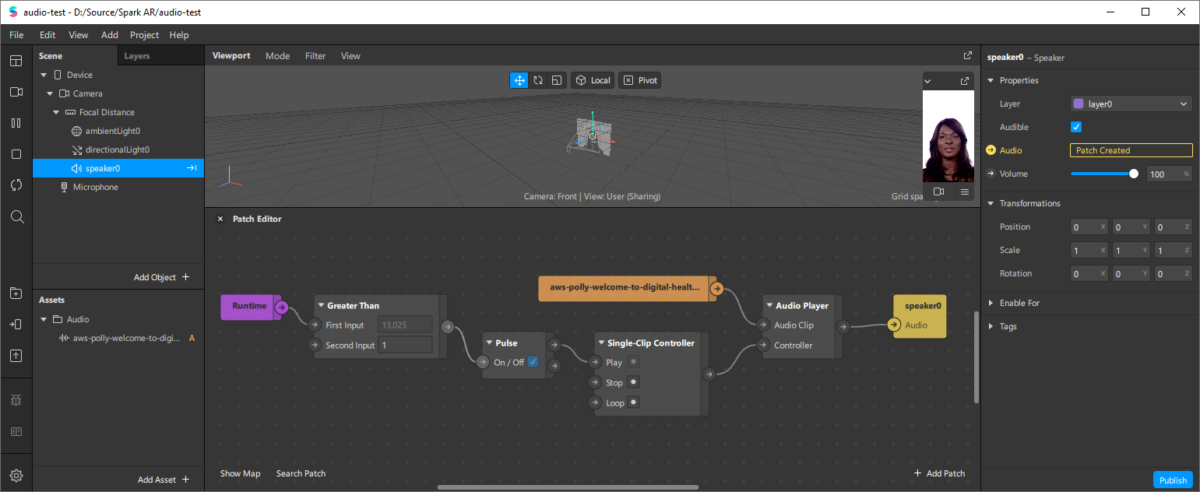

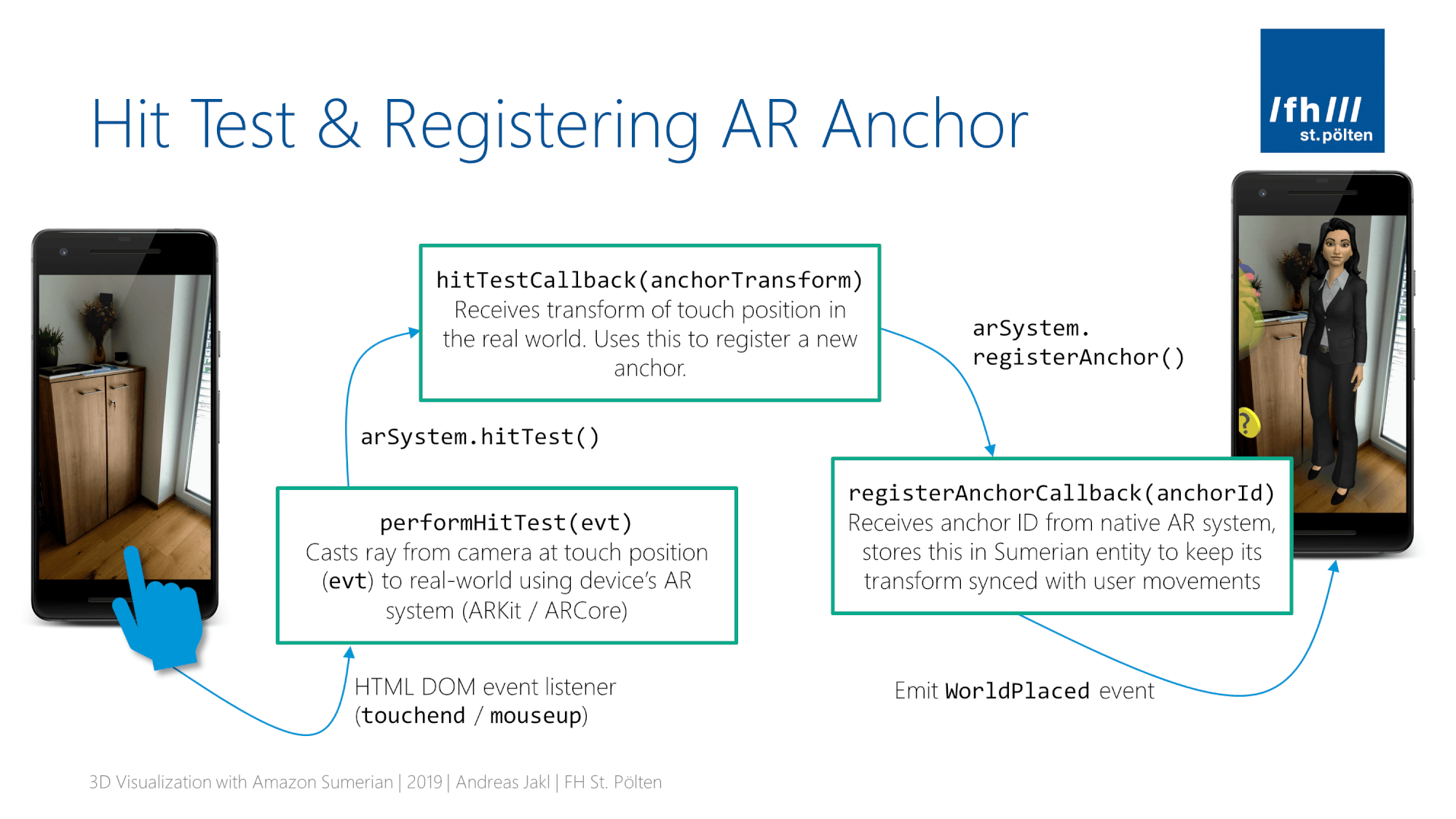

How to (re)-position the virtual objects in the real world in an Augmented Reality experience – while still having an interactive scene? Elegantly guide your users through the placement process.

The official AR tutorial from Amazon contains a simple script: by tapping anywhere in the scene, it instantly moves the objects to that position. However, for the Digital Healthcare Explained app, I needed a more flexible behavior:

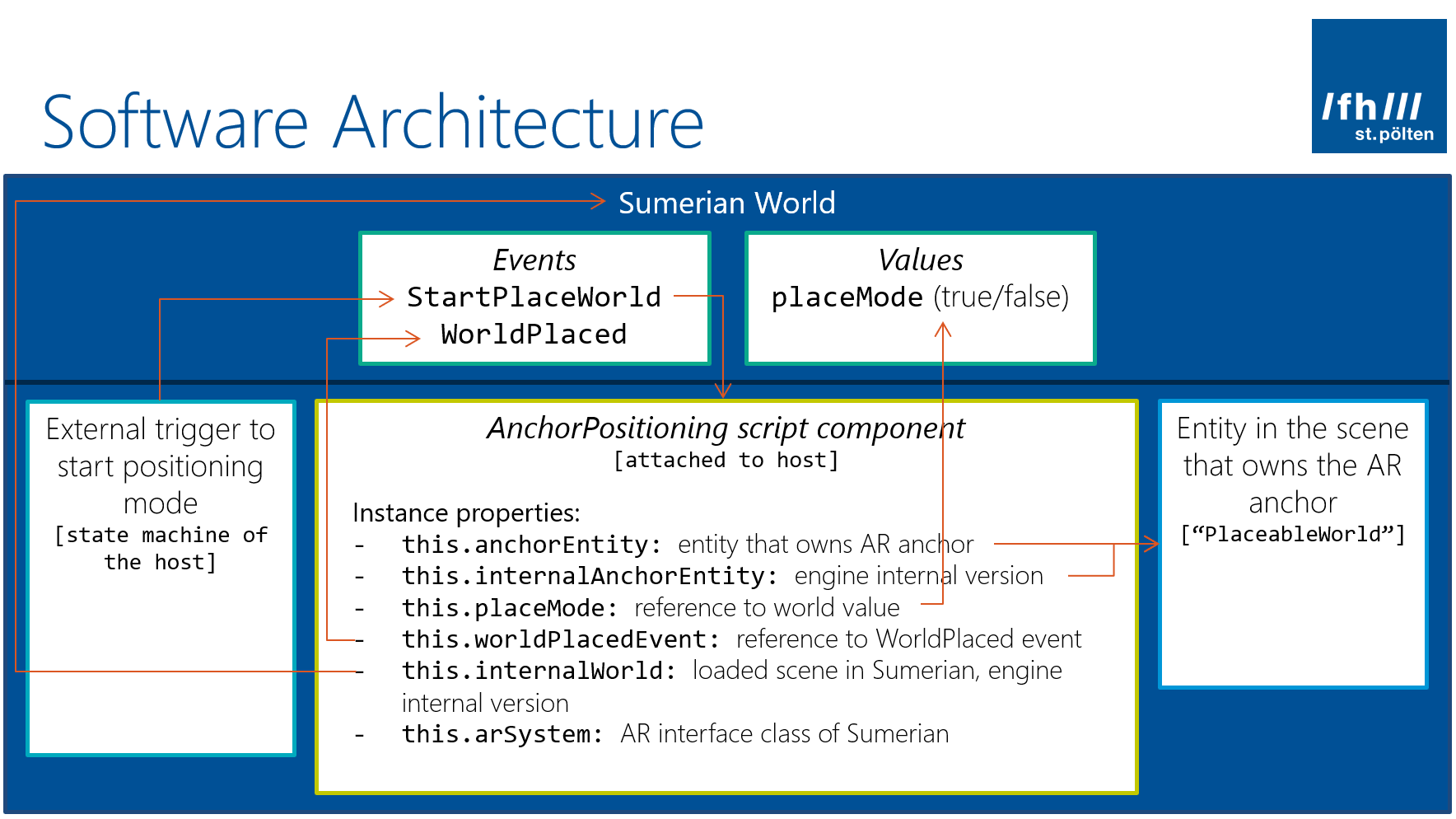

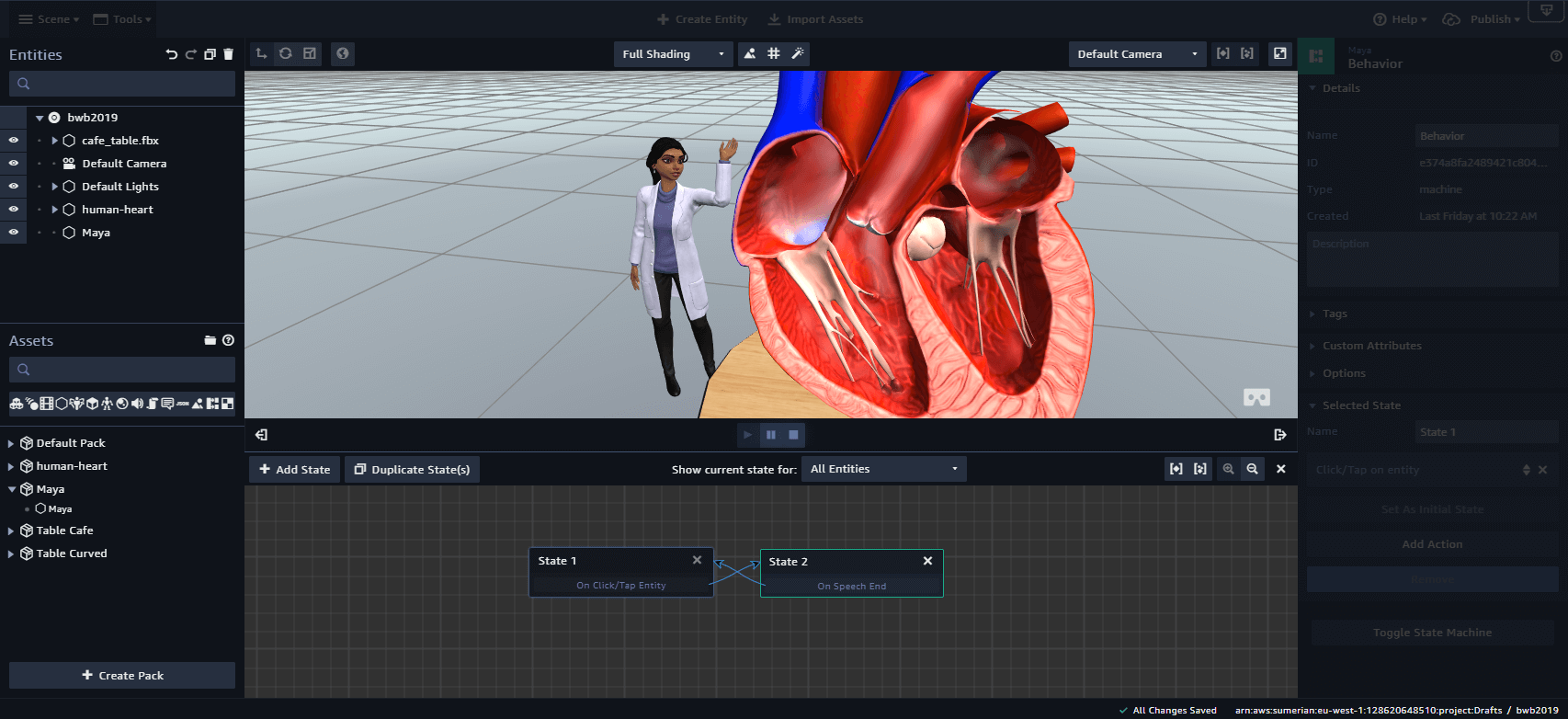

- Activate placement mode by tapping on a specific object in the 3D scene. In this case, I decided that tapping the host avatar triggers placement mode.

- The host then explains what to do: tapping on another surface moves the host and related objects. Guide users through the process. The Sumerian hosts are ideal to explain the process.

- The user taps on a real-world surface in the AR scene.

- Next, the scene contents move, the anchor updates and the host confirms.

New ES6 Based Scripting

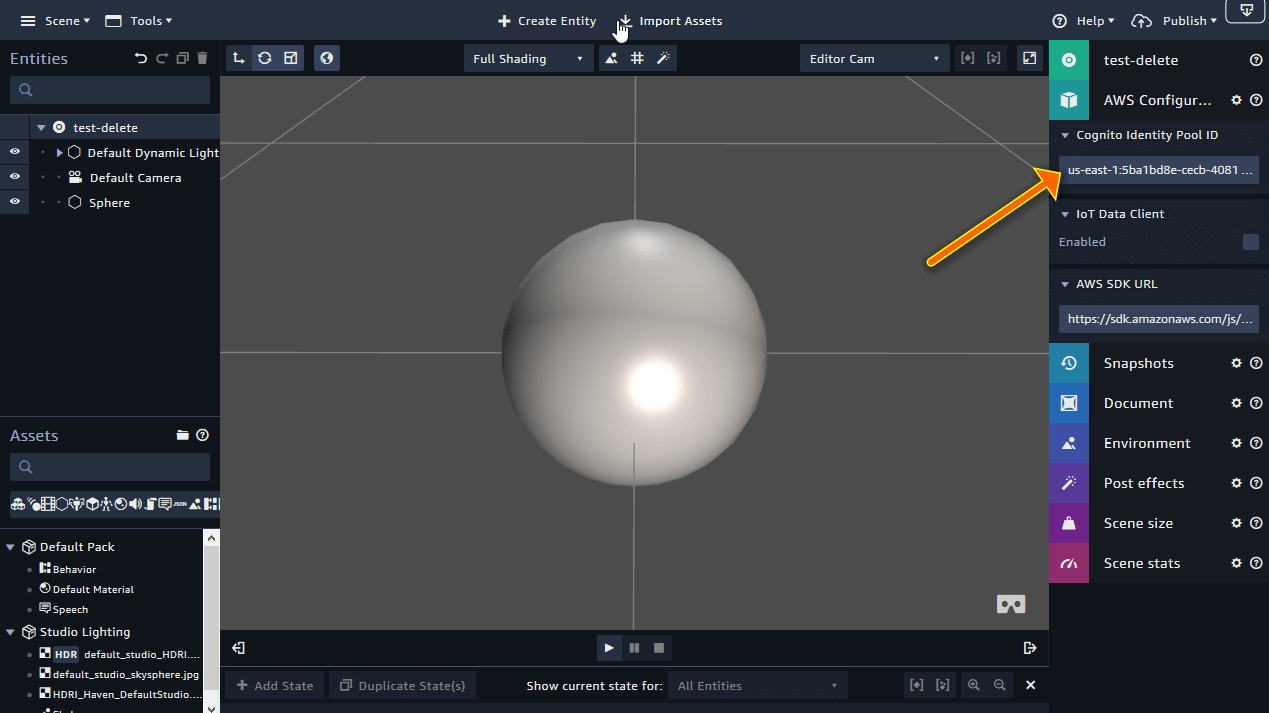

Additionally, Amazon Sumerian is evolving its scripting language. A major upgrade to ES6 is underway. It’s fully based on classes and fits better into the actions and state machines used in other places of Sumerian. The new APIs are still marked as “Preview”. However, the old APIs are already called “Legacy” or “Old Script Format”.

While documentation for the new Sumerian Engine APIs directly is already available, it’s very brief and doesn’t contain many examples. The official tutorials are still based on the legacy API.

I decided to re-write the script using the new APIs. It involves calling a lot of internal parts of Sumerian. Thus, it’s a lot more complex than all other examples for the new API currently out there. However, it’s interesting to dig more into the internals of how a modern, web-based AR environment works.