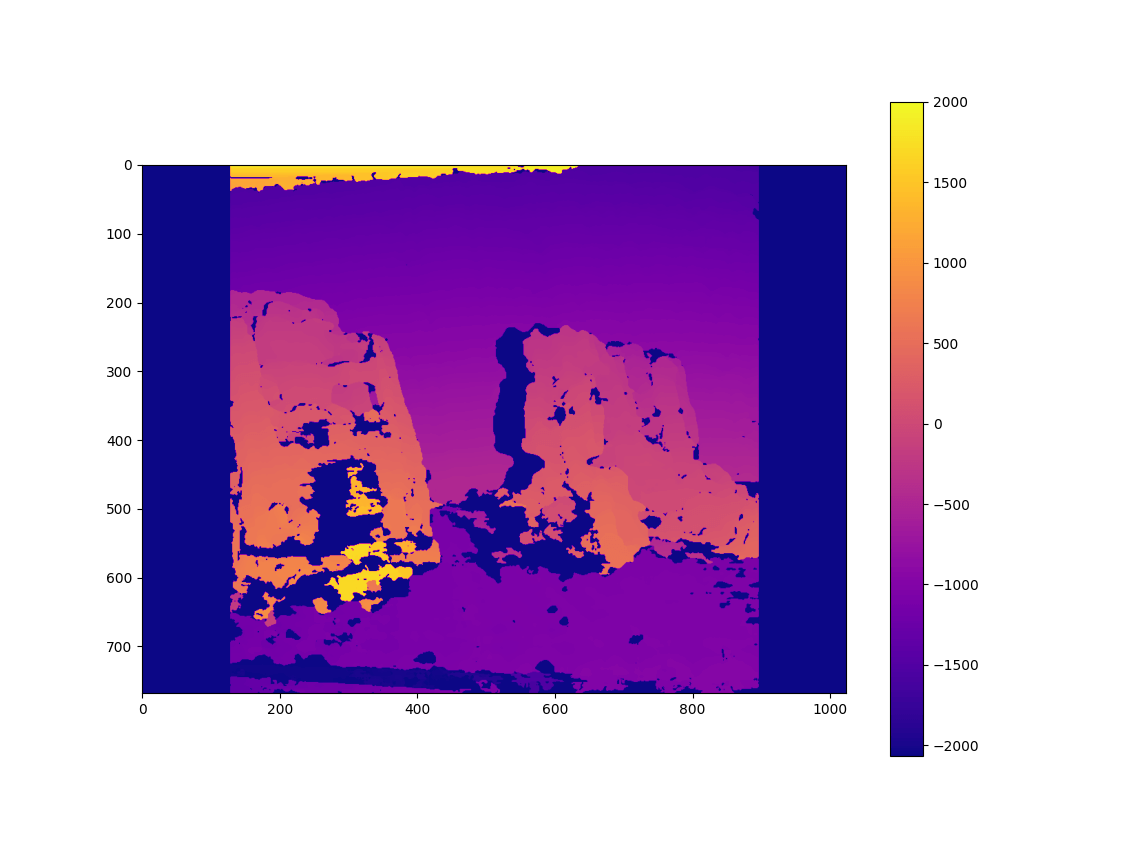

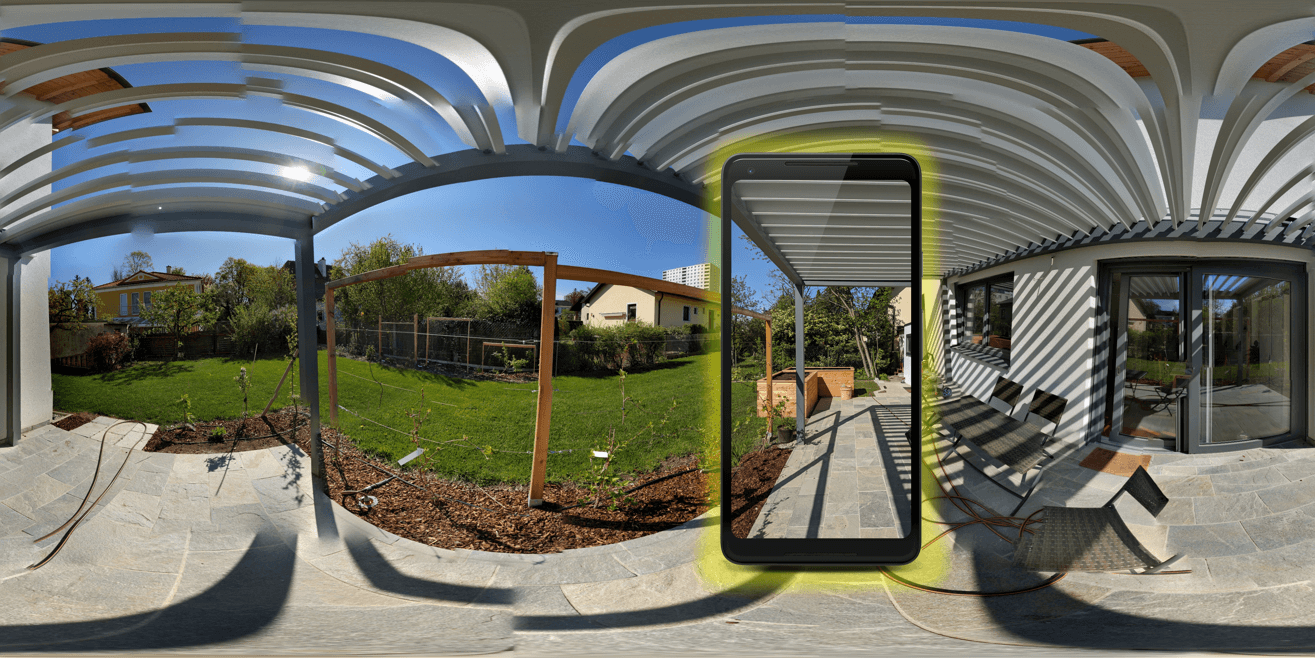

In this final part, let’s look at how to generate and use AR depth maps through Unity’s AR Foundation. In the previous part, we tested the ready-made example. Now it’s time to write the code ourselves.

In this case, I’m using Unity 2021.1 (Alpha) together with AR Foundation 4.1.1 to make sure we have the latest AR support & features in our app. But as written in the previous article, Unity 2020.2 should be sufficient.

I’ve tested the example on Android (Google Pixel 4 with Android 11 & ARCore), but it should also work on iOS with ARKit.

You can download the full, final AR Foundation Depth Map sample from GitHub. I’ve released the project under the MIT license.

Project Setup

First, configure the project for AR Foundation. I won’t go into too many details here, as the official documentation is quite good on that:

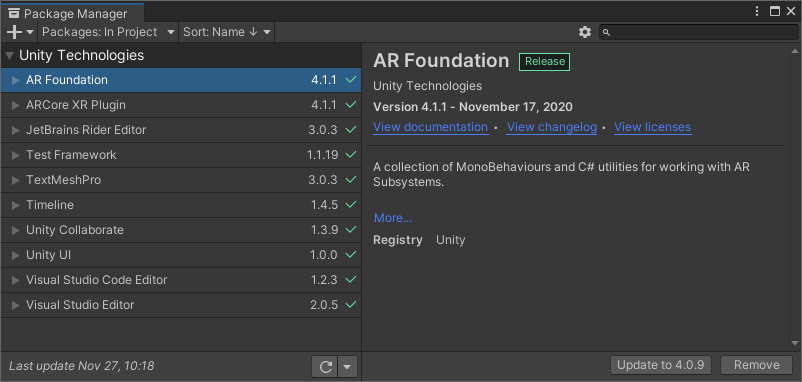

- XR Plug-in management: activate XR Plug-in Management in the project settings. Additionally, enable the ARCore plug-in provider. To check that everything was installed, open Window > Package Manager. You should see both AR Foundation and the ARCore XR Plugin, each with at least version 4.1.1.

- Android player settings: switch to the Android build platform, uncheck multithreaded rendering, remove Vulkan from the graphics APIs, set a package name of your own, and finally set the minimum API level to at least 24 (Android 7.0).

- Scene setup: add the required prefabs and GameObjects to your scene. Right-click in the hierarchy panel > XR > XR Session. Also add the XR Session Origin.
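If you prefer to script the Android player settings from the list above instead of clicking through the dialogs, an editor script along these lines could apply them. This is only a sketch and not part of the sample project: the class name `DepthSampleAndroidSetup`, the menu path and the package name `com.example.ardepthmap` are placeholders I made up, and the script assumes the `PlayerSettings` overloads available in Unity 2020.2 / 2021.1. Place the file in a folder named `Editor`.

```csharp
using UnityEditor;
using UnityEngine.Rendering;

// Hypothetical helper (not part of the sample project).
// Applies the Android player settings described in the list above.
public static class DepthSampleAndroidSetup
{
    // The menu path is an arbitrary choice for this sketch.
    [MenuItem("Tools/Depth Sample/Apply Android Player Settings")]
    public static void Apply()
    {
        const BuildTargetGroup targetGroup = BuildTargetGroup.Android;

        // Switch to the Android build platform.
        EditorUserBuildSettings.SwitchActiveBuildTarget(targetGroup, BuildTarget.Android);

        // Uncheck multithreaded rendering.
        PlayerSettings.SetMobileMTRendering(targetGroup, false);

        // Remove Vulkan: turn off the automatic API list and keep only OpenGL ES 3.
        PlayerSettings.SetUseDefaultGraphicsAPIs(BuildTarget.Android, false);
        PlayerSettings.SetGraphicsAPIs(BuildTarget.Android,
            new[] { GraphicsDeviceType.OpenGLES3 });

        // Placeholder package name; replace it with your own identifier.
        PlayerSettings.SetApplicationIdentifier(targetGroup, "com.example.ardepthmap");

        // Minimum API level 24 (Android 7.0).
        PlayerSettings.Android.minSdkVersion = AndroidSdkVersions.AndroidApiLevel24;
    }
}
```

After Unity has compiled the script, the menu entry appears in the editor's menu bar. The XR Plug-in Management and scene setup steps are not covered by this sketch and still need to be done in the editor.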

By default, the AR depth map is always returned in Landscape Right orientation, no matter what screen orientation your app is currently in. While we could of course adapt the map to the current screen rotation, we want to keep this example focused on the depth map. Therefore, simply lock the screen orientation through Project Settings > Player > Resolution and Presentation > Orientation > Default Orientation: Landscape Right.
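As an alternative to the project setting, the orientation could also be locked at runtime. The following is a minimal sketch that assumes you attach the script to any GameObject in the scene; the class name `LockLandscapeRight` is my own placeholder and not part of the sample project.

```csharp
using UnityEngine;

// Hypothetical helper (not part of the sample project).
// Forces the screen into Landscape Right so that it matches
// the orientation of the depth map described above.
public class LockLandscapeRight : MonoBehaviour
{
    private void Awake()
    {
        // Setting a fixed orientation also disables auto-rotation.
        Screen.orientation = ScreenOrientation.LandscapeRight;
    }
}
```

The project setting remains the simpler choice for this example, as it needs no extra component and already takes effect during app startup.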
