Updated: December 12th, 2022 – changed info from Microsoft LUIS to Microsoft Azure Cognitive Services / Conversational Language Understanding.

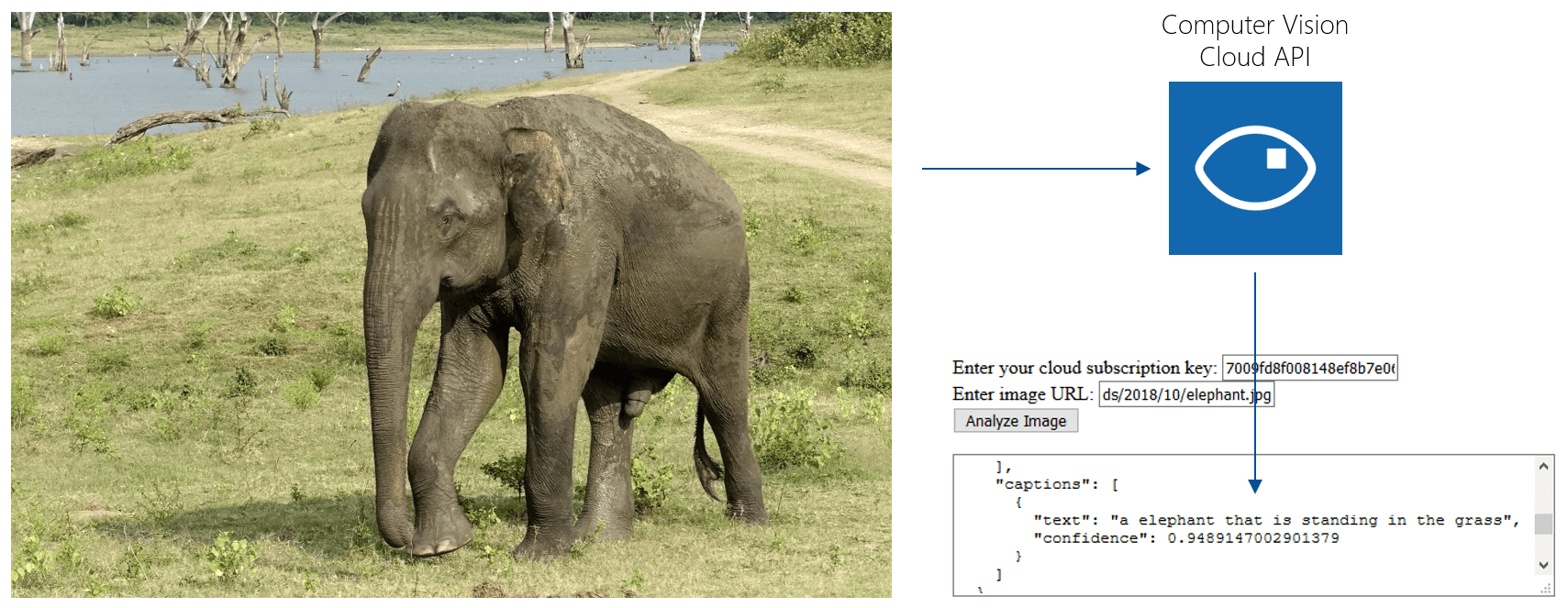

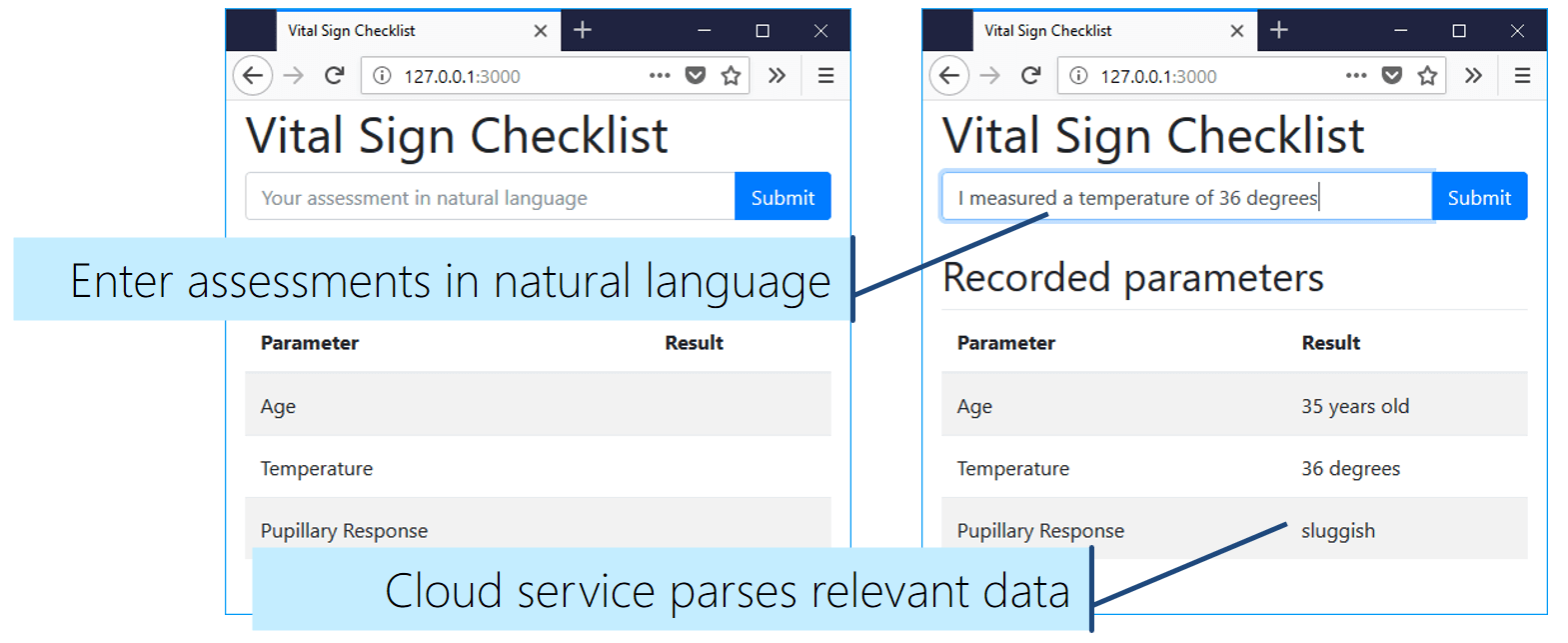

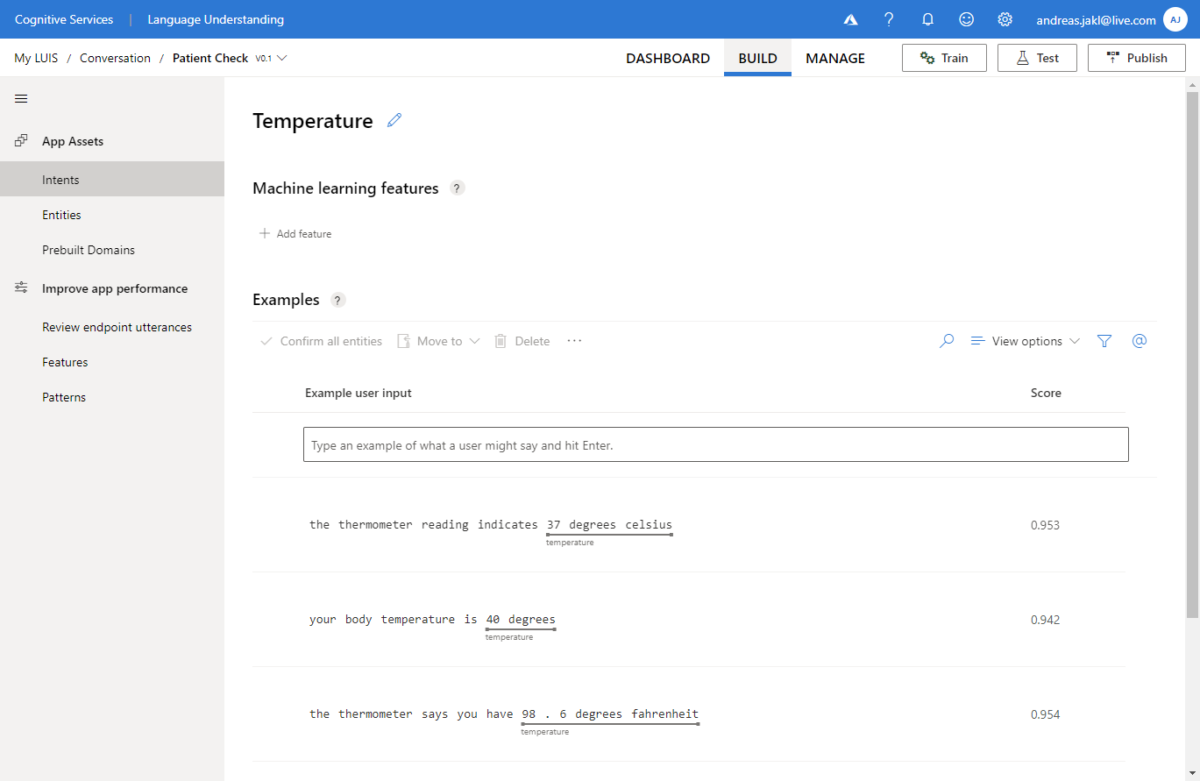

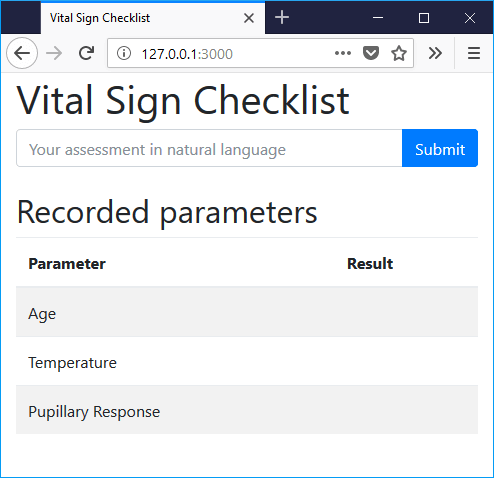

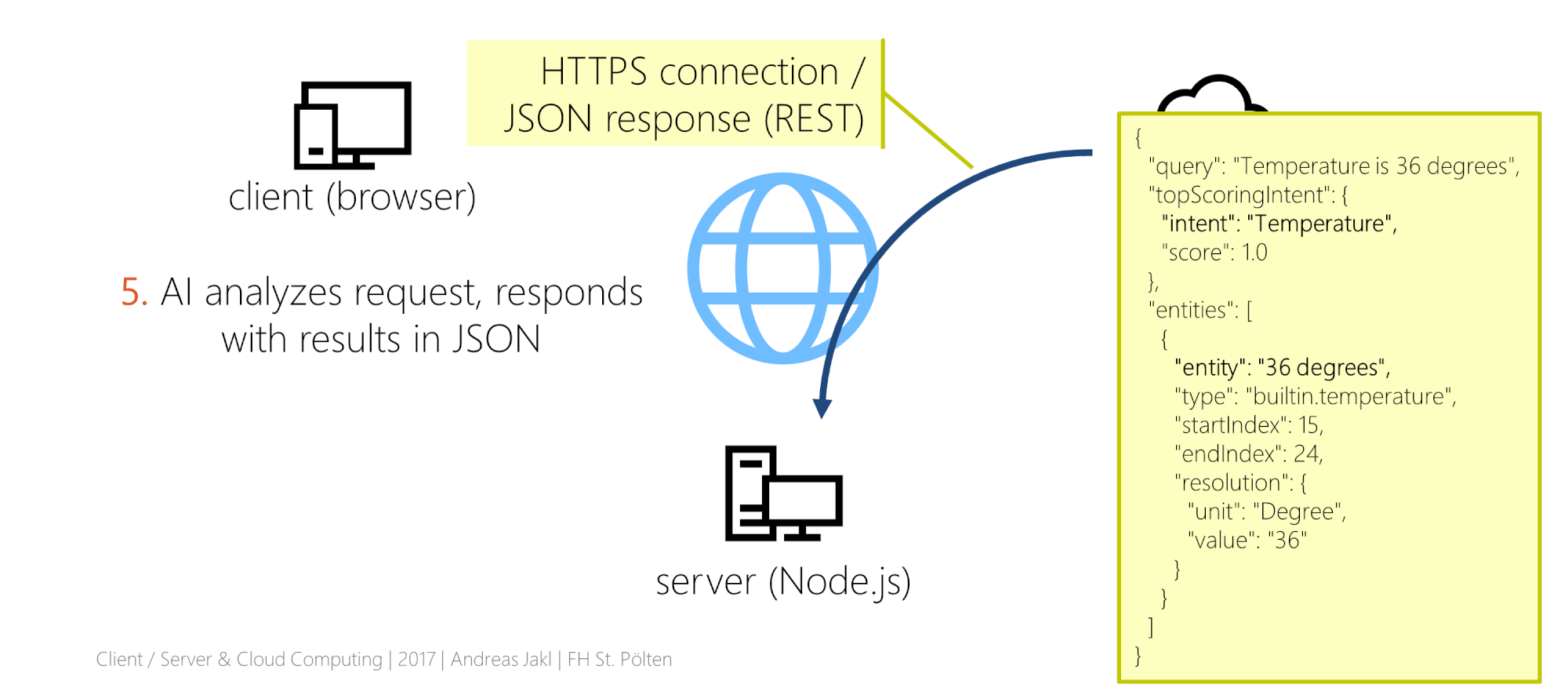

The vision: automatic checklists, filled out simply by listening to users describe what they observe. The sample app uses a lightweight architecture: HTML5, Node.js, and the Conversational Language Understanding service from Microsoft Azure Cognitive Services in the cloud.
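To make the idea of a self-filling checklist more concrete, here is a minimal sketch of how one recognized utterance could update a checklist. It is not the sample app's code. The checklist item names, the simplified `{ topIntent, entities }` prediction shape, and the rule that the intent name equals the checklist key are all hypothetical assumptions made for illustration.

```javascript
// Hypothetical checklist for one vital-sign round; the item names are
// illustrative, not taken from the sample app.
function createChecklist() {
  return { temperature: null, pulse: null, bloodPressure: null };
}

// Fills one checklist item from a simplified prediction of the form
// { topIntent, entities: [{ category, text }] }.
// Assumption: the intent name equals the checklist key and the first
// entity carries the spoken value.
function applyPrediction(checklist, prediction) {
  const item = prediction.topIntent;
  // Object.hasOwn ignores inherited keys such as "toString".
  if (!Object.hasOwn(checklist, item)) {
    return false; // unknown item: leave the checklist unchanged
  }
  const [entity] = prediction.entities;
  if (!entity) {
    return false; // intent recognized, but no value was spoken
  }
  checklist[item] = entity.text;
  return true;
}

// Example: the user says "pulse is 72".
const checklist = createChecklist();
applyPrediction(checklist, {
  topIntent: "pulse",
  entities: [{ category: "number", text: "72" }],
});
console.log(checklist);
```

Returning `false` instead of throwing lets the backend simply ask the user to repeat an utterance it could not map to a checklist item.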

Such an app would be incredibly useful in a hospital, where nurses perform and log countless vital-sign checks on patients every day.

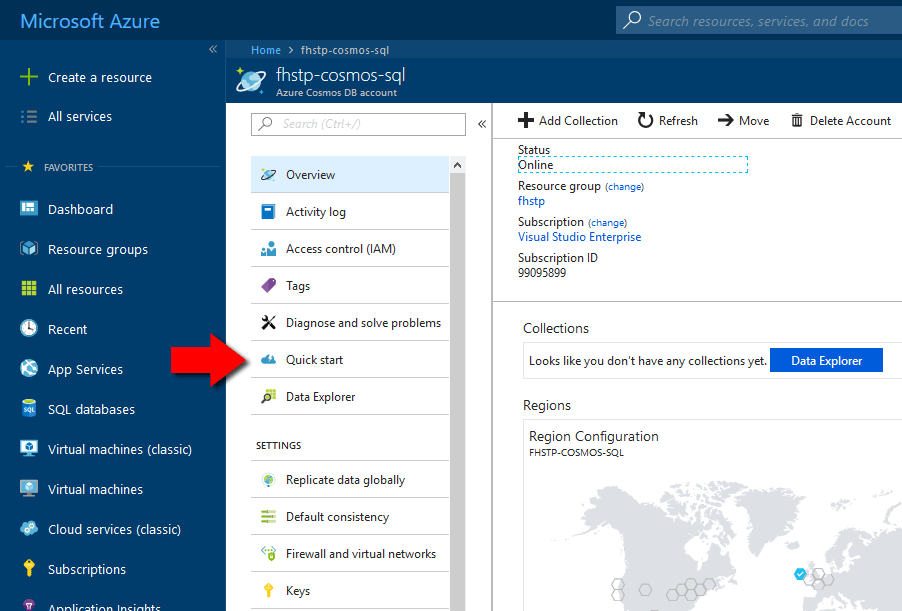

In part 1 of this article, I explained the overall architecture of the service. In this part, we get hands-on and start implementing the Node.js backend, which will ultimately handle all of the central messaging. It communicates both with the client user interface running in the browser and with the Conversational Language Understanding (CLU) service in the Azure cloud.
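For the cloud side of that communication, the following is a minimal sketch of how a Node.js backend could send one utterance to the CLU runtime REST API. It is based on my understanding of the `analyze-conversations` operation with api-version `2022-05-01`; please check the current Azure documentation before relying on it. The endpoint, key, project name, and deployment name are placeholders you get from your own Azure resource, and the helper names (`buildCluUrl`, `buildCluRequest`, `parseCluResponse`, `analyzeUtterance`) are my own.

```javascript
// Assumed CLU runtime API version; newer versions may exist.
const API_VERSION = "2022-05-01";

// Builds the request URL from the resource endpoint,
// e.g. "https://<your-resource>.cognitiveservices.azure.com".
function buildCluUrl(endpoint) {
  const base = endpoint.replace(/\/+$/, ""); // avoid a double slash
  return `${base}/language/:analyze-conversations?api-version=${API_VERSION}`;
}

// Builds the JSON body for a single-utterance conversation analysis.
function buildCluRequest(text, projectName, deploymentName) {
  return {
    kind: "Conversation",
    analysisInput: {
      conversationItem: { id: "1", participantId: "user", text },
    },
    parameters: {
      projectName,
      deploymentName,
      stringIndexType: "TextElement_V8",
    },
  };
}

// Keeps only the parts of the response the backend needs.
function parseCluResponse(json) {
  const prediction = json.result.prediction;
  return {
    topIntent: prediction.topIntent,
    entities: prediction.entities.map((e) => ({ category: e.category, text: e.text })),
  };
}

// Sends one utterance to CLU. Uses the global fetch() of Node.js 18+.
async function analyzeUtterance(endpoint, key, text, projectName, deploymentName) {
  const response = await fetch(buildCluUrl(endpoint), {
    method: "POST",
    headers: {
      "Ocp-Apim-Subscription-Key": key,
      "Content-Type": "application/json",
    },
    body: JSON.stringify(buildCluRequest(text, projectName, deploymentName)),
  });
  if (!response.ok) {
    throw new Error(`CLU request failed with HTTP ${response.status}`);
  }
  return parseCluResponse(await response.json());
}
```

Keeping request building and response parsing in separate pure functions makes them easy to test without network access; only `analyzeUtterance` actually talks to Azure.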

Creating the Node Backend

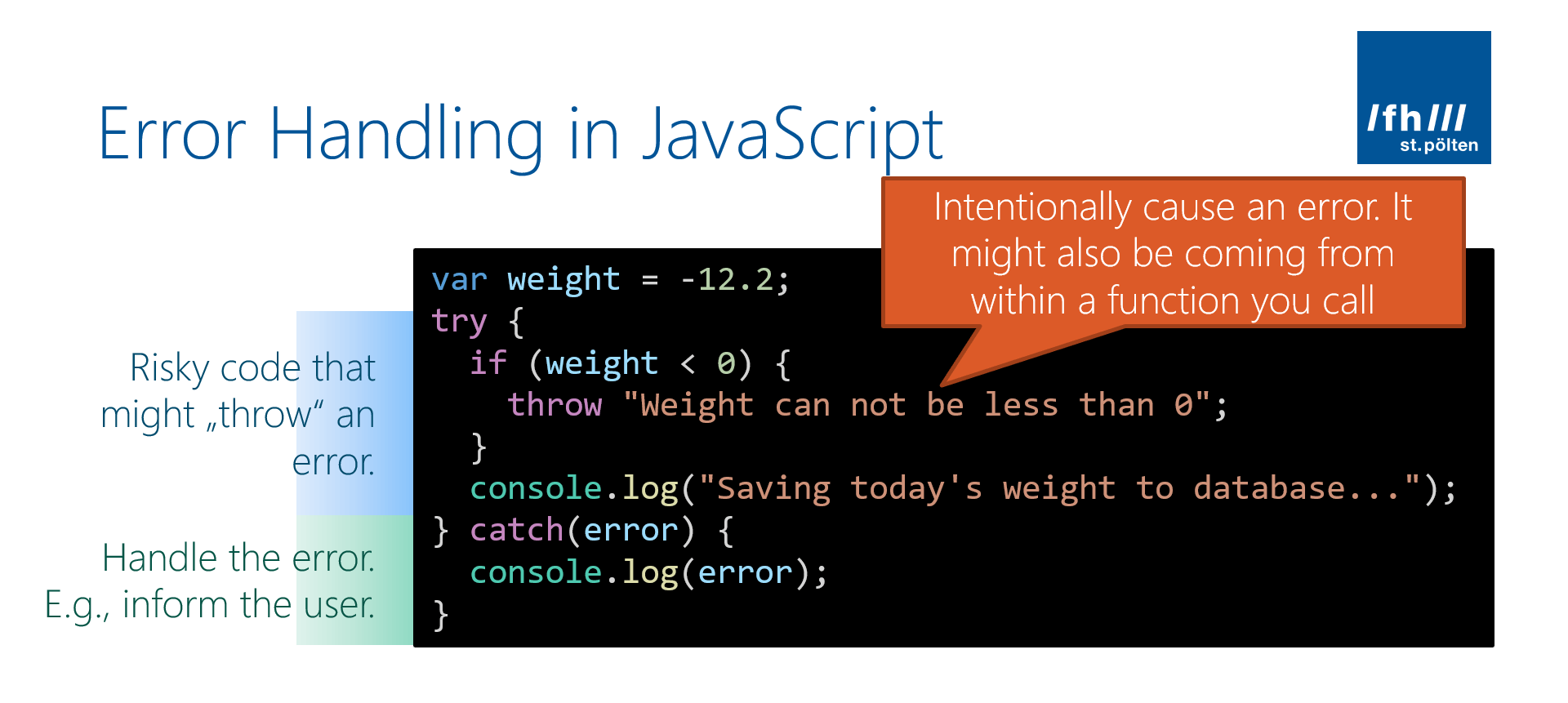

Node.js is a great fit for such a service. It’s easy to set up and uses JavaScript for development. During development, the code runs locally, which allows rapid testing, yet it’s easy to deploy it to a dedicated server or the cloud later.
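As an illustration of how little is needed to get a local backend running, here is a minimal sketch using only the built-in `node:http` module. It is not the article's final backend: the placeholder start page and the `handleRequest`/`startServer` names are my own assumptions, and a real version would serve the HTML5 client files and add API routes.

```javascript
const http = require("node:http");

// Placeholder page for the browser client; the real app would serve its
// HTML5 front end from files instead.
const INDEX_HTML = "<!DOCTYPE html><title>Checklist</title><p>Listening...</p>";

// Routes incoming requests: the start page on "/", 404 for everything else.
function handleRequest(req, res) {
  if (req.method === "GET" && req.url === "/") {
    res.writeHead(200, { "Content-Type": "text/html; charset=utf-8" });
    res.end(INDEX_HTML);
    return;
  }
  res.writeHead(404, { "Content-Type": "text/plain; charset=utf-8" });
  res.end("Not found");
}

// Starts the backend on the given port (e.g. 3000 for local development).
function startServer(port) {
  const server = http.createServer(handleRequest);
  server.listen(port, () => {
    console.log(`Backend listening on http://localhost:${server.address().port}`);
  });
  return server;
}
```

Calling `startServer(3000)` from a script and running it with `node` serves the page at http://localhost:3000. When moving to a server or the cloud, the same code can take its port from an environment variable such as `process.env.PORT` instead of a hard-coded value.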

I’m using the latest Node.js LTS release (currently version 18) and the free Visual Studio Code IDE to edit the script files.
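Since newer Node.js releases add features a backend may depend on (for example, the global `fetch()` function is available by default from version 18), a small guard at the top of the entry script can stop early on older runtimes. This is my own optional addition, not part of the sample app; the threshold of 18 simply mirrors the LTS version mentioned above, and `meetsMinimumNodeVersion` is a hypothetical helper name.

```javascript
// Returns true if a Node.js version string such as "18.12.1"
// (the format of process.versions.node, without a leading "v")
// meets the minimum major version.
function meetsMinimumNodeVersion(version, minMajor) {
  const major = Number.parseInt(version.split(".")[0], 10);
  return Number.isInteger(major) && major >= minMajor;
}

// Fail early if the runtime is older than the LTS release used here.
if (!meetsMinimumNodeVersion(process.versions.node, 18)) {
  console.error(`Node.js 18 or later is required, found ${process.versions.node}`);
  process.exit(1);
}
```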