Creating an Alexa-hosted skill is a fantastic way to start developing for voice assistants. However, you will eventually run into issues that you need to debug in code. Alexa offers local skill debugging through Visual Studio Code, but setting it up is a bit tricky. This guide will take you through the necessary steps.

Skill Environment

This guide focuses on a Python-based skill and uses Windows as the local development environment. Most of it also applies to other environments.

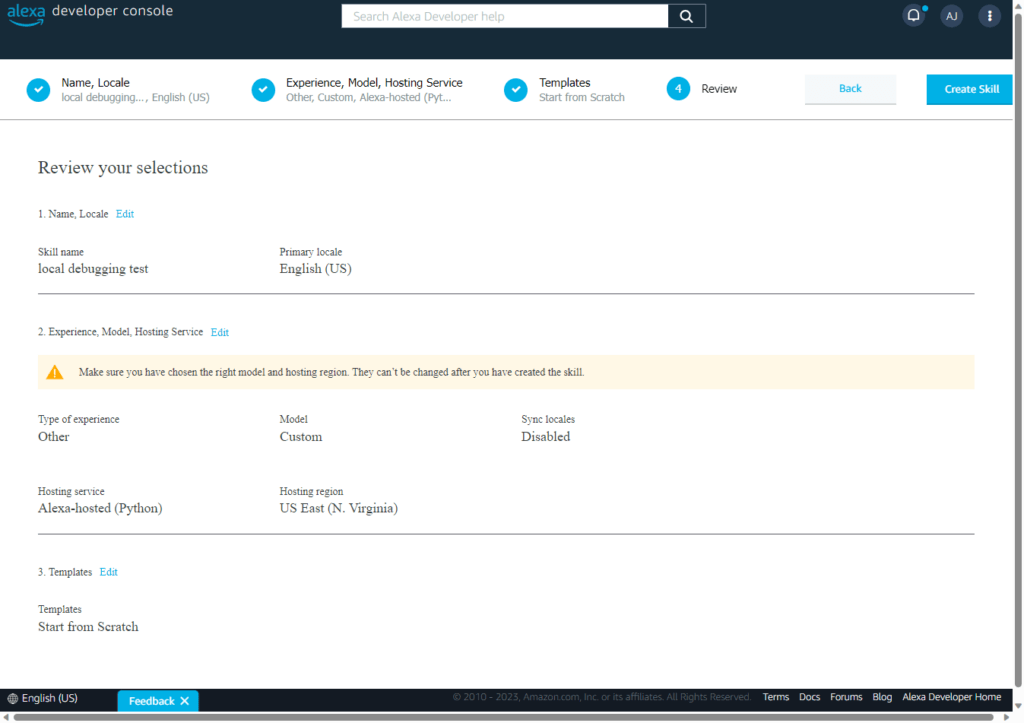

I’ll start with a blank skill. First, create the skill in the Alexa Developer Console. The skill name I’m using in this example is “local debugging test”. The “type of experience” is “Other”, with a “Custom” model, as I’d like to start with a minimal blank skill. In the “Hosting services” category, choose the “Alexa-hosted (Python)” category. In the last step about templates, stick with “Start from Scratch”, which will give you a minimal Hello World-type voice interaction. The following screenshot summarizes the settings:
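To get a feel for what you will be stepping through in the debugger later, here is a rough sketch of the kind of request and response a Hello World-type interaction exchanges. This is not the code the template generates (the generated skill uses the ASK SDK for Python); it is a plain-Python illustration with a heavily trimmed request envelope. The function name `handle_request`, the intent name `HelloWorldIntent`, and the speech texts are assumptions made for this example.

```python
import json


def handle_request(event: dict) -> dict:
    """Build a minimal Alexa-style response for a (trimmed) request envelope.

    Hypothetical helper for illustration only; a real skill would route
    requests through the ASK SDK's request handlers instead.
    """
    request = event["request"]
    request_type = request["type"]

    if request_type == "LaunchRequest":
        # The user opened the skill without asking for anything specific.
        text = "Welcome, you can say Hello or Help."
        end_session = False
    elif (
        request_type == "IntentRequest"
        and request["intent"]["name"] == "HelloWorldIntent"  # assumed intent name
    ):
        text = "Hello World!"
        end_session = True
    else:
        # Fallback for anything this sketch does not cover.
        text = "Sorry, I had trouble doing what you asked."
        end_session = True

    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": end_session,
        },
    }


# A real envelope also carries session and context data; omitted here.
launch_event = {"version": "1.0", "request": {"type": "LaunchRequest"}}
response = handle_request(launch_event)
print(json.dumps(response, indent=2))
```

When you debug locally, the breakpoints you set will typically sit in the equivalent of the branches above, so it helps to know which request type triggers which one.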
