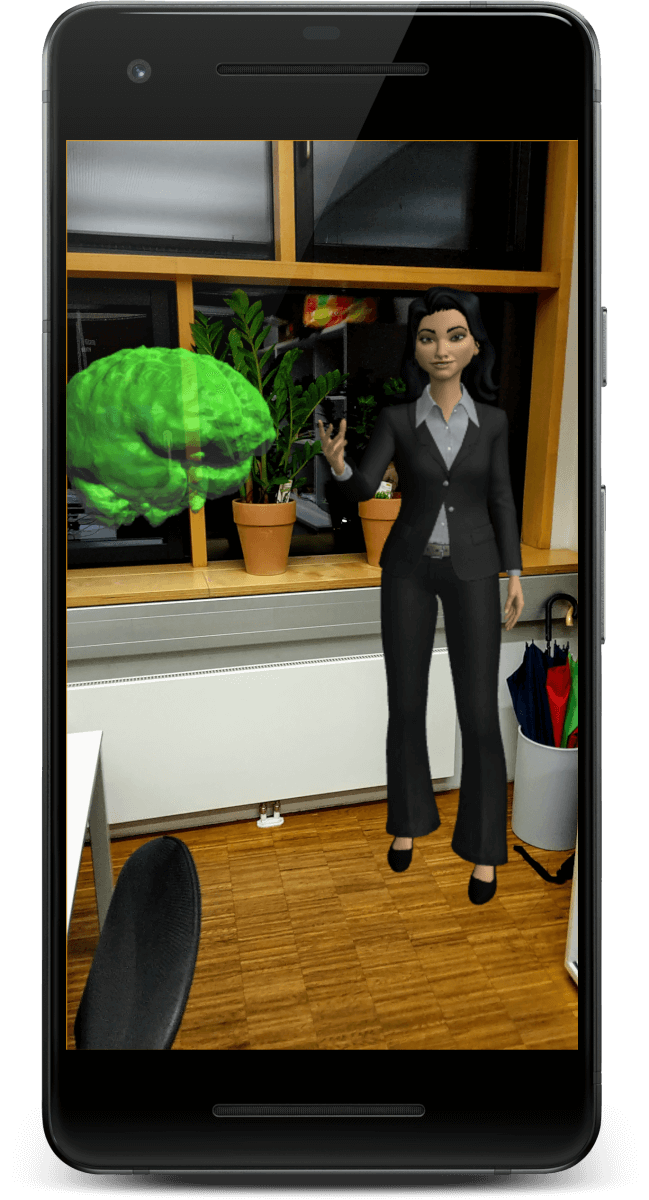

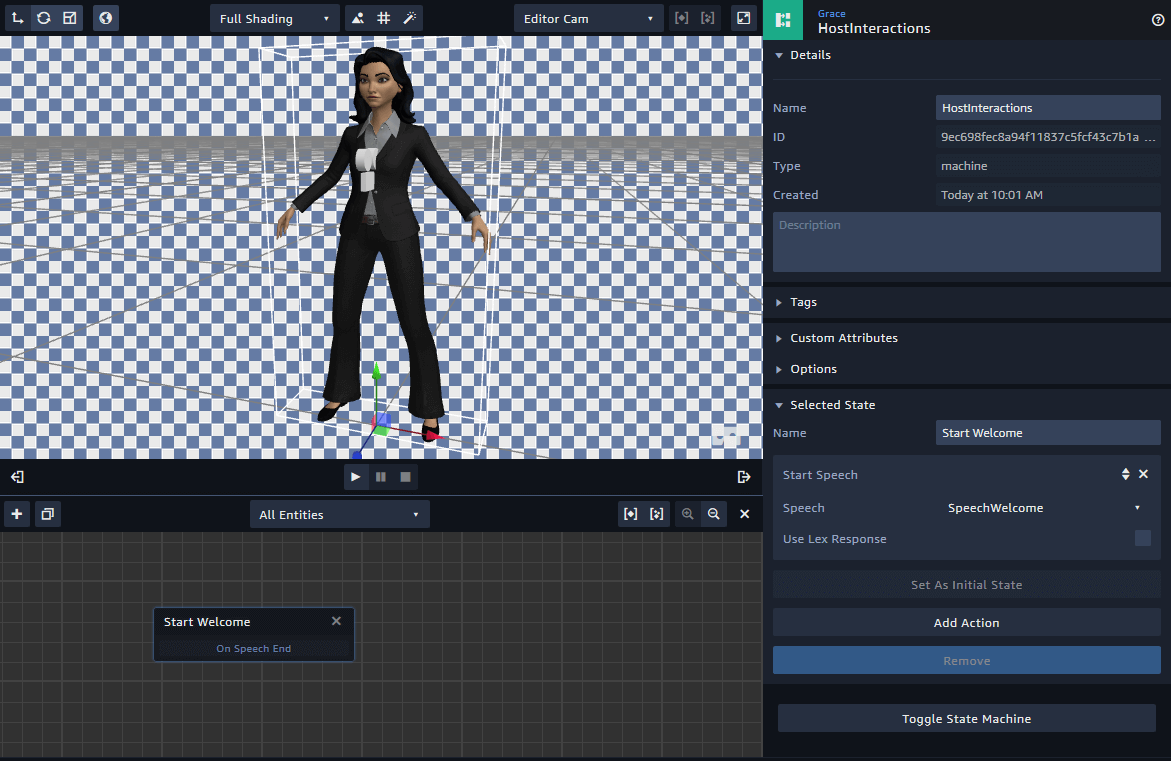

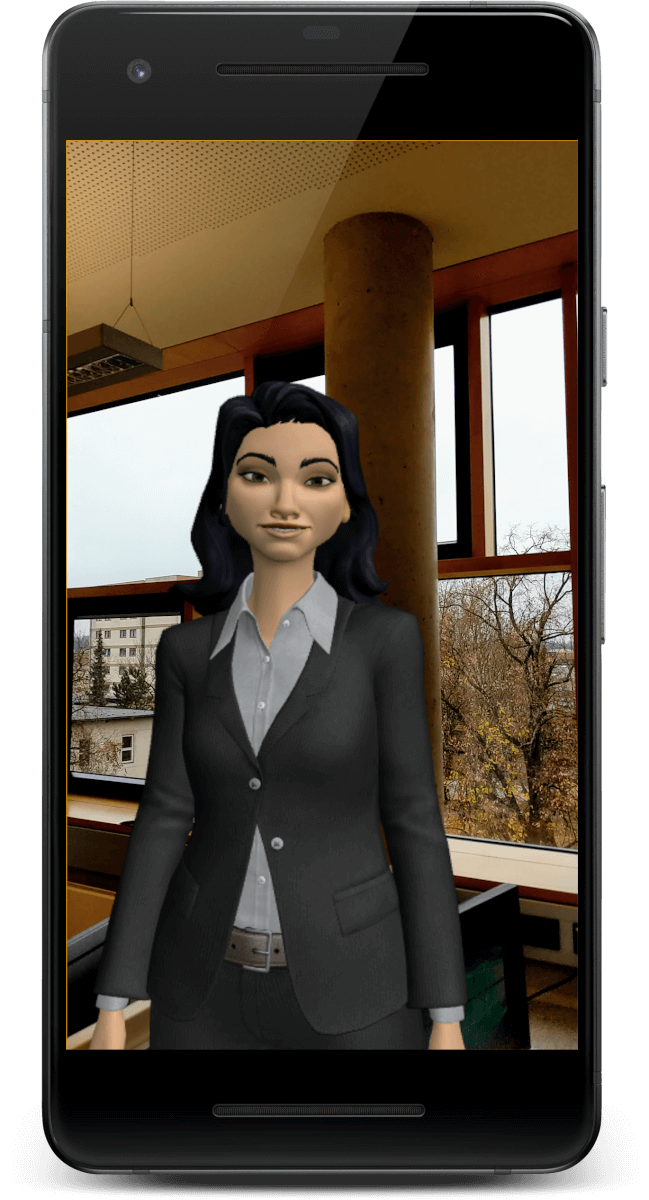

Integrate the real world into your Amazon Sumerian AR app and place virtual content into the user’s environment. Learn how to anchor multiple 3D models that have a fixed spatial relationship.

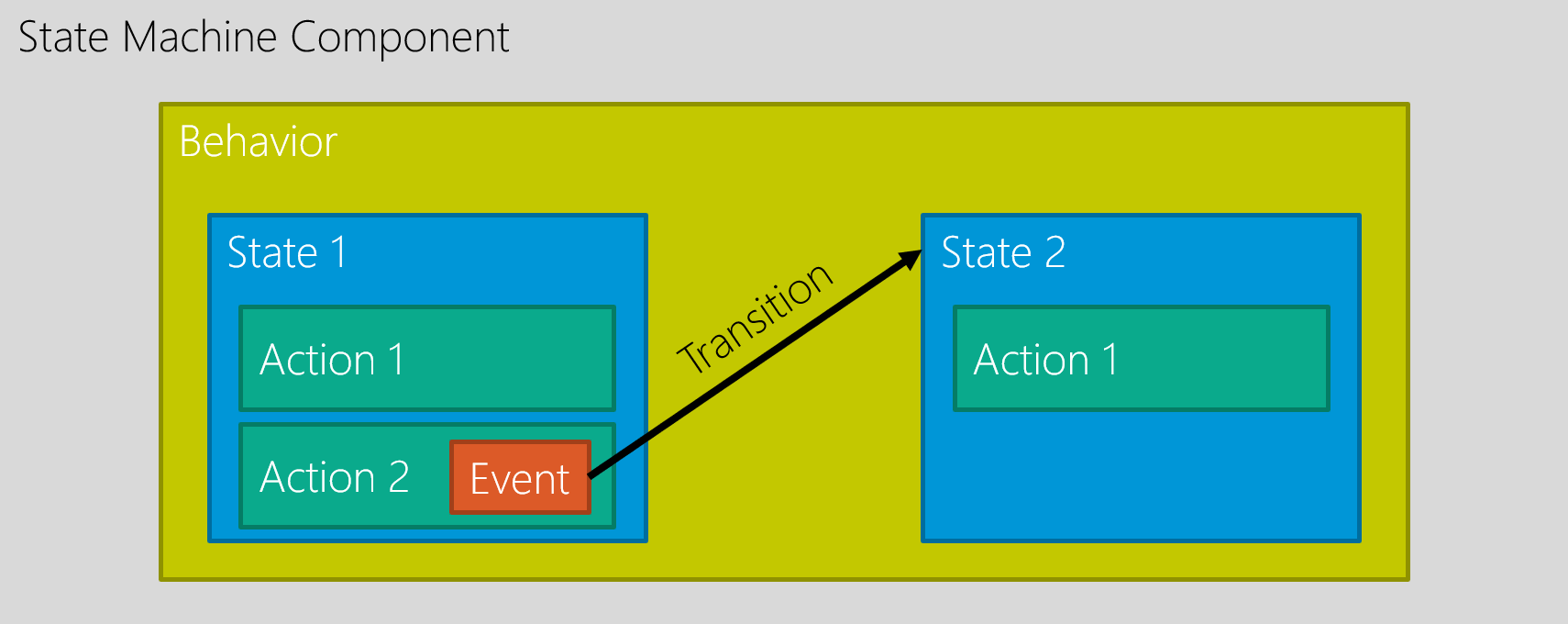

This article builds on the AR project setup from part 1 and on the host extended with speech & gestures from part 2.

Import Custom 3D Models

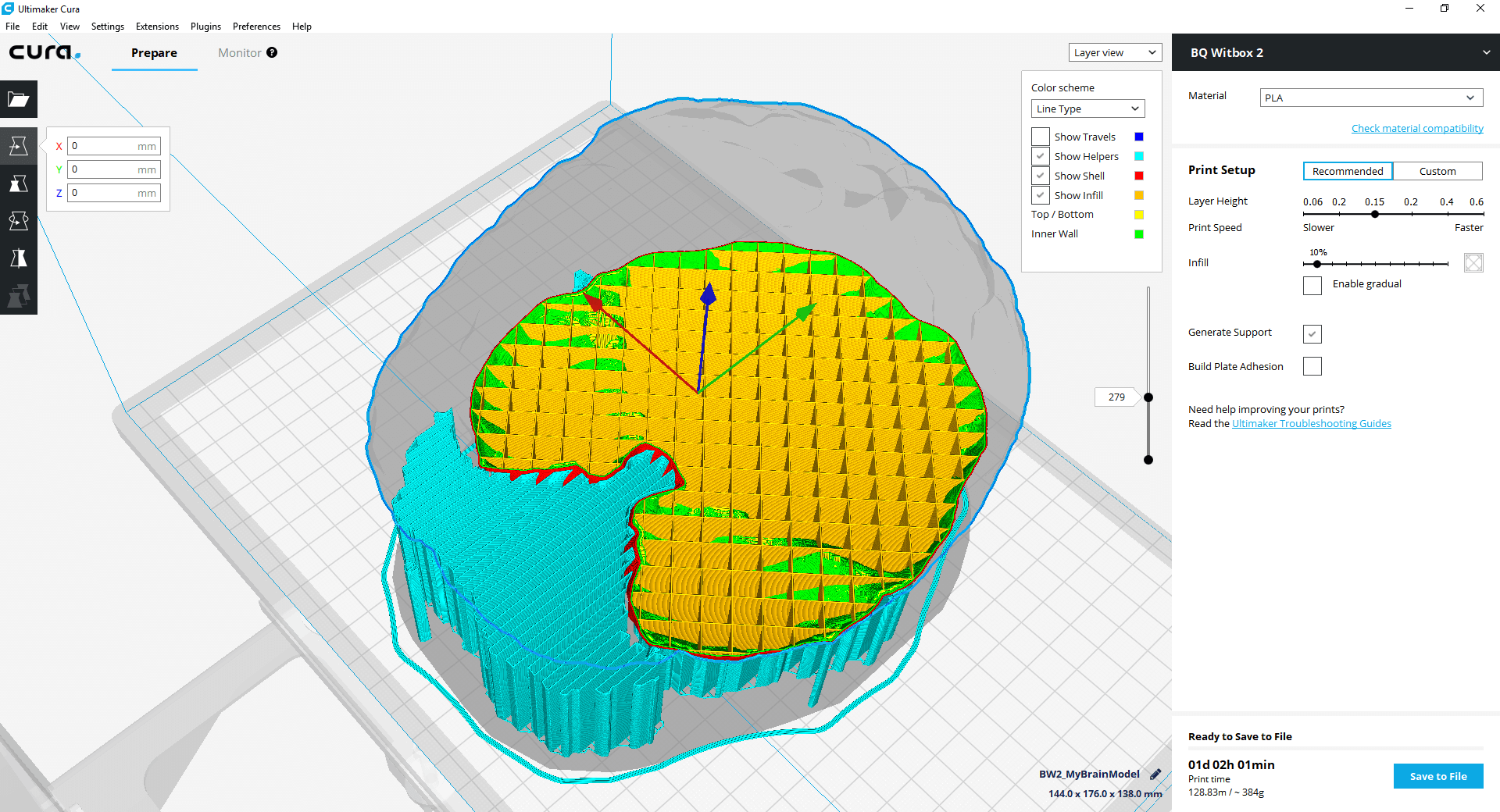

While Sumerian comes with a few ready-made assets, you will often need to add custom 3D models to your scene as well. Currently, Sumerian supports importing two common file types: .fbx (also used by Unity and Autodesk software) and .obj (a very widespread format).

Simply drag & drop such a model from your computer to your assets panel. Alternatively, you can also use the “Import Assets” button in the top bar and then use “Browse” to choose the file to upload.

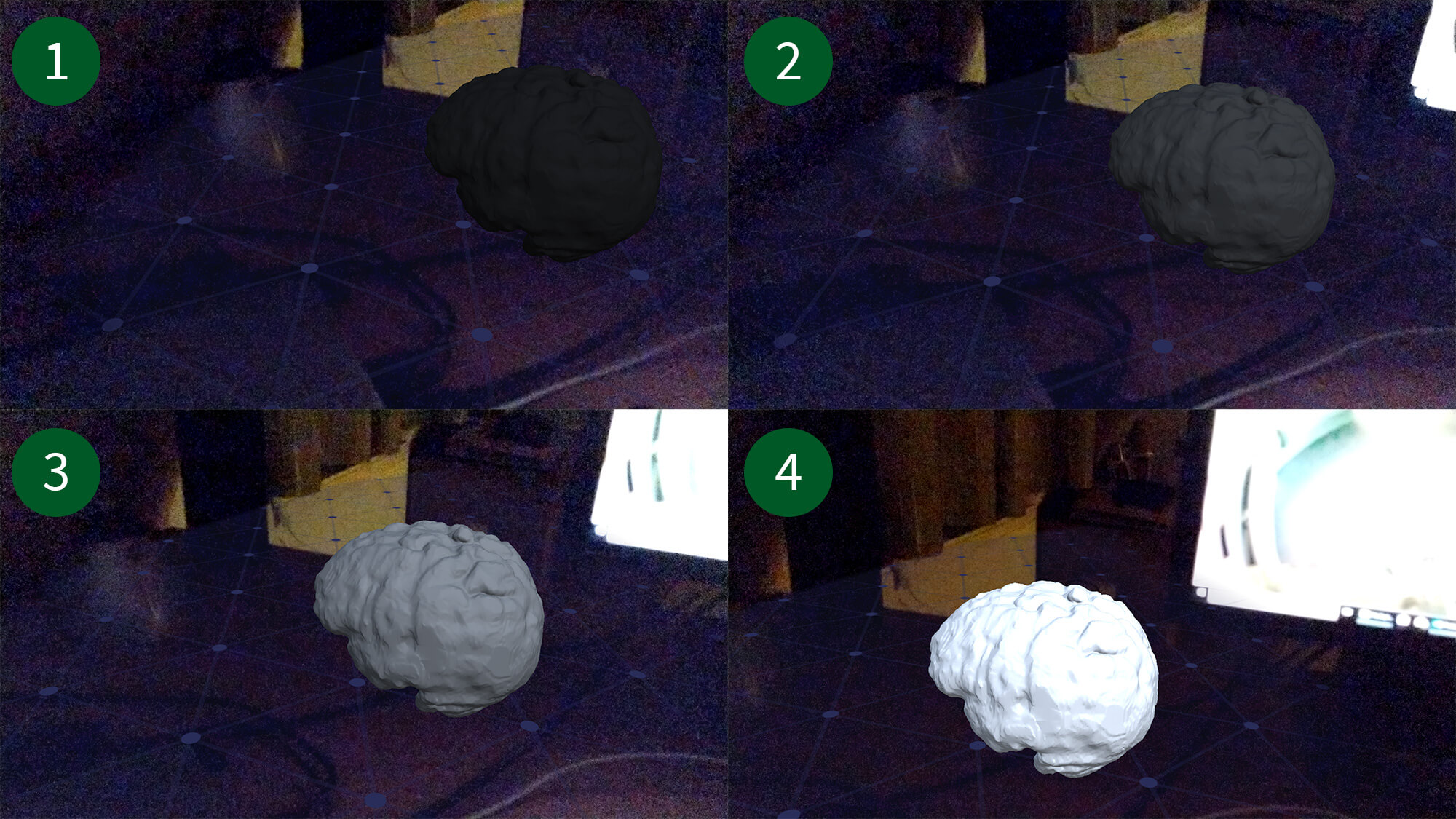
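If you have a folder of downloaded models, it can help to check up front which files are already in one of the two formats named above. The following is a minimal sketch that runs on your own computer and is not part of Sumerian. The helper names `is_importable` and `split_by_support` are made up for illustration, and it assumes that the file extension alone (compared case-insensitively) is a good enough indicator of the format.

```python
from pathlib import Path

# The two import formats named in this article.
# Assumption: the extension is compared case-insensitively.
SUPPORTED_EXTENSIONS = {".fbx", ".obj"}


def is_importable(filename: str) -> bool:
    """Return True if the file name ends in one of the supported extensions."""
    return Path(filename).suffix.lower() in SUPPORTED_EXTENSIONS


def split_by_support(filenames):
    """Split file names into (importable, needs_conversion), keeping their order."""
    importable = [name for name in filenames if is_importable(name)]
    needs_conversion = [name for name in filenames if not is_importable(name)]
    return importable, needs_conversion


if __name__ == "__main__":
    ready, convert = split_by_support(
        ["chair.fbx", "Lamp.OBJ", "robot.gltf", "notes.txt"]
    )
    print("Ready to import:", ready)
    print("Convert first:", convert)
```

Files that end up in the second list would have to be converted to .fbx or .obj first, for example by exporting them from a 3D tool such as Blender.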

Where do you get these 3D models? You can create them yourself using Blender, Maya or any other tool. Alternatively, go to great free portals like Google Poly and Microsoft Remix 3D. The objects there are usually low-poly and therefore well suited for mobile phones.
