Integrate the real world into your Amazon Sumerian AR app. Plus: place virtual content into the user’s environment. Learn how to anchor multiple 3D models that have a fixed spatial relationship.

This article builds on the AR project setup from part 1 and on the host that we extended with speech & gestures in part 2.

Import Custom 3D Models

While Sumerian comes with a few ready-made assets, you will often need to add custom 3D models to your scene as well. Currently, Sumerian supports importing two common file types: .fbx (also used by Unity and Autodesk software) and .obj (a very widespread format).

Simply drag & drop such a model from your computer to your assets panel. Alternatively, you can also use the “Import Assets” button in the top bar and then use “Browse” to choose the file to upload.

Where do you get these 3D models? You can create them yourself using Blender, Maya or any other tool. Alternatively, browse free portals like Google Poly and Microsoft Remix 3D. These objects are usually low-poly and therefore well-suited for mobile phones.

Whenever you download and use a 3D model, make sure you pay attention to the licensing terms. Even if the model is free, you often have to credit the author. This is also the case for the CC-BY license, which is common on Google Poly.

Go ahead and find a suitable 3D model; then, add it to your scene. For this article, I’m using the “Brain Model from MRI” that I uploaded to Google Poly.

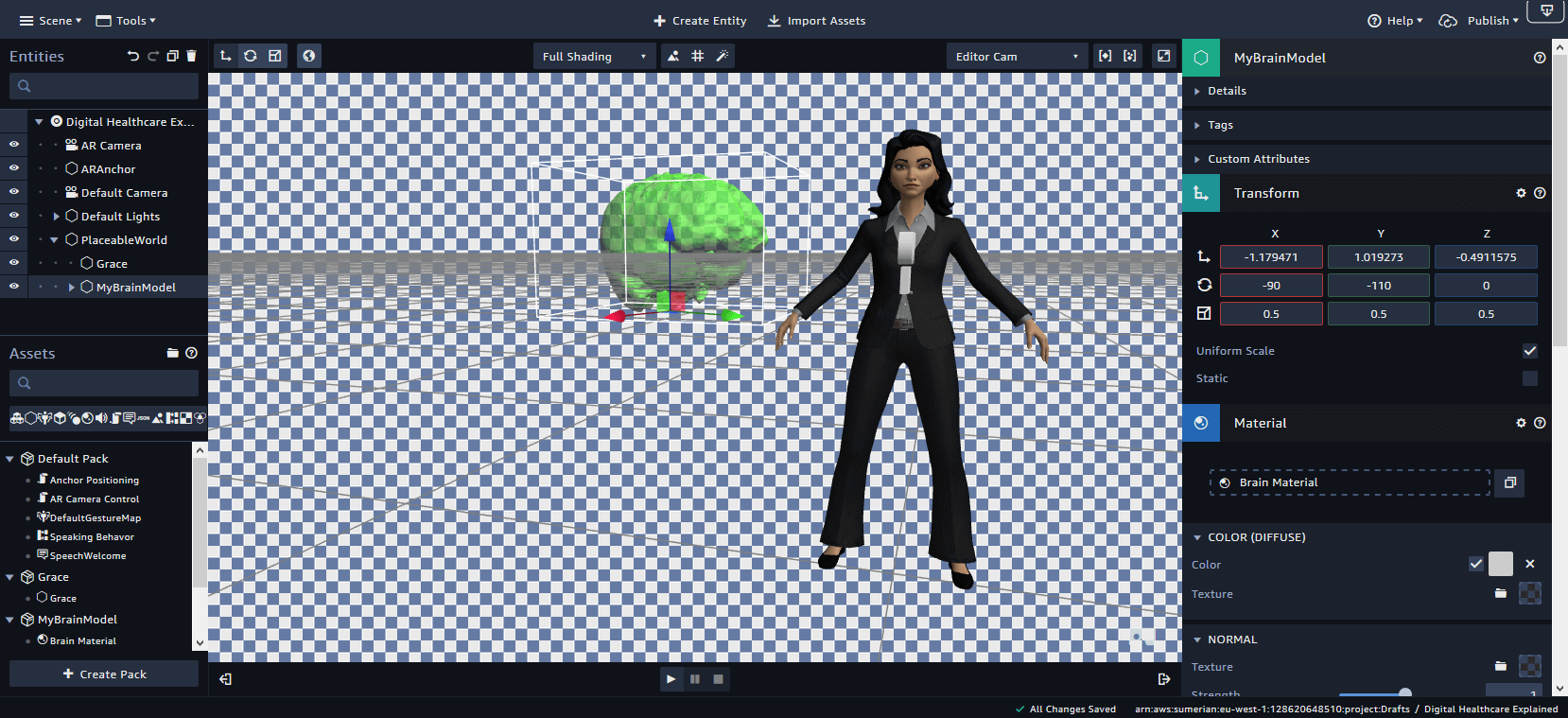

This is what it looks like imported to Amazon Sumerian. I down-scaled the object a bit, moved it farther up and rotated it. Additionally, I changed the material color to a transparent green.

Position Items in the Real World

To place an item in the real world, you need to attach an anchor to it. The respective AR subsystem of Android or iOS then ensures that the 3D object always stays in the same spot in the user’s surroundings.

The Amazon tutorials (Google ARCore, Apple ARKit) include the necessary steps to attach a script called “Anchor Positioning” to the entity you want to place.

Essentially, you need to add a script component to your entity. Then create a new script, for example called “Anchor Positioning”. The source code is just a few lines and you can copy and paste it from the tutorial.

Whenever the user taps on the screen, the phone will now place the 3D object at that position. Note that this only works reliably after the phone has found real-world planes to place the object on, e.g., the floor or a table.

It can take a few seconds until the phone recognizes these planes in the real world. During these seconds, the user should be prompted to move the phone around.

ARCore and ARKit can’t make sense of the environment if the phone is static. This would require a depth camera like the one in the Microsoft HoloLens, which current smartphones lack. Therefore, in a more polished and final version of your app, I’d recommend that you prompt the user to move the phone around to scan the room.
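To make the idea of waiting for planes more concrete, here is a minimal sketch in plain JavaScript. It is not the script from the Amazon tutorials and does not call the Sumerian API. The names `createPlacementGate`, `onPlaneDetected`, `onTap` and the injected `hitTest` function are my own assumptions for illustration; in a real scene, you would wire them to whatever events your AR script receives.

```javascript
// Hypothetical helper (not part of the Sumerian API): ignores taps until the
// AR subsystem has reported at least one plane, and tells the UI when to show
// a "move your phone around" hint.
function createPlacementGate(hitTest) {
  let planeCount = 0;
  let placedAt = null;

  return {
    // Call this whenever a newly detected plane is reported.
    onPlaneDetected() {
      planeCount += 1;
    },
    // True while the app should still ask the user to scan the room.
    shouldShowScanHint() {
      return planeCount === 0;
    },
    // Handles a tap at normalized screen coordinates (0..1 on both axes).
    // Returns the placement position, or null if nothing was placed.
    onTap(x, y) {
      if (planeCount === 0) {
        return null; // no planes yet: ignore the tap and keep the hint visible
      }
      const hit = hitTest(x, y); // assumed to return {x, y, z} or null
      if (hit !== null) {
        placedAt = hit;
      }
      return hit;
    },
    // Last successful placement, or null if the user has not placed anything.
    getPlacement() {
      return placedAt;
    },
  };
}

// Usage with a fake hit test that always reports a point on the floor.
const gate = createPlacementGate((x, y) => ({ x: x - 0.5, y: 0, z: -1 - y }));
console.log(gate.shouldShowScanHint()); // true
console.log(gate.onTap(0.5, 0.5));      // null
gate.onPlaneDetected();
console.log(gate.onTap(0.5, 0.5));      // { x: 0, y: 0, z: -1.5 }
```

Passing the hit test in as a function keeps the sketch independent of the platform: the same gate works no matter whether ARCore or ARKit delivers the planes.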

Object Hierarchy

Often, you need to anchor multiple objects at once. For example, your host explains a topic based on other 3D models. Of course, you could let the user position the host on the floor and then the 3D model on the table. However, that’d be rather complicated for the user. Make sure the user interaction required to get the first meaningful results is as simple as possible.

Instead, it’s easier if the user just taps once on the screen to position your whole AR environment. The major point of reference is where the host stands on the floor in your room. Furthermore, the host and other 3D models are tied together. That way, you can also be sure that the spatial relationship between objects is always the same. Otherwise, how could the host realistically explain the object if the user places it behind the host? After all, you can never be sure how the user interacts with your app.
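Why does a shared parent keep the spatial relationship fixed? The following sketch shows the underlying math in plain JavaScript, without using the Sumerian API. The offsets, the `toWorld` helper and the restriction to a rotation around the vertical axis are assumptions I made to keep the example short; Sumerian handles the full transform hierarchy for you.

```javascript
// Plain-math sketch (no Sumerian API): children store offsets relative to a parent.
// Hypothetical layout: host at the parent's origin, brain model 0.6 m to the side
// and 1.2 m up.
const children = {
  host: { x: 0, y: 0, z: 0 },
  brain: { x: 0.6, y: 1.2, z: 0 },
};

// Converts a child's local offset into world coordinates for a parent placed at
// `anchor` and rotated by `yaw` radians around the vertical (y) axis.
function toWorld(local, anchor, yaw) {
  const cos = Math.cos(yaw);
  const sin = Math.sin(yaw);
  return {
    x: anchor.x + local.x * cos + local.z * sin,
    y: anchor.y + local.y,
    z: anchor.z - local.x * sin + local.z * cos,
  };
}

// Euclidean distance between two points.
function distance(a, b) {
  return Math.hypot(a.x - b.x, a.y - b.y, a.z - b.z);
}

// Two different taps place the parent at different anchors and orientations.
const placements = [
  { anchor: { x: 0, y: 0, z: -1 }, yaw: 0 },
  { anchor: { x: 2, y: -0.3, z: -4 }, yaw: Math.PI / 3 },
];

for (const { anchor, yaw } of placements) {
  const host = toWorld(children.host, anchor, yaw);
  const brain = toWorld(children.brain, anchor, yaw);
  // The distance between host and brain is the same for every placement.
  console.log(distance(host, brain).toFixed(3));
}
```

Only the parent's position and rotation change with each tap. The children's local offsets stay untouched, so the host always stands in the same relation to the model it explains.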

Scene Setup

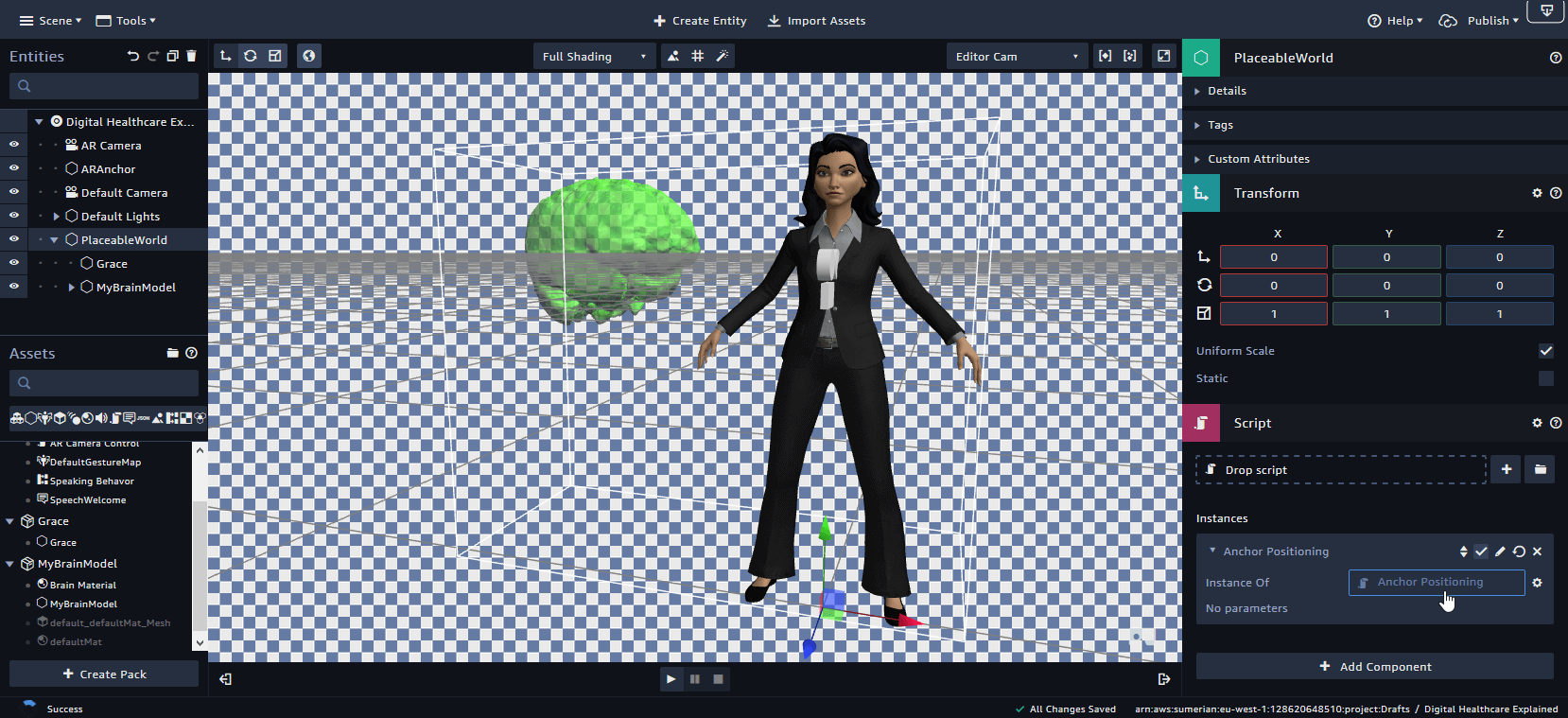

How do you set up the scene accordingly in Sumerian? In the editor, create a new empty entity through “+ Create Entity” in the top menu. Call it “PlaceableWorld” through the inspector.

Then, drag and drop the host and your other 3D models onto the “PlaceableWorld” in the “Entities” panel. This makes them children of that empty entity. This is also visually indicated in the editor: these elements are below the parent, as well as slightly indented.

Now, add the “Anchor Positioning” script to the “PlaceableWorld” entity. Don’t place it directly on the host. For reference, your scene should now contain the host with the brain 3D model placed next to it, both as children of “PlaceableWorld”.

Anchor Positioning Results

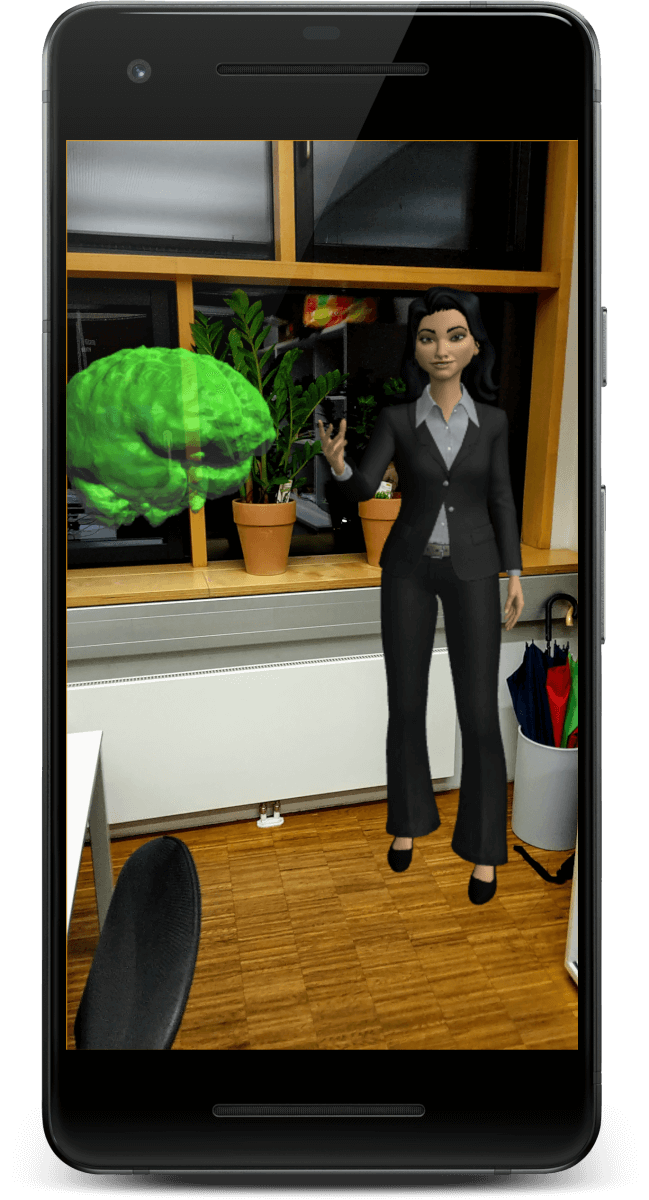

This is what the scene looks like on the phone in the current state. The host has been placed on the floor through a tap on the screen. The custom 3D model is next to the host, always in the same spatial relationship.

Of course, the user might place the host very close to a wall, so that the custom 3D model appears to be behind the wall. Resolving these situations automatically would require some additional scripting, along with specified constraints for alternate positions. Both ARKit and ARCore support wall detection in the latest versions. However, as the object is rather small, it’s OK if we do not take this route yet. If the custom 3D model is larger, it would usually be a better idea anyway to let the user place the object manually.
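If you want to explore the automatic route later, the check itself is mostly simple geometry. The following is a minimal sketch in plain JavaScript that is not tied to any Sumerian, ARCore or ARKit call. I assume that a detected wall is available as a point on the wall plus a unit normal pointing into the room, and that the candidate offsets are given in world axes; `signedDistanceToWall` and `pickOffset` are hypothetical helper names.

```javascript
// Hypothetical helper: a wall is described by a point on it and a unit normal
// that points into the room. Positive results are on the room side of the wall.
function signedDistanceToWall(position, wall) {
  return (
    (position.x - wall.point.x) * wall.normal.x +
    (position.y - wall.point.y) * wall.normal.y +
    (position.z - wall.point.z) * wall.normal.z
  );
}

// Returns the first candidate offset (relative to the host) that keeps the model
// at least `margin` meters in front of the wall, or null if none fits.
function pickOffset(hostPosition, candidateOffsets, wall, margin) {
  for (const offset of candidateOffsets) {
    const position = {
      x: hostPosition.x + offset.x,
      y: hostPosition.y + offset.y,
      z: hostPosition.z + offset.z,
    };
    if (signedDistanceToWall(position, wall) >= margin) {
      return offset;
    }
  }
  return null;
}

// Example: wall at x = 1 facing the room (normal towards negative x).
const wall = { point: { x: 1, y: 0, z: 0 }, normal: { x: -1, y: 0, z: 0 } };
const host = { x: 0.7, y: 0, z: -2 };
const offsets = [
  { x: 0.6, y: 1.2, z: 0 },  // preferred: on one side of the host
  { x: -0.6, y: 1.2, z: 0 }, // fallback: on the other side of the host
];
console.log(pickOffset(host, offsets, wall, 0.2)); // picks the fallback offset
```

A `null` result means that no alternate position fits. In that case, asking the user to place the host again is probably the simplest reaction.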

Coming up next

In the first three parts of the series, we’ve set up the scene. In the following part, we’ll look at user interaction. How do you trigger speech for the host when the user taps on the 3D model? A small preview hint: this works with messages sent within the scene. The great news is that you still don’t need to write any code to get that working!

Amazon Sumerian article series:

- Amazon Sumerian & Augmented Reality (Part 1)

- Speech & Gestures with Amazon Sumerian (Part 2)

- Custom 3D Models & AR Anchors in Amazon Sumerian (Part 3)

- User Interaction & Messages in Amazon Sumerian (Part 4)

- Animation & Timeline for AR with Amazon Sumerian (Part 5)

- Download, Export or Backup Amazon Sumerian Scenes (Part 6)