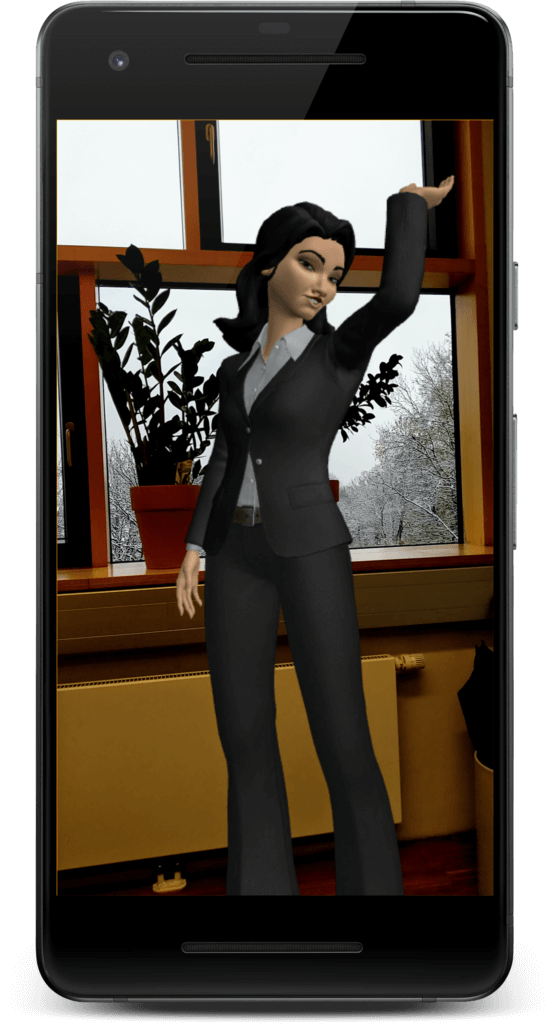

In the first part of the article series, we set up an Augmented Reality app with a host (= avatar). Now, we’ll dive deeper and integrate host interactions. To make the character more life-like, it should look at you. We’ll assign speech files and ensure that the gestures of the character match the spoken content.

But before we set out on these tasks, let’s take a minute to look at some vital concepts of Amazon Sumerian.

Behaviors, State Machines & Events

Unless you want your app to just show a static scene, you’ll need to integrate actions. An action can be triggered by interactive user input. Alternatively, you define what happens sequentially – e.g., first a new object appears in the scene, then the host avatar explains it.

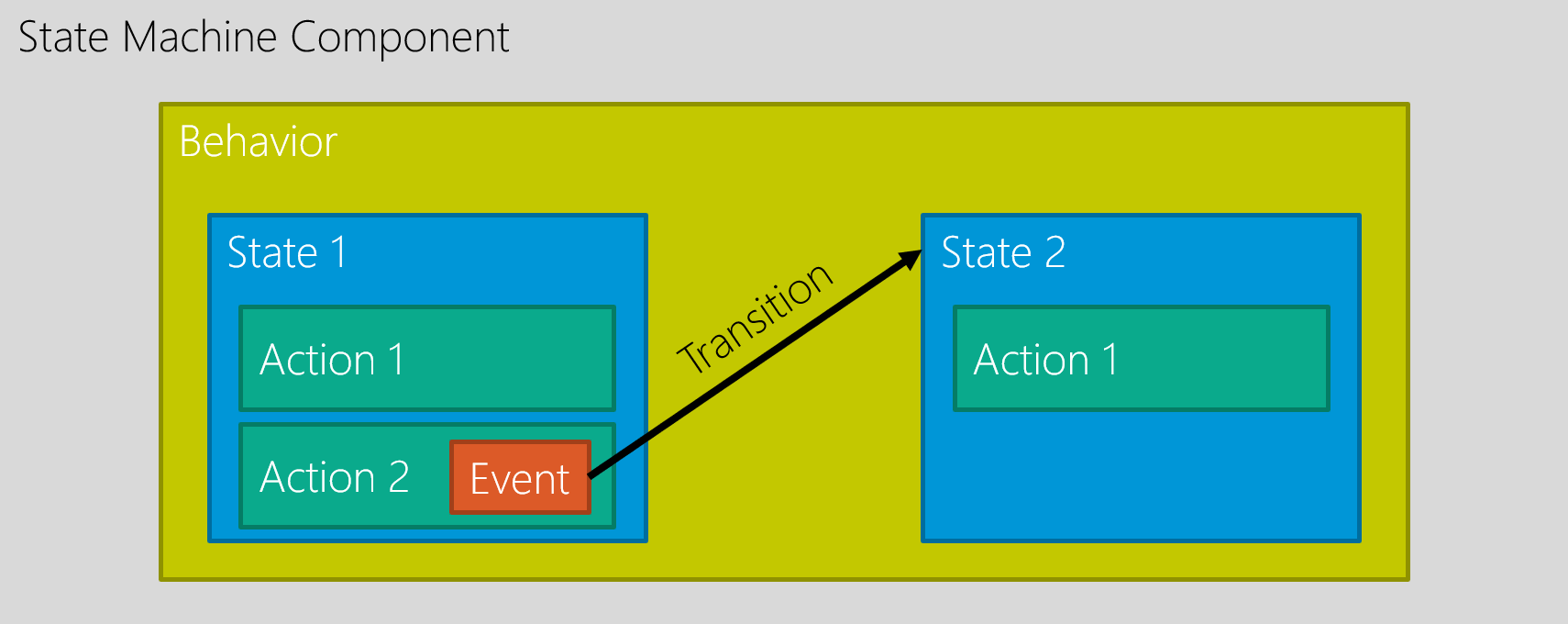

Technically, this is solved using a state machine. Each entity can have multiple different states. A behavior is a collection of these states. States transition from one to another based on actions & their events (= interactions or timing).

Each state has a name: e.g., “Waiting”, “Moving”, “Talking”. In addition, each state typically has one or more actions: e.g., waiting for five seconds, animating the movement of the entity or playing a sound file. Sumerian comes with pre-defined actions. Additionally, you can provide your own JavaScript code for custom or more complex tasks.

These actions can trigger events. Some examples: the wait time of 5 seconds is over, the movement is completed or the sound file has finished playing. A transition then moves the entity from the current state to a different one.

By combining several states together with transitions, you can make entities interact with the user or perform other tasks to ensure your scene is dynamic.
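To make the idea concrete, here is a minimal sketch of such a state machine in plain JavaScript. It does not use the Sumerian API; the function `createBehavior` and the event names (`waitCompleted`, `moveCompleted`, `soundFinished`) are made up for illustration, while the state names follow the examples above.

```javascript
// Minimal state machine sketch (plain JavaScript, not the Sumerian API).
// createBehavior and all event names are hypothetical.
function createBehavior(states, initialState) {
  let current = initialState;
  const visited = [initialState];
  return {
    get state() { return current; },
    get history() { return visited.slice(); },
    // An action reports an event. If the current state defines a
    // transition for that event, the behavior moves to the target state.
    handle(event) {
      const target = states[current].transitions[event];
      if (target === undefined) return false; // event is ignored in this state
      current = target;
      visited.push(current);
      return true;
    },
  };
}

const hostBehavior = createBehavior({
  Waiting: { transitions: { waitCompleted: "Moving" } },
  Moving:  { transitions: { moveCompleted: "Talking" } },
  Talking: { transitions: { soundFinished: "Waiting" } },
}, "Waiting");

hostBehavior.handle("waitCompleted"); // Waiting -> Moving
hostBehavior.handle("soundFinished"); // ignored: not defined for "Moving"
hostBehavior.handle("moveCompleted"); // Moving -> Talking
console.log(hostBehavior.state);
```

Note that an event only has an effect if the currently active state defines a transition for it. In the Sumerian editor, you wire up the same structure visually instead of writing it by hand.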

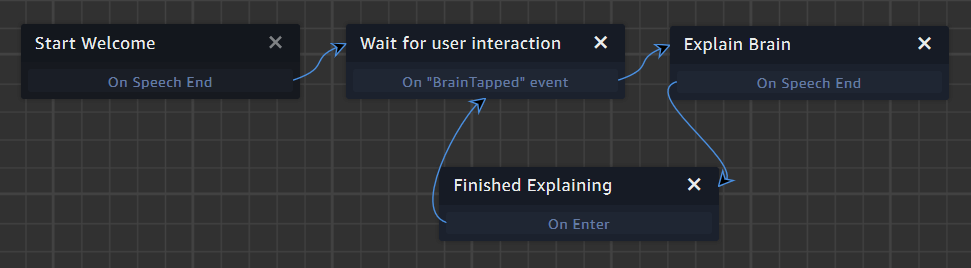

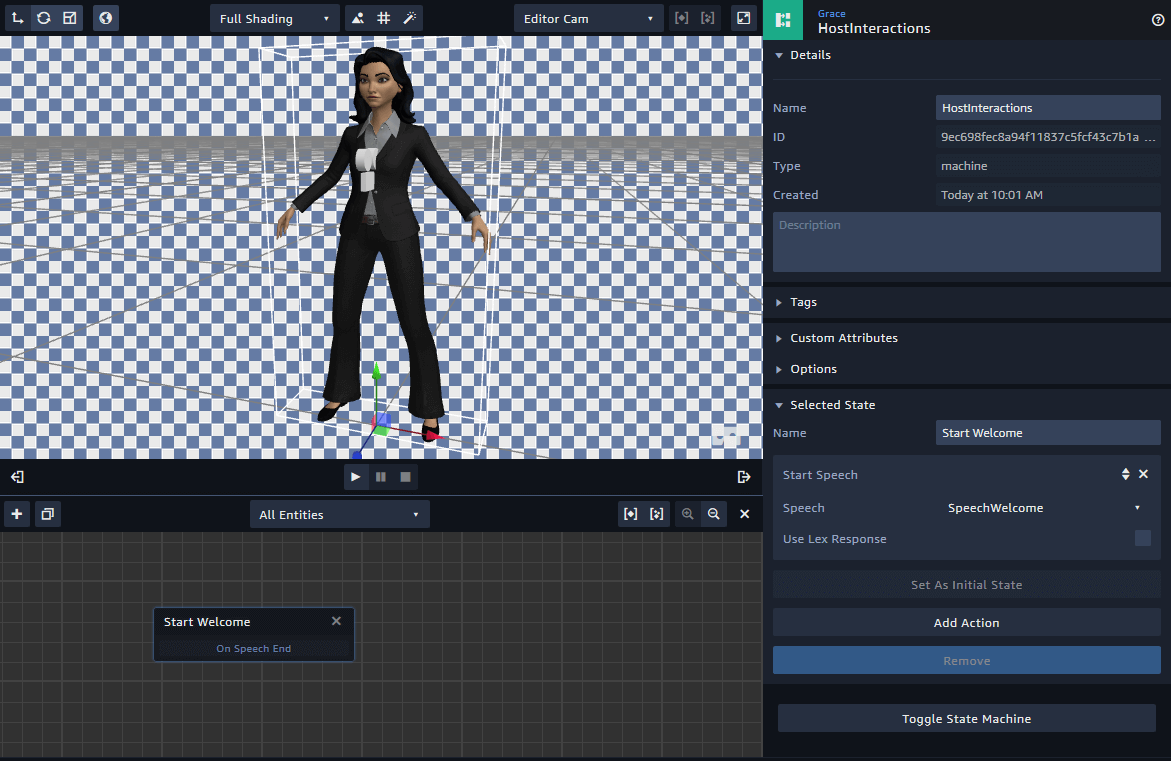

One of the states is set as the “initial state”, which is active when your app starts. In the state machine editor, it’s shown in a darker color. In the example below, that’s the “Start Welcome” state.

Event Messages

While state machines can change the properties of a single entity, you might want to influence the rest of your scene based on interactions / triggered events. E.g., when the user triggers a light switch, you activate the ceiling lamp.

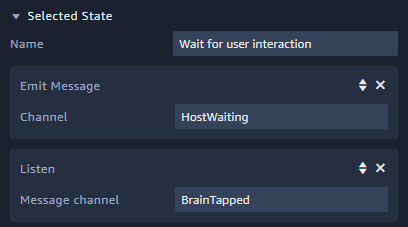

Within a state machine, this is handled by adding an “Emit message” action to a state. In the inspector panel on the right, you can then provide a textual name for the message.

Take a look at the screenshot below. The message “HostWaiting” is emitted when the entity transitions to the “Wait for user interaction” state.

Other entities can then react to this message and trigger their own state transitions. This is done through the “Listen” action as part of a state.

In the screenshot, the host listens / waits for the “BrainTapped” message. When it receives this message through the global message bus, the host entity transitions to another state (see the behavior screenshot above).

A message is called “Channel” in Sumerian. But in the end, it’s simply a short string that you define. You just need to make sure you use exactly the same spelling and upper/lowercase style both when emitting and when listening for events.
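The following sketch illustrates the emit / listen pattern and the case-sensitivity pitfall in plain JavaScript. It is not Sumerian's message bus: the `MessageBus` class and the target state name “Explain brain” are assumptions made for this example only.

```javascript
// Minimal publish/subscribe sketch (plain JavaScript, not the Sumerian API).
// MessageBus and the "Explain brain" state name are hypothetical.
class MessageBus {
  constructor() {
    this.listeners = new Map(); // channel name -> array of callbacks
  }
  listen(channel, callback) {
    if (!this.listeners.has(channel)) this.listeners.set(channel, []);
    this.listeners.get(channel).push(callback);
  }
  // Calls every listener of the channel and returns how many were called.
  emit(channel) {
    const callbacks = this.listeners.get(channel) || [];
    callbacks.forEach((callback) => callback());
    return callbacks.length;
  }
}

const bus = new MessageBus();
const hostEntity = { state: "Wait for user interaction" };

// The host reacts to "BrainTapped" by moving to another state.
bus.listen("BrainTapped", () => { hostEntity.state = "Explain brain"; });

const missed = bus.emit("braintapped");    // wrong case: no listener matches
const delivered = bus.emit("BrainTapped"); // exact match: the host reacts
console.log(missed, delivered, hostEntity.state);
```

The misspelled channel does not raise an error; the message simply reaches nobody. That is why a mismatch in spelling or case is easy to overlook.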

Speech Services

Voice is one of the best user interactions in AR and VR worlds. This will remain especially true until future AR headsets offer true hand recognition.

However, in mobile AR the user needs to hold the phone with one hand. Thus, only the other hand is available for gestures, limiting the fidelity of what you can do on the screen.

Amazon has two main services related to voice:

Hosts in Amazon Sumerian can express emotions and move their lips and hands according to what they say. You can automatically generate basic gestures that match the text contents. Using a markup language called SSML, you can customize the avatar animations to make the avatar’s movements even more realistic.

Cognito ID Connection

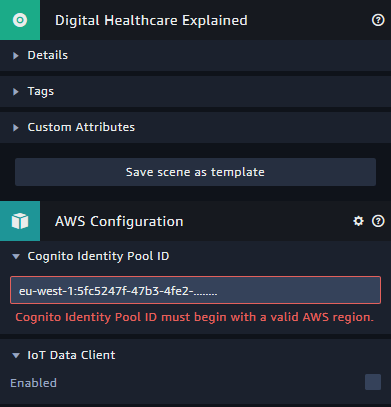

As mentioned in the first article, you need to connect your Amazon Sumerian scene to AWS services through the Cognito ID.

In the entities panel of your scene, click the top-most item that shows your scene name. You will now see the section “AWS Configuration” in the inspector panel. This is where you make the connection through the “Cognito Identity Pool ID”. Paste the ID you generated in part 1 into this text field.
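A pasted ID that is incomplete or of the wrong kind is a common source of confusion. The sketch below is a quick sanity check, assuming the usual `<region>:<uuid>` shape of an identity pool ID. The function name is hypothetical and the ID in the example is a placeholder, not a real pool.

```javascript
// Sketch: sanity-check a pasted Cognito Identity Pool ID.
// Assumes the usual "<region>:<uuid>" shape; looksLikeIdentityPoolId is a
// hypothetical helper, not part of any AWS SDK.
const POOL_ID_PATTERN =
  /^[a-z]{2}(?:-[a-z]+)+-\d:[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$/;

function looksLikeIdentityPoolId(value) {
  // trim() removes whitespace that is easily picked up when copying
  return POOL_ID_PATTERN.test(value.trim());
}

// Placeholder ID for illustration only.
const placeholderId = "us-east-1:12345678-abcd-ef01-2345-6789abcdef01";
const plausible = looksLikeIdentityPoolId(placeholderId);

// This has the shape of a user pool ID, which is a different kind of ID.
const wrongKind = looksLikeIdentityPoolId("us-east-1_AbCdEfGhI");
console.log(plausible, wrongKind);
```

The check only looks at the format. Whether the pool exists and grants the required permissions still has to be verified in the AWS console.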

Interacting with Hosts

So far, the host neither looks at you when talking, nor does it move its hands to emphasize its speech. Let’s change that.

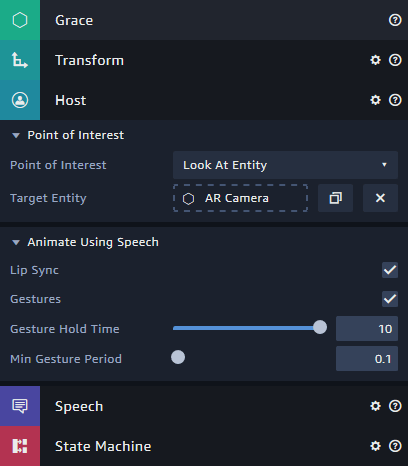

Make the Host Look at You

The host entity has some unique components in the inspector panel. When the user should interact with the host, it’s a good idea to set the “Point of Interest” to “Look at Entity”. Then drag the “AR Camera” from your entities panel to the “Target Entity” – from now on, the host will always look at the user.

Note: in case you’re using the web preview to test your scene, it’s often easier to use the “Default Camera”, as you can move this around in “Play” mode. Switch the active camera in the top right corner of the canvas.
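Sumerian handles the look-at behavior for you. To illustrate what happens conceptually, the sketch below computes the horizontal angle (yaw) that would turn a character towards a target. It assumes a Y-up coordinate system and a character that faces +Z at yaw 0; `yawTowards` is a hypothetical helper, not a Sumerian function.

```javascript
// Sketch: yaw angle that turns a character towards a target position.
// Assumes Y is up and the character faces +Z at yaw 0.
// yawTowards is a hypothetical helper, not a Sumerian function.
function yawTowards(fromPosition, targetPosition) {
  const dx = targetPosition.x - fromPosition.x;
  const dz = targetPosition.z - fromPosition.z;
  return Math.atan2(dx, dz); // radians; the height difference is ignored
}

const hostPos = { x: 0, y: 0, z: 0 };
const cameraPos = { x: 1, y: 1.6, z: 1 };
const yawDegrees = (yawTowards(hostPos, cameraPos) * 180) / Math.PI;
console.log(yawDegrees.toFixed(1));
```

When the camera moves, the angle has to be recomputed every frame, which is what the “Look at Entity” setting takes care of.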

Tell Your Host What To Say

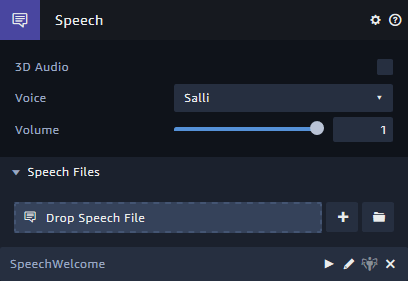

Another unique aspect of the host is its integrated “Speech” component. Here, you select the voice of the host. Amazon provides several different pre-defined female and male voices for you to choose from.

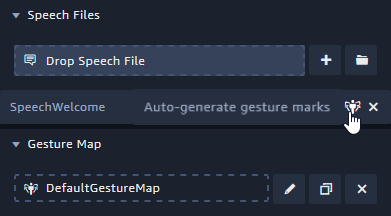

The simplest case is to just add a static speech file within the Sumerian editor. In the “Speech” component, click on the “+” button next to the “Drop Speech File” line.

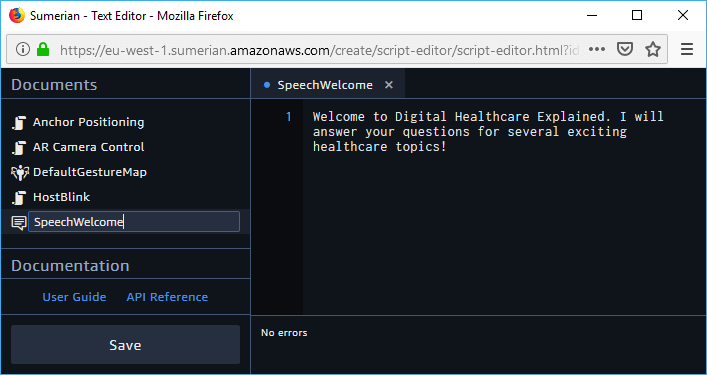

This creates a new speech text file and opens the editor. It’s good practice to always give meaningful names to your assets: click on the file name in the “Documents” section on the left and rename it – for example to “SpeechWelcome”. Then, enter the text to speak in the editor on the right. Click “Save” when you’re finished.

After you have edited the speech text, make sure it’s available as a speech file in the component. If it isn’t, drag and drop it from the “Assets” panel to the “Speech” component. This makes the speech available to the host, but the host doesn’t start speaking yet.

State Machine for Triggering Speech

To actually make your character speak, you need to add a state machine to the host. Within the “State Machine” component of the host, click the “+” button next to “Drop Behavior”. This opens the behavior editor in the bottom pane, below the 3D canvas.

As always, first give the behavior a descriptive name: change its name to “HostInteractions” in the details of the behavior. This will make it easier to differentiate multiple behaviors once your scene grows bigger.

For now, let’s configure the scene so that the host starts speaking as soon as the scene has finished loading. Click on the pre-defined “State 1” and rename it to “Start Welcome” in the inspector panel.

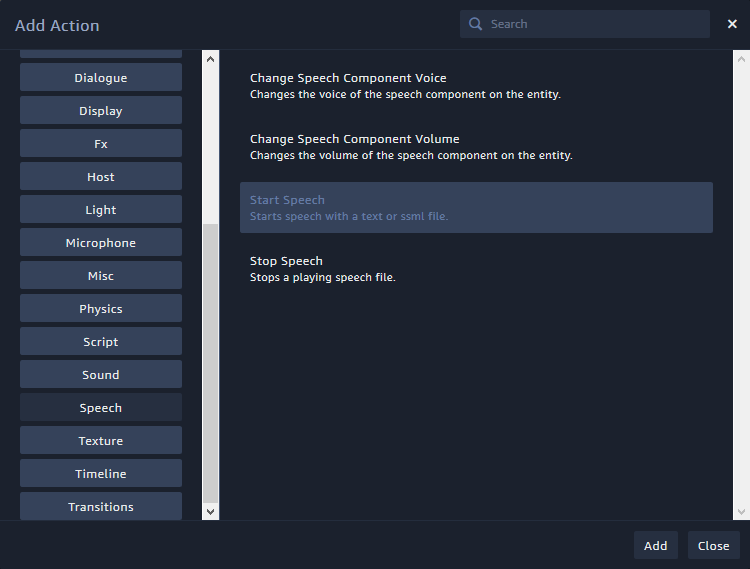

Next, click on “Add Action” and search for “Start Speech”. This adds additional configuration properties to the selected state.

In this area, click on “Select Speech” to choose your previously generated speech text file “SpeechWelcome”. By default, this state is the “Initial State”. Well, it’s the only state, so there is no other choice. This means that this state will be active immediately when your scene starts.

Host Gestures

With the current setup, the host would still just stand there and play its “idle” animation. This makes the host move slightly, like a normal person would when standing still.

Using the SSML language, gestures are triggered based on certain words in your speech file. For example, you could state that when your character speaks the word “me”, it should point at itself with its hands.

Sumerian supports auto-generating these gesture hints for your speech files, based on “Gesture Maps”. Switch back to the Host. In the “Speech” component, click on the “+” button of the “Gesture Map” section. This auto-generates a gesture map for many common words. You can adapt the “DefaultGestureMap” if you like; but in general, the default is fine to get started. Close the text editor again.

To generate the mark-up for the speech file, now click the “Auto-generate gesture marks” button in the inspector panel of the “Speech” component. Next, open the text file again and you will see the XML added to your speech text file.
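Conceptually, the generator looks up each word of your text in the gesture map and places a mark in front of every match. The following sketch imitates that idea in plain JavaScript. It is not Sumerian's implementation: the map entries, the function name and the exact `<mark name="gesture:..."/>` format are assumptions for illustration, so compare them with what the editor actually generates.

```javascript
// Sketch of gesture-mark generation (not Sumerian's actual implementation).
// The map entries and the <mark name="gesture:..."/> format are assumptions.
const gestureMap = new Map([
  ["me", "self"],
  ["you", "you"],
]);

function addGestureMarks(text, map) {
  // Visit every whole word; put a mark in front of words found in the map.
  return text.replace(/\w+/g, (word) => {
    const gesture = map.get(word.toLowerCase());
    return gesture ? `<mark name="gesture:${gesture}"/>${word}` : word;
  });
}

const plainText = "Hello, it is me. Nice to meet you!";
const marked = `<speak>${addGestureMarks(plainText, gestureMap)}</speak>`;
console.log(marked);
```

Because whole words are matched, “meet” does not trigger the gesture for “me”. The lookup is case-insensitive, so “You” at the start of a sentence would be marked as well.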

An important tag you’ll add manually in several places is a short break between sentences or words. This is done through:
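In standard SSML, a pause is written with the `<break>` element, whose `time` attribute takes a duration in seconds or milliseconds. If you assemble speech texts from several sentences, a small helper can insert the pauses for you. The sketch below is one way to do it; the function name, the sample sentences and the pause of 500 ms are illustrative assumptions.

```javascript
// Sketch: join sentences with an SSML pause between them.
// joinWithBreaks and the 500 ms default are illustrative assumptions.
function joinWithBreaks(sentences, pause = "500ms") {
  const separator = ` <break time="${pause}"/> `;
  return `<speak>${sentences.join(separator)}</speak>`;
}

const ssml = joinWithBreaks(["Welcome to the lab.", "Tap the brain to begin."]);
console.log(ssml);
```

Shorter pauses tend to work well between sentences that belong together, while a longer pause can signal a change of topic.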

Don’t forget to click “Save” after you have made any changes in the text editor!

Coming Up Next: Custom 3D Models & AR Anchors

Now, the host looks at you and speaks pre-defined sentences. In the next part of the article series, we’ll extend the scene. First, we will import a custom 3D model. Then, we’ll make sure the user can place both the host and the new model in the real world with one tap on the Augmented Reality screen.

Amazon Sumerian article series:

- Amazon Sumerian & Augmented Reality (Part 1)

- Speech & Gestures with Amazon Sumerian (Part 2)

- Custom 3D Models & AR Anchors in Amazon Sumerian (Part 3)

- User Interaction & Messages in Amazon Sumerian (Part 4)

- Animation & Timeline for AR with Amazon Sumerian (Part 5)

- Download, Export or Backup Amazon Sumerian Scenes (Part 6)