In the previous parts, we’ve taken a look behind the scenes and manually implemented a depth map with Python and OpenCV. Now, let’s compare the results to Unity’s AR Foundation.

How exactly do depth maps work in ARCore? While Google’s paper describes their approach in detail, their implementation is not open source.

However, Google has released a sample project along with a further paper called DepthLab. It accesses the ARCore Depth API directly and builds complete sample use cases on top of it.

DepthLab is available as an open-source Unity app. It uses the ARCore SDK for Unity directly and does not yet use the AR Foundation package.

Depth Maps with AR Foundation in Unity

However, Google recommends using AR Foundation together with its own ARCore Extensions module where needed (currently, the extensions only add Cloud Anchor support). Therefore, let’s take a closer look at how to create depth maps using AR Foundation.

Unity has a large AR Foundation Samples project on GitHub that demonstrates most of the available features. Keep in mind that some of them are not available on all platforms.

As depth maps on Android are a new feature, they’re only supported in AR Foundation 4.1 or later. For this, you should use at least Unity 2020.2.

Additionally, Unity versions before 2020 require a manual Gradle installation for compatibility with Android 11. Starting in August 2021, all apps submitted to the Google Play Store must set Target API Level 30 (Android 11).

Depth Images in the AR Foundation Sample

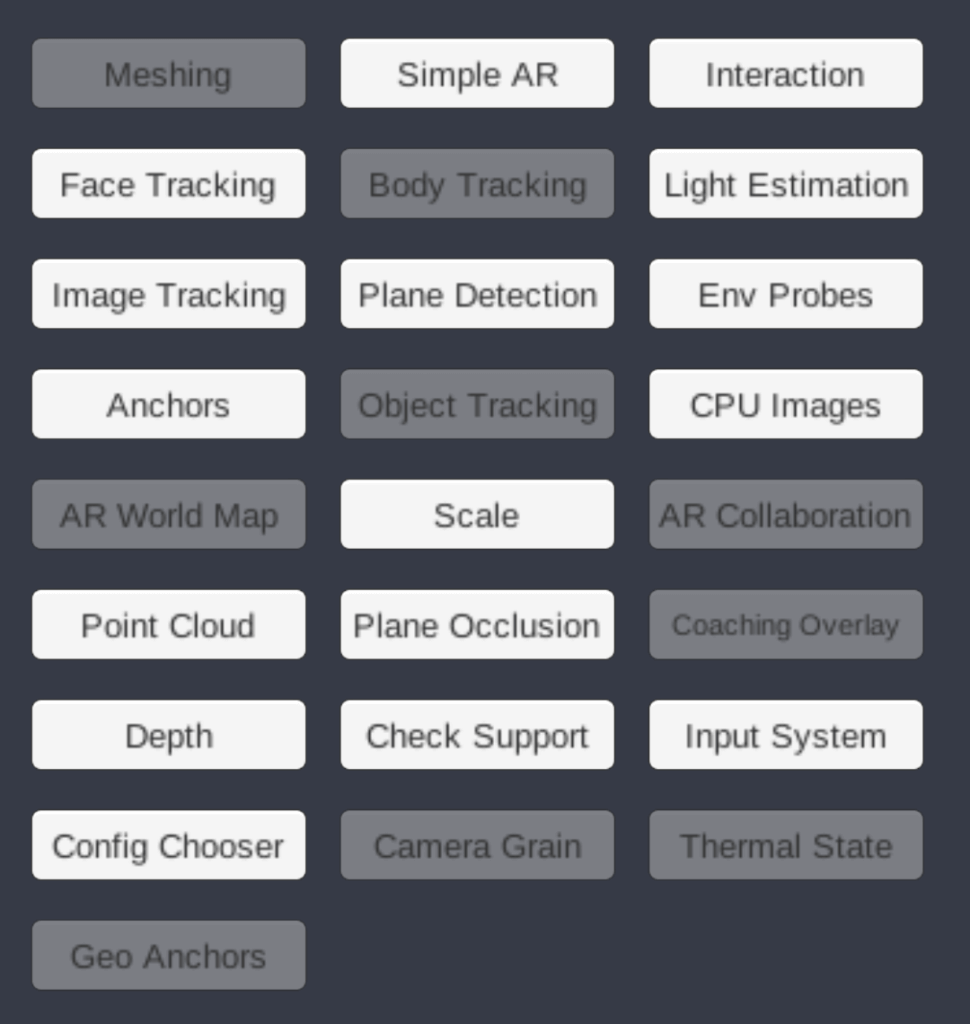

First, let’s see how ARCore, accessed through Unity’s AR Foundation, handles the same scene that we analyzed before with OpenCV. The screenshot above shows the demo categories available in Unity’s AR sample app.

The demo we’re looking for is in the “Depth” category. Tapping it, you’ll find an occlusion demo as well as one that shows a picture-in-picture depth map. This is what we need!

Depth Images Scene

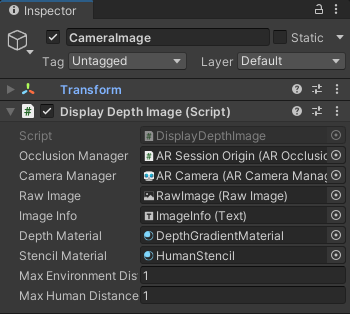

By default, the sample is configured to color-encode the depth map with a maximum distance of 8 meters. For our small scene, this wouldn’t give us enough visual fidelity to distinguish the small Lego cars. Therefore, open the Depth Images scene in the Unity editor, select the CameraImage GameObject and change its Max Environment Distance property to 1 (the setting is in meters).
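To see why the 8 m default hides detail at this scale, here is a small arithmetic sketch in Python. It assumes that the color gradient is spread linearly between a minimum and a maximum distance; the function name `gradient_share` is hypothetical and not part of the sample.

```python
def gradient_share(depth_step_m, min_distance_m, max_distance_m):
    """Fraction of the full color gradient spanned by a depth difference.

    Assumes depth is normalized linearly between min and max distance.
    """
    if max_distance_m <= min_distance_m:
        raise ValueError("max_distance_m must be greater than min_distance_m")
    return depth_step_m / (max_distance_m - min_distance_m)


if __name__ == "__main__":
    # Two objects 5 cm apart in depth, with the default and the reduced range.
    for max_distance in (8.0, 1.0):
        share = gradient_share(0.05, 0.0, max_distance)
        print(f"Max distance {max_distance} m: {share:.3%} of the gradient")
```

With an 8 m range, a 5 cm depth difference covers only 0.625% of the gradient; with a 1 m range, it covers 5%, eight times as much.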

Internally, we get the depth map in Unity’s RFloat texture format, which stores a single 32-bit floating-point value per pixel. The sample uses a shader (DepthGradient.shader) that converts the floating-point depth to an RGB color via the HSV color model. For this, the shader has two settings: _MinDistance and _MaxDistance.

The default for the minimum distance is 0, and the sample doesn’t make it configurable from the Display Depth Image C# script (this would be easy to add, though). The maximum distance, however, can easily be changed without touching the code!
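The general idea of such a conversion (normalize the depth between a minimum and maximum distance, clamp it, and use the result as the hue of an HSV color) can be sketched in Python with the standard library’s `colorsys` module. This is a rough illustration, not the sample’s shader: the helper `depth_to_rgb` is hypothetical, and the hue range from red (near) to blue (far) is an assumed choice that may differ from the gradient DepthGradient.shader uses.

```python
import colorsys


def depth_to_rgb(depth_m, min_distance=0.0, max_distance=1.0):
    """Map a depth value in meters to an (r, g, b) tuple with components in [0, 1].

    Hypothetical helper: the hue range (0.0 = red for near, 0.7 = blue for far)
    is an assumption, not necessarily the gradient used by DepthGradient.shader.
    """
    if max_distance <= min_distance:
        raise ValueError("max_distance must be greater than min_distance")
    # Normalize into [0, 1] and clamp, similar to HLSL's saturate().
    t = (depth_m - min_distance) / (max_distance - min_distance)
    t = min(max(t, 0.0), 1.0)
    hue = 0.7 * t
    # Full saturation and value; only the hue encodes depth.
    return colorsys.hsv_to_rgb(hue, 1.0, 1.0)


if __name__ == "__main__":
    for depth in (0.0, 0.5, 1.0, 3.0):
        print(depth, depth_to_rgb(depth, max_distance=1.0))
```

Anything beyond the maximum distance is clamped to the far color, which is why lowering the maximum to 1 m spends the whole gradient on the small scene.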

Here is the relevant code snippet from the shader that converts the 32-bit float to RGB (note that the code is released under the Unity Companion License):

AR Foundation Depth Map: Video

Let’s check how the depth map performs! As you can see, the generated depth map is shown in the upper left corner.


Compared to our OpenCV example from part 3, the resulting depth map is a lot smoother and follows the real-world contours more closely. This is thanks to the post-processing and filtering that ARCore applies to the depth map.
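ARCore’s actual post-processing isn’t public, so the following is only an illustration of why filtering smooths a depth map: a plain 3x3 median filter in Python, a simple and common technique for suppressing isolated outliers. It is a stand-in chosen for this example, not what ARCore does, and the function name is hypothetical.

```python
def median_filter_3x3(depth):
    """Return a filtered copy of a non-empty 2D depth map (list of lists, meters).

    Each interior pixel is replaced by the median of its 3x3 neighborhood.
    Border pixels are copied unchanged to keep the example short.
    """
    rows, cols = len(depth), len(depth[0])
    out = [row[:] for row in depth]
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            window = sorted(
                depth[y + dy][x + dx]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            )
            out[y][x] = window[4]  # middle of the 9 sorted values
    return out


if __name__ == "__main__":
    # A flat surface 0.5 m away with a single wrong depth estimate in the middle.
    noisy = [[0.5] * 5 for _ in range(5)]
    noisy[2][2] = 3.0
    for row in median_filter_3x3(noisy):
        print(row)
```

The isolated 3.0 m outlier disappears because it never ends up in the middle of a sorted neighborhood, while real depth edges that span several pixels are largely preserved.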

Additionally, only a slight camera movement is needed before the depth map is generated. This is great, as you don’t have to instruct users to move the phone a lot if you’d like to use depth in your AR app.

Of course, the algorithm generally suffers from problems similar to those of our OpenCV demo. The ground (the green Lego Duplo base plate) still has a very repetitive pattern, which makes it more difficult to establish depth. If you look closely, you’ll notice that the algorithm sometimes briefly misjudges the depth of the floor.
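The effect of a repetitive texture can be reproduced with a deliberately simplified sum-of-absolute-differences (SAD) block matcher along one rectified scanline. This is an assumed toy setup in Python, not ARCore’s algorithm: the row contains a pattern that repeats every 4 pixels, the true disparity is 1, and the function name is hypothetical.

```python
def sad_costs(left_row, right_row, x, block=3, max_disparity=8):
    """Return {disparity: SAD cost} for the block centered at x in the left row.

    For rectified images, a point at column x in the left row is assumed to
    appear at column x - d in the right row.
    """
    half = block // 2
    ref = left_row[x - half : x + half + 1]
    costs = {}
    for d in range(max_disparity + 1):
        start = x - d - half
        if start < 0:
            break  # candidate block would leave the image
        candidate = right_row[start : start + block]
        costs[d] = sum(abs(a - b) for a, b in zip(ref, candidate))
    return costs


if __name__ == "__main__":
    # A texture that repeats every 4 pixels, like the studs of a base plate.
    base = [10, 50, 90, 50] * 6
    left_row = base[0:20]
    right_row = base[1:21]  # shifted so that the true disparity is 1
    costs = sad_costs(left_row, right_row, x=10)
    best = min(costs.values())
    print("Disparities with the lowest cost:",
          [d for d, c in costs.items() if c == best])
```

Both d = 1 and d = 5 match perfectly, so the local texture alone cannot tell the matcher which depth is correct; it needs additional cues, such as smoothness constraints across neighboring pixels, to pick the right one.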

In the final part, let’s create our own project to visualize depth maps using C#!

Article Series

- Easily Create Depth Maps with Smartphone AR (Part 1)

- Understand and Apply Stereo Rectification for Depth Maps (Part 2)

- How to Apply Stereo Matching to Generate Depth Maps (Part 3)

- Compare AR Foundation Depth Maps (Part 4)

- Visualize AR Depth Maps in Unity (Part 5)