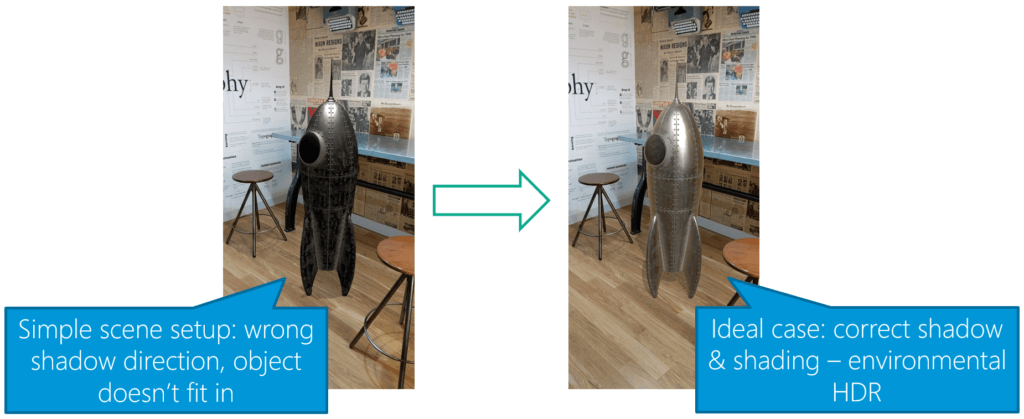

How to make real-time HDR lighting and reflections possible on a smartphone? Based on the unique properties of human perception and the challenges of capturing the world’s state and applying it to virtual objects. Is it still possible?

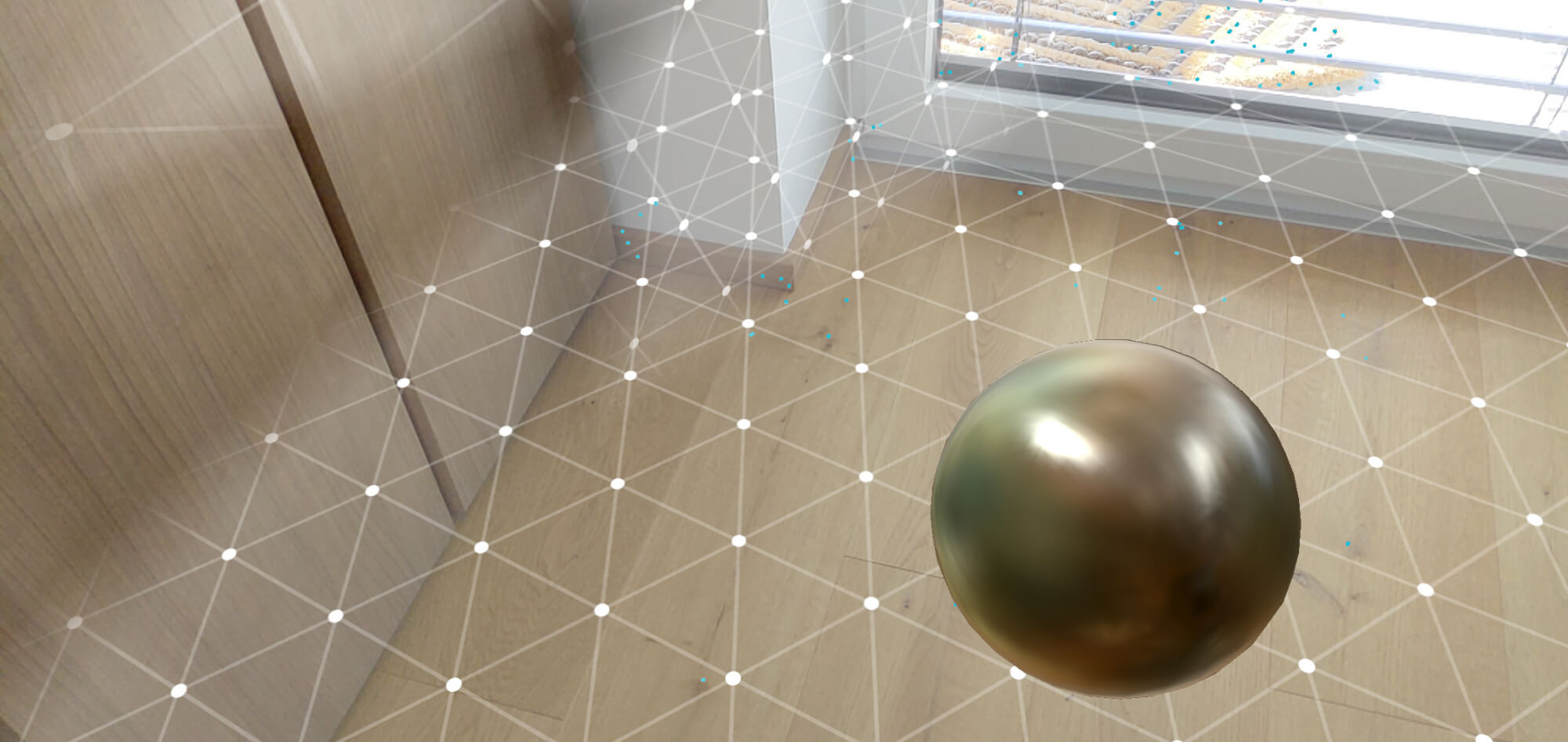

Google found an interesting approach, which is based on using Artificial Intelligence to fill the missing gaps. In this article, we’ll take a look at how ARCore handles this. The practical implementation of this research is available in the ARCore SDK for Unity. Based on this, a short hands-on guide demonstrates how to create a sphere that reflects the real world – even though the smartphone only captures a fraction of it.

Google ARCore Approach to Environmental HDR Lighting

To still make environmental HDR lighting possible in real-time on smartphones, Google uses an innovative approach, which they also published as a scientific paper . Here, I’ll give you a short, high-level overview of their approach:

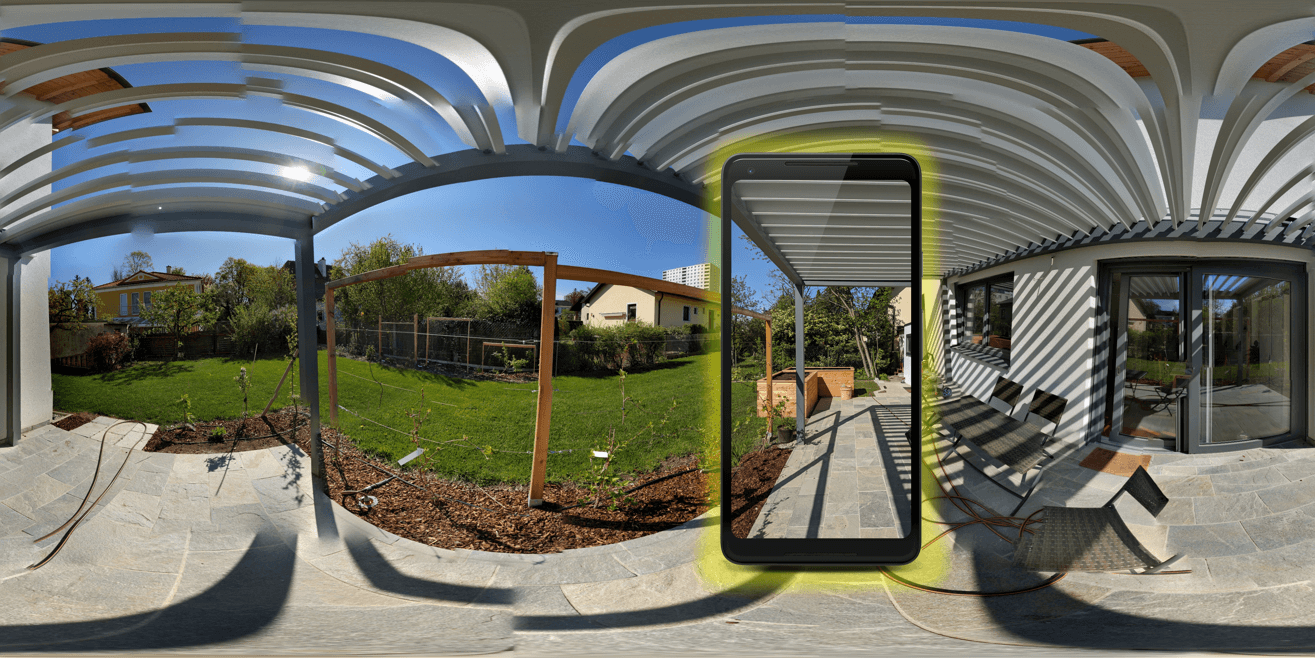

First, Google captured a massive amount of training data. The video feed of the smartphone camera captured both the environment, as well as three different spheres. The setup is shown in the image below.

You must be logged in to post a comment.