In the first part, we took a look at how an algorithm identifies keypoints in camera frames. These form the basis for tracking and recognizing the environment.

For Augmented Reality, the device has to know more: its 3D position in the world. It calculates this from the spatial relationships between itself and multiple keypoints. This process is called “Simultaneous Localization and Mapping” – SLAM for short.

Sensors for Perceiving the World

The high-level view: when you first start an AR app using Google ARCore, Apple ARKit or Microsoft Mixed Reality, the system doesn’t know much about the environment. It starts processing data from various sources – mostly the camera. To improve accuracy, the device combines data from other useful sensors like the accelerometer and the gyroscope.

Based on this data, the algorithm has two aims:

- Build a map of the environment

- Locate the device within that environment

For some scenarios, this is easy. If you are free to place beacons at known locations, you simply measure the distance to each of them and compute your position from those distances (trilateration). Then you know exactly where you are.
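With beacons at known positions, working out your own position from the measured distances is a small geometry problem. Here is a minimal sketch, assuming a flat 2D world and noise-free distances (the function name `trilaterate` is hypothetical). Subtracting the first circle equation from the others cancels the squared unknowns and leaves a linear system, which is solved by least squares so that more than three beacons can be used.

```python
import math

def trilaterate(beacons, distances):
    """Estimate a 2D position from beacon positions and measured distances.

    Subtracting the first circle equation (x - xi)^2 + (y - yi)^2 = di^2 from the
    others cancels the squared unknowns, leaving a linear system A @ (x, y) = b
    that is solved in the least-squares sense via its 2x2 normal equations.
    """
    if len(beacons) < 3 or len(beacons) != len(distances):
        raise ValueError("need at least three beacons, each with one distance")
    (x0, y0), d0 = beacons[0], distances[0]
    a11 = a12 = a22 = b1 = b2 = 0.0  # entries of A^T A and A^T b
    for (xi, yi), di in zip(beacons[1:], distances[1:]):
        ax, ay = 2.0 * (xi - x0), 2.0 * (yi - y0)
        rhs = d0**2 - di**2 + xi**2 - x0**2 + yi**2 - y0**2
        a11 += ax * ax
        a12 += ax * ay
        a22 += ay * ay
        b1 += ax * rhs
        b2 += ay * rhs
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("beacons are collinear, so the position is ambiguous")
    return (a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det

beacons = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
distances = [math.dist(b, (3.0, 4.0)) for b in beacons]  # simulated, noise-free
print(trilaterate(beacons, distances))  # approximately (3.0, 4.0)
```

With real, noisy distances, additional beacons improve the estimate because the least-squares solution averages out part of the error.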

For other applications, GPS might be good enough.

However, mobile Augmented Reality usually doesn’t have the luxury of known beacons. Nor is GPS accurate enough, especially indoors.

Uncertain Spatial Relationships

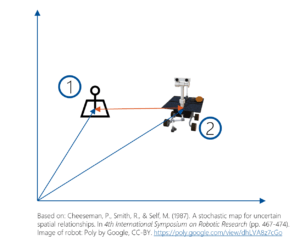

Let’s start with the basics, as described by Cheeseman et al. in “A stochastic map for uncertain spatial relationships” (1987).

In a perfect world, you’d have perfect information about the exact location of everything. This includes the location of a beacon (1), as well as the location of a robot (2).

As a result, you can calculate the exact relationship between the beacon (1) and your own location (2). If you need to move the robot to (3), you can infer exactly where and how you need to move.
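With perfect knowledge, this relationship is plain geometry. A minimal sketch for a robot moving in 2D, assuming a pose is given as (x, y, heading in radians); the helper names are hypothetical. `world_to_robot` expresses the beacon in the robot’s own frame, and `motion_to` returns how far to turn and drive to reach a target such as (3).

```python
import math

def world_to_robot(pose, point):
    """Express a world-frame point in the robot frame (x forward, y to the left)."""
    x, y, heading = pose
    dx, dy = point[0] - x, point[1] - y
    c, s = math.cos(heading), math.sin(heading)
    # Rotate the world-frame offset by -heading
    return (c * dx + s * dy, -s * dx + c * dy)

def motion_to(pose, target):
    """Turn angle (radians, in [-pi, pi]) and straight-line distance to reach target."""
    local_x, local_y = world_to_robot(pose, target)
    return math.atan2(local_y, local_x), math.hypot(local_x, local_y)

robot = (1.0, 1.0, math.pi / 2)       # robot (2), facing along +y
beacon = (4.0, 5.0)                   # beacon (1)
print(world_to_robot(robot, beacon))  # about (4.0, -3.0): 4 m ahead, 3 m to the right
print(motion_to(robot, (1.0, 4.0)))   # target (3): practically no turn, drive 3 m
```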

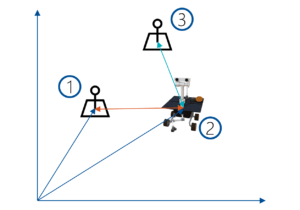

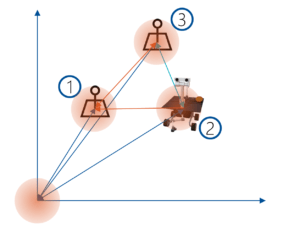

Unfortunately, in the real-life SLAM scenario, you must work with imperfect knowledge. This results in uncertainties.

The points have uncertain spatial relationships to each other. As a result, instead of exact positions you get a probability distribution of where each point could be. Some points are known with high precision; for others, the uncertainty might be large. Frequently used algorithms to estimate positions under such uncertainty are the Extended Kalman Filter (EKF), Maximum a Posteriori (MAP) estimation and Bundle Adjustment (BA).
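The simplest case of reasoning under uncertainty is combining two independent estimates of the same 1D position, each modelled as a Gaussian with a mean and a variance. For Gaussians, both the Kalman filter measurement update and the MAP estimate reduce to an inverse-variance weighted average. A minimal sketch; the function name and the example numbers are invented for illustration.

```python
def fuse(mean_a, var_a, mean_b, var_b):
    """Combine two independent Gaussian estimates of the same scalar quantity.

    Each estimate is weighted by its inverse variance (its information), so the
    more certain one dominates; the fused variance is smaller than either input.
    """
    info_a, info_b = 1.0 / var_a, 1.0 / var_b
    var = 1.0 / (info_a + info_b)
    mean = var * (info_a * mean_a + info_b * mean_b)
    return mean, var

# Odometry suggests 2.0 m (variance 0.4), the camera suggests 2.4 m (variance 0.1).
mean, var = fuse(2.0, 0.4, 2.4, 0.1)
print(round(mean, 3), round(var, 3))  # 2.32 0.08
```

The fused estimate lands closer to the more precise camera measurement, and its variance (0.08) is lower than that of either input.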

Because of the relationships between the points, every new sensor update influences all positions and updates the whole map. Keeping everything up to date requires a significant amount of math.
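Why does a single update touch the whole map? Estimates derived from the same uncertain robot pose are correlated, and the covariance matrix carries that correlation. A minimal linear Kalman filter sketch in a hypothetical 1D world with one robot and two landmarks (all numbers invented): when one landmark is pinned down by a precise measurement, the robot and the other landmark move as well, and their uncertainty shrinks.

```python
def kalman_update(x, P, h, z, r):
    """Linear Kalman filter update for a scalar measurement z = h . x + noise (variance r).

    x: state mean (list), P: covariance (list of lists), h: measurement row (list).
    Returns the updated (x, P) without modifying the inputs.
    """
    n = len(x)
    # P h^T: how each state variable co-varies with the measured quantity
    ph = [sum(P[i][j] * h[j] for j in range(n)) for i in range(n)]
    s = sum(h[i] * ph[i] for i in range(n)) + r  # innovation variance
    k = [v / s for v in ph]  # Kalman gain
    innovation = z - sum(h[i] * x[i] for i in range(n))
    x_new = [x[i] + k[i] * innovation for i in range(n)]
    # P_new = P - K (h P); since P is symmetric, h P equals (P h^T)^T
    P_new = [[P[i][j] - k[i] * ph[j] for j in range(n)] for i in range(n)]
    return x_new, P_new

# State: [robot, landmark_1, landmark_2]. The robot's start position has variance 1.0;
# both landmarks were placed relative to it (measurement variance 0.01 each),
# so they inherit its uncertainty and are strongly correlated.
x = [0.0, 5.0, 8.0]
P = [[1.0, 1.0, 1.0],
     [1.0, 1.01, 1.0],
     [1.0, 1.0, 1.01]]

# Later, landmark_1 turns out to be a marker with a surveyed position of 5.5.
x, P = kalman_update(x, P, h=[0.0, 1.0, 0.0], z=5.5, r=0.01)
print([round(v, 3) for v in x])  # [0.49, 5.495, 8.49]
```

Although only landmark_1 was measured, landmark_2 shifted by almost the same amount and its variance dropped from 1.01 to about 0.03, because most of its uncertainty came from the shared robot pose.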

Aligning the Perceived World

To make Augmented Reality reliable, aligning new measurements with earlier knowledge is one of the most important aspects of SLAM algorithms. Every sensor measurement contains inaccuracies, whether it is derived from camera images or from frame-to-frame motion estimates based on accelerometers (odometry).

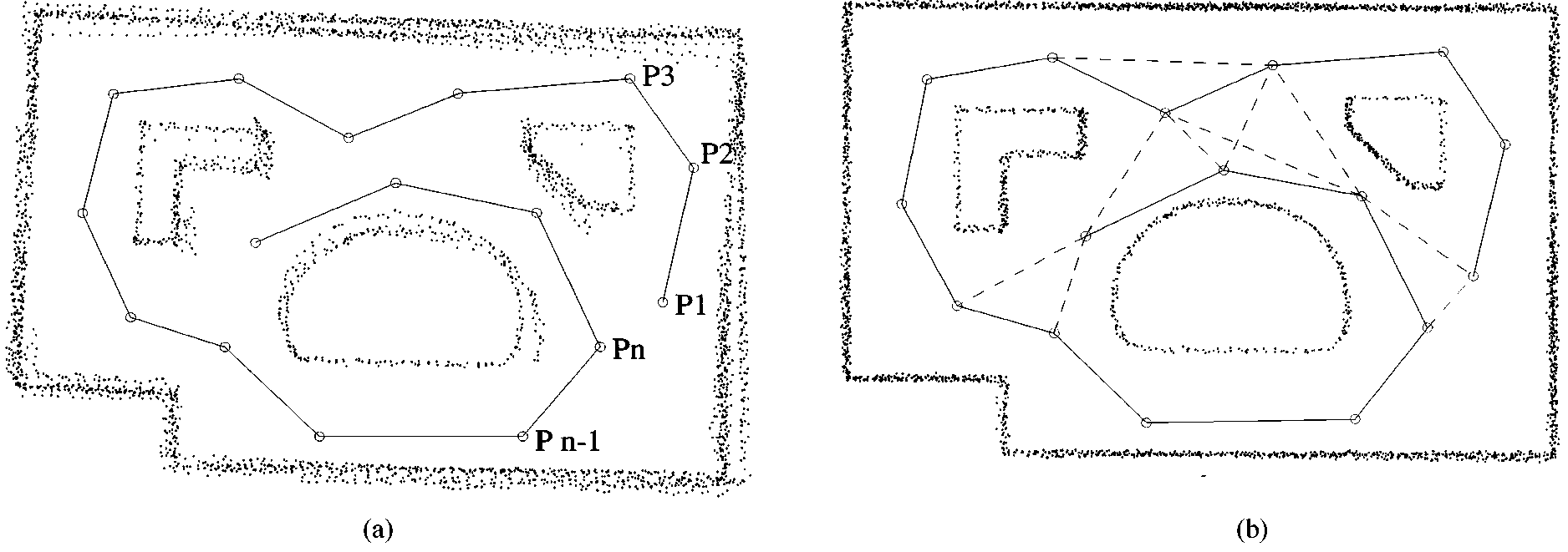

Image credits: Lu, F., & Milios, E. (1997). Globally consistent range scan alignment for environment mapping. Autonomous robots, 4(4), 333-349.

In “Globally consistent range scan alignment for environment mapping” (1997), Lu and Milios describe the basics of the issue. In the figure above, (a) shows how range scan errors accumulate: moving from position P1 to Pn, each small measurement error adds to the previous ones until the resulting environment map is no longer consistent.
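A few lines of dead reckoning show why small errors are so harmful. This sketch assumes a purely systematic heading error of 0.5° per step (real sensors also add random noise) and a hypothetical square test path of 40 one-metre steps. Each pose is computed from the previous one, so the error is never corrected and keeps growing.

```python
import math

def dead_reckon(steps, heading_bias=0.0):
    """Integrate (distance, turn) odometry steps into a 2D trajectory.

    heading_bias (radians per step) models a small systematic sensor error.
    Because every pose builds on the previous estimate, the error never averages out.
    """
    x = y = heading = 0.0
    path = [(x, y)]
    for distance, turn in steps:
        x += distance * math.cos(heading)
        y += distance * math.sin(heading)
        heading += turn + heading_bias  # the bias sneaks into every heading update
        path.append((x, y))
    return path

# A square loop: 4 sides of 10 one-metre steps, turning 90 degrees after each side.
square = []
for _ in range(4):
    square += [(1.0, 0.0)] * 9 + [(1.0, math.pi / 2)]

truth = dead_reckon(square)
drifted = dead_reckon(square, heading_bias=math.radians(0.5))
errors = [math.dist(a, b) for a, b in zip(truth, drifted)]
print(f"error after 10 steps: {errors[10]:.2f} m, after 40 steps: {errors[40]:.2f} m")
```

The true path returns to its starting point, while the drifted estimate ends up meters away, even though each individual step was only slightly wrong.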

In (b), the scans are aligned based on a network of relative pose constraints, which considerably improves the result. To do this, the algorithm maintains all local frames of data, as well as a network of spatial relations among them.
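The core idea of (b), treating poses as nodes and relative measurements as weighted constraints that are solved jointly, fits into a small least-squares problem. This is a 1D toy version under stated assumptions (a robot driving along a corridor and back, invented odometry values, pose 0 fixed at the origin), not Lu and Milios’s actual 2D scan-matching algorithm; the function name is hypothetical.

```python
def optimize_pose_graph_1d(num_poses, edges):
    """Solve a 1D pose graph by linear least squares.

    edges: list of (i, j, z, w), each meaning "x_j - x_i should equal z" with
    weight w (the inverse variance of that measurement). Minimizes
    sum(w * (x_j - x_i - z)**2) with pose 0 fixed at 0.0 (removes the free offset).
    """
    # Normal equations H x = b of the weighted least-squares problem
    H = [[0.0] * num_poses for _ in range(num_poses)]
    b = [0.0] * num_poses
    for i, j, z, w in edges:
        H[i][i] += w
        H[j][j] += w
        H[i][j] -= w
        H[j][i] -= w
        b[i] -= w * z
        b[j] += w * z
    # Pose 0 is fixed at 0.0, so drop its row and column; build the augmented matrix [H | b].
    A = [H[r][1:] + [b[r]] for r in range(1, num_poses)]
    m = num_poses - 1
    # Gaussian elimination with partial pivoting
    for col in range(m):
        pivot = max(range(col, m), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(col + 1, m):
            factor = A[r][col] / A[col][col]
            for c in range(col, m + 1):
                A[r][c] -= factor * A[col][c]
    # Back substitution
    x = [0.0] * m
    for r in range(m - 1, -1, -1):
        x[r] = (A[r][m] - sum(A[r][c] * x[c] for c in range(r + 1, m))) / A[r][r]
    return [0.0] + x

# Corridor run: 4 steps forward, 4 steps back, each truly 1 m long.
# The (invented) odometry over-reports forward steps and under-reports backward ones.
odometry = [1.1] * 4 + [-0.9] * 4
edges = [(k, k + 1, d, 1.0) for k, d in enumerate(odometry)]
print(round(sum(odometry), 3))  # dead reckoning ends 0.8 m away from the start
# Loop closure: pose 8 is recognized as the start, trusted 100x more than one odometry step.
edges.append((0, 8, 0.0, 100.0))
poses = optimize_pose_graph_1d(9, edges)
print([round(p, 2) for p in poses])  # close to the true positions 0, 1, 2, 3, 4, 3, 2, 1, 0
```

Because all constraints are solved together, the 0.8 m discrepancy is spread across every odometry step instead of being dumped on the last pose.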

One of the main questions is: how much history should be kept? If every new measurement were compared to the entire history, complexity would grow to unmanageable levels the longer the application runs. Algorithms therefore often reduce the history to keyframes, and/or further refine the strategy with a “survival of the fittest” mechanism that later deletes data that turned out to be less useful than hoped (as done in ORB-SLAM).
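The ORB-SLAM paper describes one such criterion for keyframes: a keyframe is discarded when 90% of its map points have also been seen in at least three other keyframes. Below is a simplified sketch of that rule, assuming keyframes are plain dictionaries of map point IDs and ignoring the additional scale condition the paper uses. The function and parameter names, including the `protected` set (e.g. for the first keyframe), are hypothetical.

```python
from collections import Counter

def cull_keyframes(observations, ratio=0.9, min_other_views=3, protected=frozenset()):
    """Greedily drop redundant keyframes.

    observations: dict mapping keyframe ID -> set of map point IDs seen in it.
    A keyframe is redundant if at least `ratio` of its map points are also seen
    by `min_other_views` or more other keyframes that are still kept.
    Returns a new dict containing only the surviving keyframes.
    """
    kept = {kf: set(points) for kf, points in observations.items()}
    views = Counter(p for points in kept.values() for p in points)
    for kf in sorted(kept):  # sorted() returns a snapshot, so deleting below is safe
        points = kept[kf]
        if kf in protected or not points:
            continue
        covered = sum(1 for p in points if views[p] - 1 >= min_other_views)
        if covered >= ratio * len(points):
            views.subtract(points)  # the removed keyframe no longer counts as a view
            del kept[kf]
    return kept

# Five keyframes see the same 10 points; keyframe 5 also sees 5 points nobody else has.
observations = {kf: set(range(10)) for kf in range(5)}
observations[5] = set(range(5, 15))
survivors = cull_keyframes(observations, protected={0})
print(sorted(survivors))  # [0, 3, 4, 5]
```

Updating the view counts after each removal matters: otherwise all redundant-looking keyframes could be deleted at once, leaving some map points with too few observations.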

SLAM – Simultaneous Localization and Mapping

To make Augmented Reality work, the SLAM algorithm has to solve the following challenges:

- Unknown space.

- Uncontrolled camera. For current mobile phone-based AR, this is usually only a monocular camera.

- Real-time.

- Drift-free.

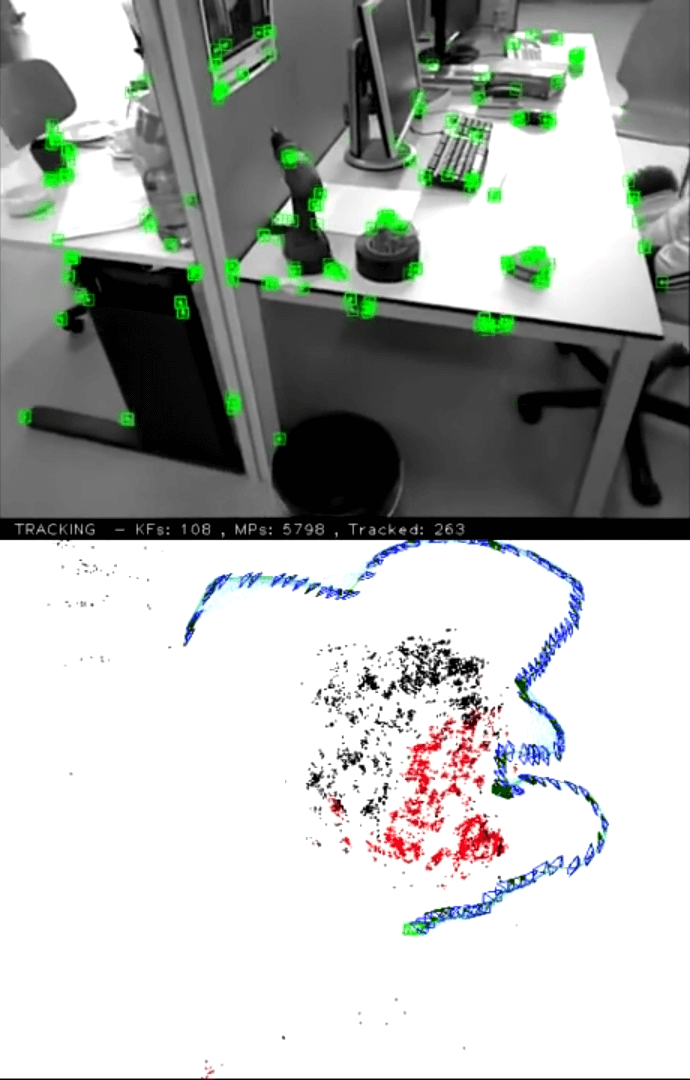

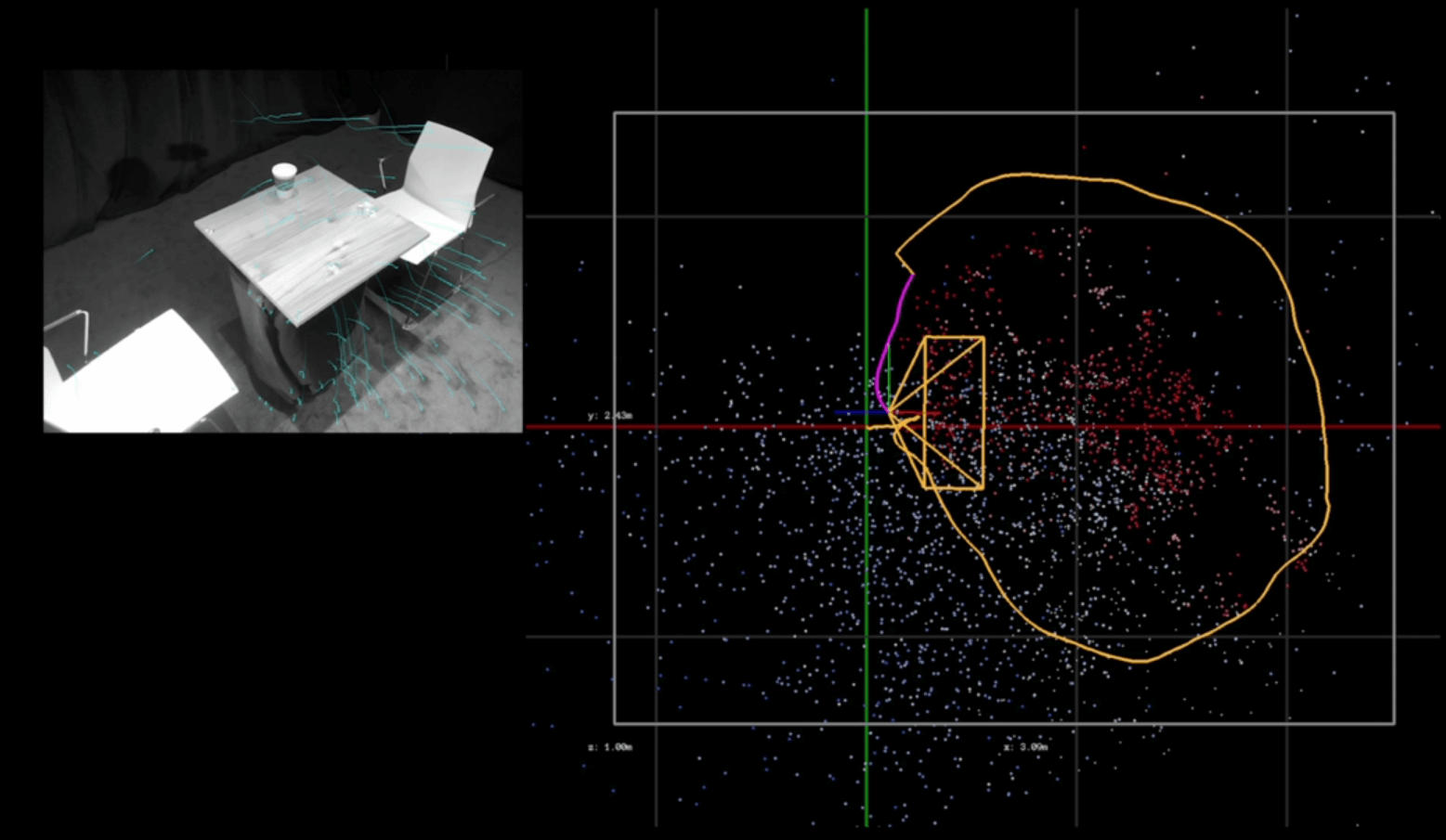

This image from the MonoSLAM algorithm by Davison et al. (2007) shows what you want to achieve: tracked feature points, their relations in space, and the inferred camera position.

Image credits: Davison, A. J., Reid, I. D., Molton, N. D., & Stasse, O. (2007). MonoSLAM: Real-time single camera SLAM. IEEE Transactions on Pattern Analysis & Machine Intelligence, (6), 1052-1067.

Anatomy of SLAM

How can this be applied and solved in an Augmented Reality scenario?

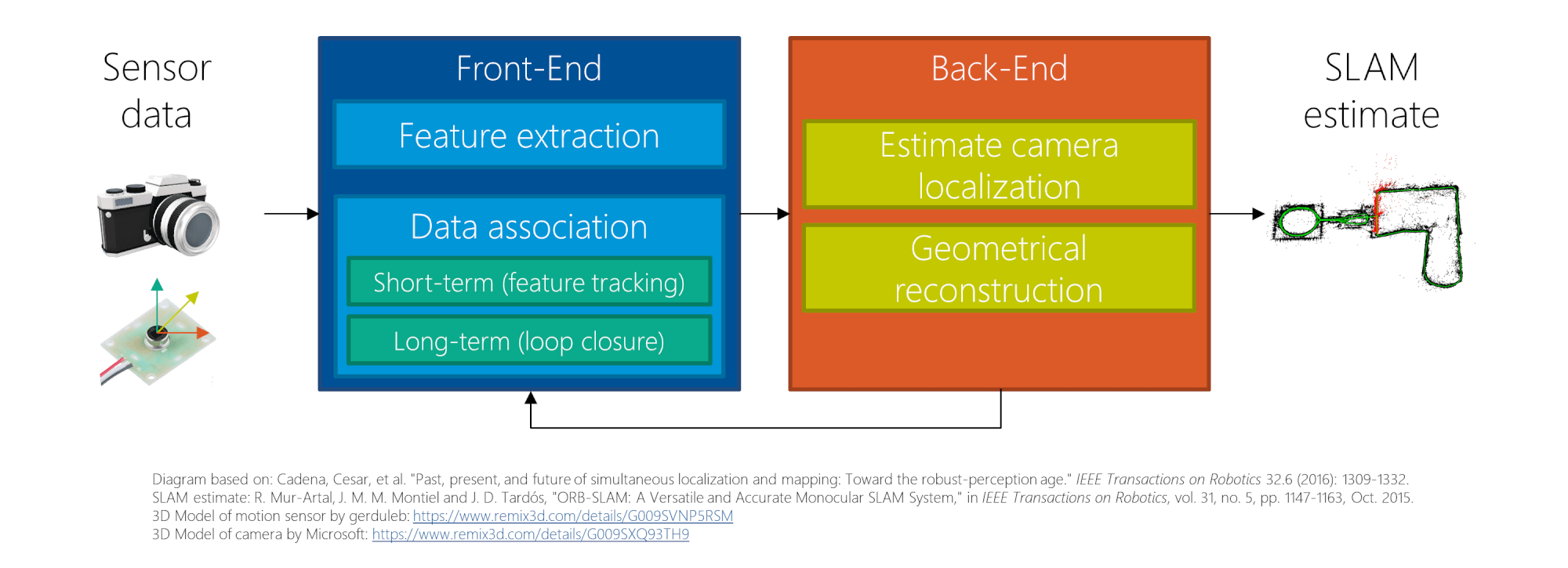

A good starting point for understanding SLAM principles is “Past, Present, and Future of Simultaneous Localization and Mapping: Towards the Robust-Perception Age” (2016) by Cadena et al. They describe the typical architecture of SLAM as follows:

The system is made up of 4 parts:

- Sensor data: on mobile devices, this usually includes the camera, accelerometer and gyroscope. It might be augmented by other sensors like GPS, light sensors, depth sensors, etc.

- Front-End: the first step is feature extraction, as described in part 1. These features also need to be associated with landmarks – keypoints with a 3D position, also called map points. In addition, map points need to be tracked in a video stream. Long-term association reduces drift by recognizing places that have been encountered before (loop closure).

- Back-End: takes care of establishing the relationship between different frames, localizing the camera (pose model), as well as handling the overall geometrical reconstruction. Some algorithms create a sparse reconstruction (based on the keypoints). Others try to capture a dense 3D point cloud of the environment.

- SLAM estimate: the result containing the tracked features, their locations & relations, as well as the camera position within the world.
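To make the data flow between these four parts concrete, here is a deliberately tiny sketch in a 1D world. Everything in it is an assumption for illustration: descriptors are exact string labels, the front-end associates them by lookup, and the back-end averages instead of running a real optimizer. All class and function names are hypothetical. It only shows how sensor data passes through front-end and back-end to produce a SLAM estimate.

```python
from dataclasses import dataclass, field

@dataclass
class SlamState:
    camera: float = 0.0                              # estimated camera position (1D)
    landmarks: dict = field(default_factory=dict)    # landmark ID -> position
    descriptors: dict = field(default_factory=dict)  # descriptor -> landmark ID
    last_seen: dict = field(default_factory=dict)    # landmark ID -> frame index

def front_end(state, frame_index, detections, revisit_gap=10):
    """Associate (descriptor, offset) detections with landmark IDs.

    Also returns landmarks that reappear after at least `revisit_gap` frames,
    a crude stand-in for long-term association (loop-closure candidates).
    """
    associations, revisited = [], []
    for descriptor, offset in detections:
        # Unknown descriptors get the next free landmark ID
        landmark_id = state.descriptors.setdefault(descriptor, len(state.descriptors))
        last = state.last_seen.get(landmark_id)
        if last is not None and frame_index - last >= revisit_gap:
            revisited.append(landmark_id)
        state.last_seen[landmark_id] = frame_index
        associations.append((landmark_id, offset))
    return associations, revisited

def back_end(state, associations):
    """Localize the camera from known landmarks, then map newly seen ones."""
    known = [(state.landmarks[i], off) for i, off in associations if i in state.landmarks]
    if known:
        # Each known landmark "votes" for a camera position; average the votes
        state.camera = sum(pos - off for pos, off in known) / len(known)
    for landmark_id, offset in associations:
        state.landmarks.setdefault(landmark_id, state.camera + offset)
    return state.camera, dict(state.landmarks)  # the SLAM estimate

state = SlamState()
# Frame 0: the camera at the origin sees features "a" and "b" (hypothetical sensor data).
associations, _ = front_end(state, 0, [("a", 2.0), ("b", 5.0)])
back_end(state, associations)
# Frame 1: the camera moved; "a" now appears 1.0 ahead and a new feature "c" shows up.
associations, _ = front_end(state, 1, [("a", 1.0), ("c", 3.0)])
camera, landmarks = back_end(state, associations)
print(camera, landmarks)  # 1.0 {0: 2.0, 1: 5.0, 2: 4.0}
```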

Let’s take a closer look at a concrete SLAM implementation: ORB-SLAM.

Example: ORB-SLAM Algorithm

A recent and well-performing algorithm is ORB-SLAM by Mur-Artal, Montiel and Tardós. Its successor ORB-SLAM2 adds support for stereo and depth cameras in addition to monocular ones. What’s especially great is that the algorithms are available as open source under the GPLv3 license. Jeroen Zijllmas described how to run ORB-SLAM on your own computer in a blog post, so I won’t go into details here.


ORB-SLAM is a purely visual algorithm, so it doesn’t use odometry from accelerometers and gyroscopes. Considering that it relies on the camera alone, its results are impressive.

Feature Choice

As described in part 1, many algorithms are designed to find keypoints and generate descriptors. The traditional SIFT and SURF algorithms are commonly used as a reference, but are generally too slow for real-time use.

As the name implies, the ORB-SLAM algorithm relies on the ORB (Oriented FAST and Rotated BRIEF) feature detector and descriptor instead. ORB builds on methods for finding keypoints and generating binary descriptors that are similar to those of the BRISK algorithm from part 1, so I won’t go into detail.
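Because ORB, like BRISK, produces binary descriptors, two keypoints are compared by counting the bits in which their descriptors differ (the Hamming distance), which is much cheaper than the floating-point distances SIFT and SURF require. A brute-force matching sketch, assuming 256-bit descriptors stored as Python integers; the function names, the distance threshold and the ratio-test value are illustrative choices, not ORB-SLAM’s settings.

```python
import random

def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")

def match_descriptors(query, train, max_distance=64, ratio=0.8):
    """Brute-force matching: nearest train descriptor per query, with two sanity checks.

    A match is kept only if it is close enough (max_distance bits) and clearly
    better than the second-best candidate (ratio test).
    Returns (query index, train index, distance) tuples.
    """
    matches = []
    for qi, q in enumerate(query):
        ranked = sorted((hamming(q, t), ti) for ti, t in enumerate(train))
        if not ranked:
            continue
        best, best_index = ranked[0]
        second = ranked[1][0] if len(ranked) > 1 else float("inf")
        if best <= max_distance and best < ratio * second:
            matches.append((qi, best_index, best))
    return matches

# Simulated data: 5 random 256-bit descriptors, and noisy copies with 10 flipped bits each.
rng = random.Random(0)
train = [rng.getrandbits(256) for _ in range(5)]
query = [d ^ sum(1 << bit for bit in rng.sample(range(256), 10)) for d in train]
print(match_descriptors(query, train))  # each query i should match train i at distance 10
```

In practice you would rely on a library instead: OpenCV’s `cv2.ORB_create()` detects keypoints and computes ORB descriptors, and `cv2.BFMatcher(cv2.NORM_HAMMING)` performs Hamming-distance matching.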

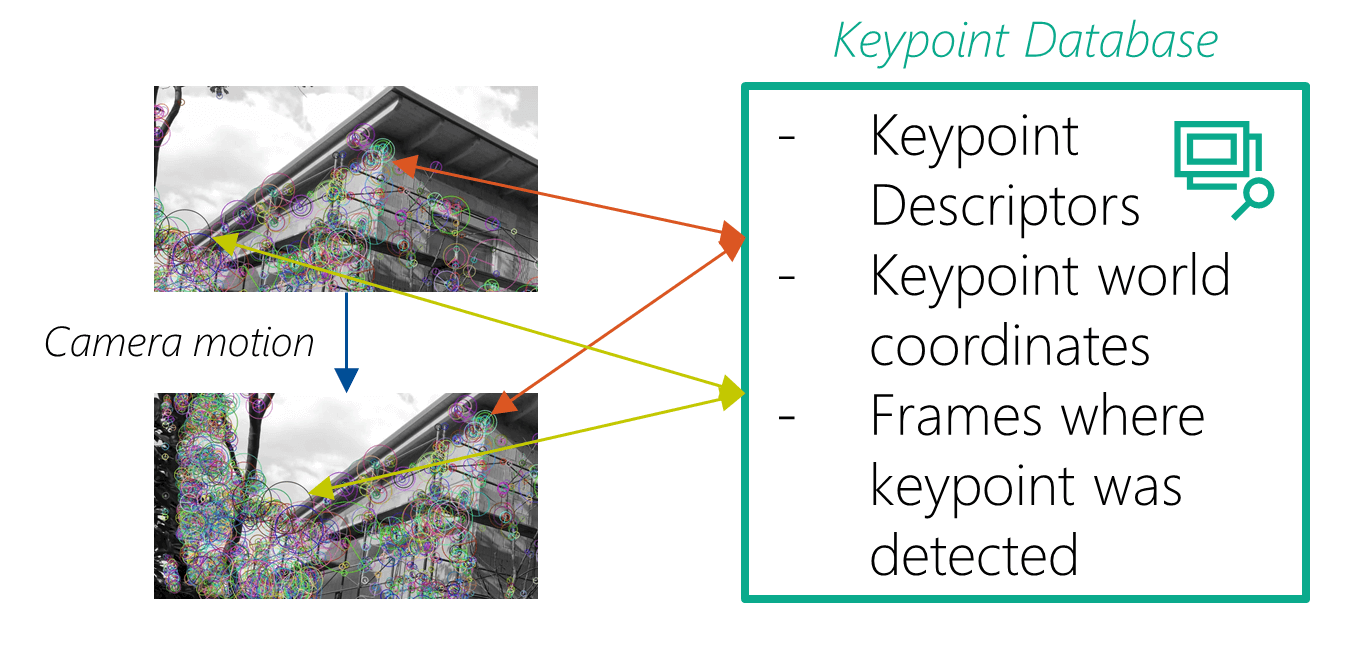

In general, ORB-SLAM analyzes each frame for keypoints. These are then stored in a map, together with references to keyframes where these keypoints have been detected. This association is important; it’s used to match future frames and to refine the previously stored data.
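A minimal sketch of that bookkeeping, with hypothetical class and method names: every observation is stored in both directions, so the map can answer which keyframes saw a given point, and which keyframes share many points with a given keyframe. The ORB-SLAM paper connects keyframes in its covisibility graph when they share at least 15 map points, which is used as the default threshold here.

```python
from collections import defaultdict

class SparseMap:
    """Two-way bookkeeping between keyframes and the map points they observe."""

    def __init__(self):
        self.points_in_keyframe = defaultdict(set)  # keyframe ID -> map point IDs
        self.keyframes_of_point = defaultdict(set)  # map point ID -> keyframe IDs

    def add_observation(self, keyframe_id, point_id):
        self.points_in_keyframe[keyframe_id].add(point_id)
        self.keyframes_of_point[point_id].add(keyframe_id)

    def covisible(self, keyframe_id, min_shared=15):
        """Other keyframes sharing at least `min_shared` map points, most shared first."""
        shared = defaultdict(int)
        for point_id in self.points_in_keyframe.get(keyframe_id, ()):
            for other in self.keyframes_of_point[point_id]:
                if other != keyframe_id:
                    shared[other] += 1
        ranked = sorted(shared.items(), key=lambda item: (-item[1], item[0]))
        return [(kf, n) for kf, n in ranked if n >= min_shared]

sparse_map = SparseMap()
for kf, points in {0: range(0, 30), 1: range(10, 40), 2: range(25, 55)}.items():
    for p in points:
        sparse_map.add_observation(kf, p)
print(sparse_map.covisible(0))                # [(1, 20)]
print(sparse_map.covisible(0, min_shared=1))  # [(1, 20), (2, 5)]
```

Storing both directions keeps both queries cheap: matching a new frame can start from the keyframes that share the most points with the current one.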

Converting Keypoints to 3D Landmarks

One of the most interesting parts of SLAM is how keypoints found in 2D camera frames actually get 3D coordinates (then called “map points” or “landmarks”). In ORB-SLAM, a big part of this happens in LocalMapping::CreateNewMapPoints() (line 205).

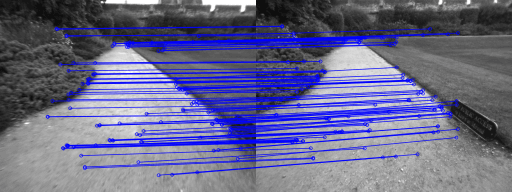

Image credits: ORB-SLAM video by Raúl Mur Artal, https://www.youtube.com/watch?v=_9VcvGybsDA

Whenever the algorithm gets a new frame from the camera, it first performs keypoint detection. These keypoints are then matched to the previous camera frame. The camera motion so far gives a good idea of where to find the same keypoints again in the new frame, which helps meet the real-time requirement. The matching results in an initial camera pose estimate.
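ORB-SLAM’s tracking uses a constant velocity motion model: the camera is assumed to keep moving the way it did between the last two frames. The sketch below transfers that idea, as a simplification, to the 2D pixel position of a single keypoint, and then limits descriptor comparisons to detections within a small radius around the prediction. The function names, the 15-pixel radius and all coordinates are made-up values.

```python
import math

def predict_position(previous, last):
    """Constant-velocity guess: the keypoint repeats its last pixel displacement."""
    return (2 * last[0] - previous[0], 2 * last[1] - previous[1])

def candidates_near(predicted, keypoints, radius=15.0):
    """Indices of detected keypoints within `radius` pixels of the prediction, nearest first."""
    near = sorted((math.dist(predicted, kp), i) for i, kp in enumerate(keypoints))
    return [i for distance, i in near if distance <= radius]

# A keypoint seen at (100, 50) and then at (110, 52) should appear near (120, 54) next.
guess = predict_position((100.0, 50.0), (110.0, 52.0))
detections = [(300.0, 40.0), (121.0, 55.0), (90.0, 50.0), (133.0, 54.0)]
print(guess, candidates_near(guess, detections))  # (120.0, 54.0) [1, 3]
```

Only two of the four detections need a descriptor comparison, and in a real frame with hundreds of keypoints this pruning saves a lot of work.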

Next, ORB-SLAM tries to improve the estimated camera pose. The algorithm projects its map into the new camera frame, to search for more keypoint correspondences. If it’s certain enough that the keypoints match, it uses the additional data to refine the camera pose.
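Projecting the map into the new frame is the pinhole camera model applied to every map point. A sketch under assumptions: the camera pose is given as a world-to-camera rotation and translation, lens distortion is ignored, and the intrinsics (focal lengths fx, fy and principal point cx, cy) are invented values; the function names are hypothetical. Each visible point yields a predicted pixel around which the matcher can search for a corresponding keypoint.

```python
def project(point_w, R_cw, t_cw, fx, fy, cx, cy):
    """Pinhole projection of a world point into the image.

    R_cw (3x3, row-major lists) and t_cw map world coordinates to camera
    coordinates. Returns (u, v, depth), or None if the point is behind the camera.
    """
    p_c = [sum(R_cw[i][k] * point_w[k] for k in range(3)) + t_cw[i] for i in range(3)]
    if p_c[2] <= 0:
        return None
    return fx * p_c[0] / p_c[2] + cx, fy * p_c[1] / p_c[2] + cy, p_c[2]

def predicted_keypoints(map_points, R_cw, t_cw, intrinsics, width, height):
    """Map point index -> predicted pixel, for points that land inside the image."""
    fx, fy, cx, cy = intrinsics
    predictions = {}
    for index, point in enumerate(map_points):
        projected = project(point, R_cw, t_cw, fx, fy, cx, cy)
        if projected and 0 <= projected[0] < width and 0 <= projected[1] < height:
            predictions[index] = projected[:2]
    return predictions

identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
points = [(0.0, 0.0, 2.0), (1.0, 0.0, 2.0), (0.0, 0.0, -1.0), (3.0, 0.0, 2.0)]
print(predicted_keypoints(points, identity, [0.0, 0.0, 0.0], (500.0, 500.0, 320.0, 240.0), 640, 480))
# {0: (320.0, 240.0), 1: (570.0, 240.0)}
```

The third point lies behind the camera and the fourth projects outside the 640×480 image, so neither is searched for in this frame.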

New map points are created by triangulating matching keypoints from connected frames. The triangulation is based on the 2D position of the keypoint in the frames, as well as the translation and rotation between the frames as a whole. Initially, the match is calculated between two frames – but it can later be extended to additional frames.
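Triangulation intersects the viewing rays of the same keypoint seen from two camera poses. ORB-SLAM itself uses a linear (SVD-based) triangulation; the sketch below uses the simpler midpoint method instead: back-project each pixel to a ray, then take the midpoint of the shortest segment between the two rays. The camera positions, intrinsics and function names are assumptions for illustration.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def pixel_to_ray(u, v, R_wc, fx, fy, cx, cy):
    """Viewing-ray direction in world coordinates for pixel (u, v).

    R_wc (3x3, row-major lists) rotates camera-frame vectors into the world frame.
    """
    d_cam = ((u - cx) / fx, (v - cy) / fy, 1.0)
    return tuple(dot(R_wc[i], d_cam) for i in range(3))

def triangulate_midpoint(c1, d1, c2, d2, eps=1e-9):
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2.

    Returns None if the rays are (nearly) parallel, i.e. there is too little
    parallax, or if the closest points lie behind either camera.
    """
    w = [p - q for p, q in zip(c1, c2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if denom < eps:
        return None
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    if s <= 0 or t <= 0:
        return None
    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    return tuple((x + y) / 2 for x, y in zip(p1, p2))

# Two frames 1 m apart along x, both looking down +z (made-up intrinsics).
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
ray1 = pixel_to_ray(445.0, 240.0, identity, 500.0, 500.0, 320.0, 240.0)
ray2 = pixel_to_ray(195.0, 240.0, identity, 500.0, 500.0, 320.0, 240.0)
print(triangulate_midpoint((0.0, 0.0, 0.0), ray1, (1.0, 0.0, 0.0), ray2))  # (0.5, 0.0, 2.0)
```

The parallax check reflects a real limitation: with too little translation between the frames, the rays are almost parallel and the depth becomes unreliable.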

Loop Detection and Loop Closing

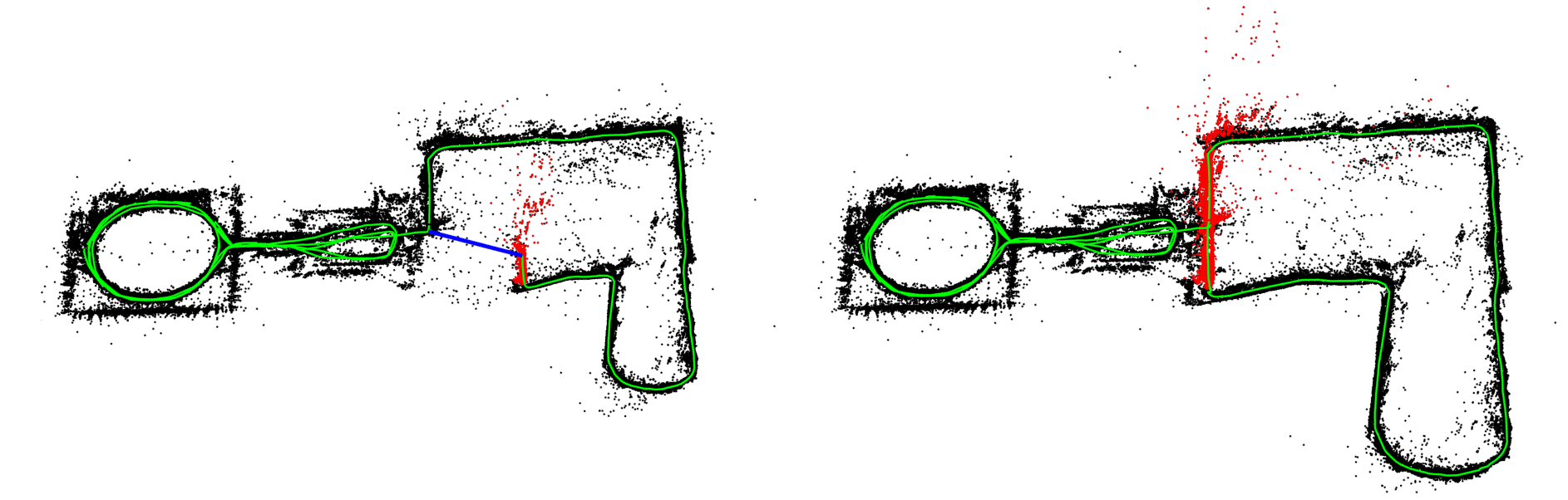

Image credits: Mur-Artal, R., Montiel, J. M. M., & Tardos, J. D. (2015). ORB-SLAM: a versatile and accurate monocular SLAM system. IEEE Transactions on Robotics, 31(5), 1147-1163.

Another key step in a SLAM algorithm is loop detection and loop closing: ORB-SLAM checks if keypoints in a frame match with previously detected keypoints from a different location. If the similarity exceeds a threshold, the algorithm knows that the user returned to a known place; but inaccuracies on the way might have introduced an offset.
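For place recognition, ORB-SLAM uses a bag-of-words representation (via the DBoW2 library): each ORB descriptor is quantized to a “visual word”, and a frame becomes a histogram of words. Below is a minimal sketch of the comparison step. It assumes word IDs are already assigned, uses plain word counts instead of the tf-idf weights DBoW2 applies, and uses a fixed, invented threshold (ORB-SLAM derives its threshold from the scores of the frame’s covisible keyframes). The function names are hypothetical.

```python
from collections import Counter

def l1_score(words_a, words_b):
    """Bag-of-words similarity in [0, 1]; 1 means identical word distributions.

    Uses the L1 score 1 - 0.5 * || a/|a| - b/|b| ||_1 on normalized word histograms.
    """
    a, b = Counter(words_a), Counter(words_b)
    total_a, total_b = sum(a.values()), sum(b.values())
    if total_a == 0 or total_b == 0:
        return 0.0
    diff = sum(abs(a[w] / total_a - b[w] / total_b) for w in set(a) | set(b))
    return 1.0 - 0.5 * diff

def loop_candidates(current_words, keyframe_words, threshold=0.6, exclude=()):
    """IDs of earlier keyframes whose similarity exceeds `threshold`, best first.

    `exclude` should hold recent or neighbouring keyframes, which trivially look similar.
    """
    scores = {kf: l1_score(current_words, words)
              for kf, words in keyframe_words.items() if kf not in exclude}
    return sorted((kf for kf, s in scores.items() if s > threshold), key=lambda kf: -scores[kf])

# Visual word IDs per keyframe (invented data): keyframe 0 was taken at the current place,
# keyframe 3 is the immediately preceding frame.
keyframe_words = {0: [1, 2, 3, 4, 5, 5], 1: [7, 8, 9, 9], 2: [1, 2, 9, 9], 3: [1, 2, 3, 4, 5, 5]}
current = [1, 2, 3, 4, 5, 5]
print(loop_candidates(current, keyframe_words, exclude={3}))  # [0]
```

A candidate found this way is only a hypothesis; the geometry still has to be verified before the loop is closed.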

By propagating the coordinate correction across the whole graph from the current location to the previous place, the map is updated with the new knowledge.
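ORB-SLAM performs this propagation by optimizing a pose graph (the paper calls it the essential graph). The underlying idea can be approximated much more simply: keep the first pose fixed, move the last pose onto the recognized place, and shift every pose in between by a share of the correction proportional to the distance travelled so far. A minimal 2D sketch of that linear approximation; it is not ORB-SLAM’s method, and the function name and path values are invented.

```python
import math

def distribute_loop_correction(path, corrected_end):
    """Spread a loop-closure correction along a 2D trajectory.

    path: list of (x, y) poses, oldest first; corrected_end: where the last pose
    should be according to the loop closure. The first pose is unchanged.
    """
    travelled = [0.0]
    for p, q in zip(path, path[1:]):
        travelled.append(travelled[-1] + math.dist(p, q))
    total = travelled[-1]
    if total == 0.0:
        return list(path)
    ex = corrected_end[0] - path[-1][0]
    ey = corrected_end[1] - path[-1][1]
    return [(x + ex * s / total, y + ey * s / total) for (x, y), s in zip(path, travelled)]

# A drifted loop that should end where it started (invented values).
drifted = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0), (0.1, 0.1)]
for pose in distribute_loop_correction(drifted, corrected_end=(0.0, 0.0)):
    print(tuple(round(v, 3) for v in pose))
```

Unlike a real pose graph optimization, this linear blend ignores rotations and measurement uncertainties, but it shows why a loop closure changes the whole trajectory rather than only the last pose.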

Image credits: Mur-Artal, R., Montiel, J. M. M., & Tardos, J. D. (2015). ORB-SLAM: a versatile and accurate monocular SLAM system. IEEE Transactions on Robotics, 31(5), 1147-1163.

SLAM & Google + Microsoft + Apple?

Quick reality check: what’s used in today’s mobile AR? All three platforms fuse data from inertial sensors with the camera feed.

- Google: In the documentation, Google describes that ARCore uses a process called concurrent odometry and mapping, which is essentially just another name for the broader term SLAM. The name also indicates that they’re integrating inertial sensors for odometry. The general architecture is described in a patent. The Wikipedia article on SLAM mentions that Google uses Bundle Adjustment / Maximum a Posteriori (MAP) estimation similar to the methods described before.

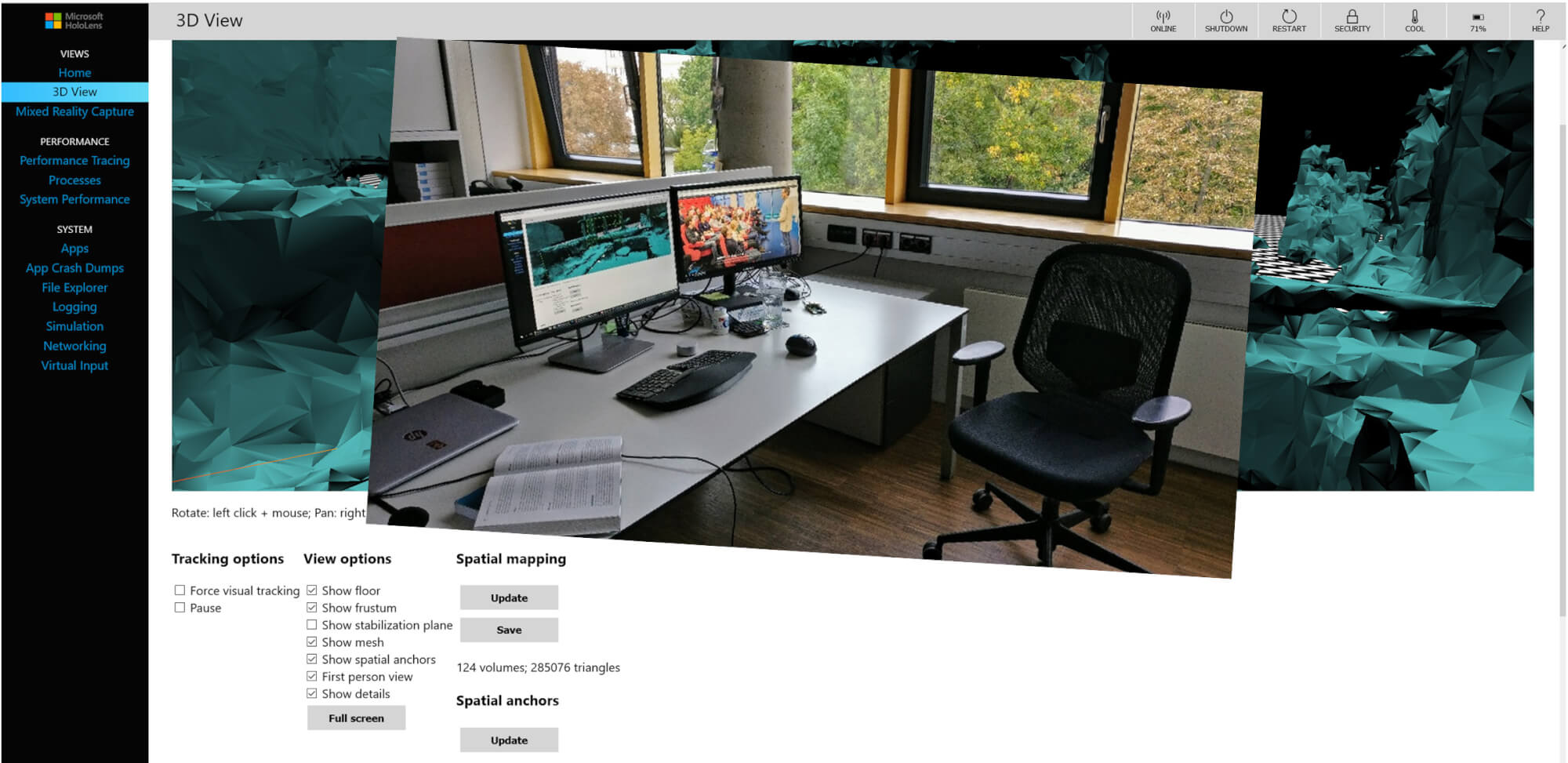

- Microsoft: much of what’s happening in HoloLens and Windows Mixed Reality is based on prior research done for Kinect. As a result, Microsoft’s SLAM-related patents were published many years ago. Additionally, the new Research Mode of the HoloLens gives access to the raw data of the sensors that the SLAM algorithms use. Microsoft provides some examples to get started on GitHub – including OpenCV integration.

- Apple: several years ago, Apple acquired Metaio and FlyBy, both of which developed SLAM algorithms. Apple uses Visual Inertial Odometry / SLAM, so they also combine the camera with other phone sensors to improve precision. A session video from WWDC 2018 provides a great overview of the technology.

Image credits: Apple, https://developer.apple.com/videos/play/wwdc2018/610/

The Future of Phone-Based Augmented Reality

SLAM algorithms are valuable for many research topics, including autonomous car navigation. Therefore, improving their quality and performance is a very active research area.

In “A Comparative Analysis of Tightly-coupled Monocular, Binocular, and Stereo VINS” (2017), Paul et al. compare algorithms that combine data from inertial measurement units (IMUs) with optical sensors, as today’s big commercial AR frameworks also do. Among others, they compare their new algorithm to the state-of-the-art ORB-SLAM2 and OKVIS algorithms and report further improvements in reliability and speed.

In addition, attempts to bring semantic meaning into SLAM algorithms also show promise, as demonstrated just a few weeks ago by “Semantic Visual Localization” (2018) from Johannes L. Schönberger et al.

Of course, AR systems generally try to understand more and more about the environment. While ARKit and ARCore started with tracking simple planes, HoloLens already infers more knowledge through Spatial Understanding, and Apple introduced 3D object tracking with ARKit 2. So we can expect much better integration between virtual objects and real environments.

With these promising results and the latest advances in research, we can expect Augmented Reality on mobile phones to improve a lot further in the coming months and years!