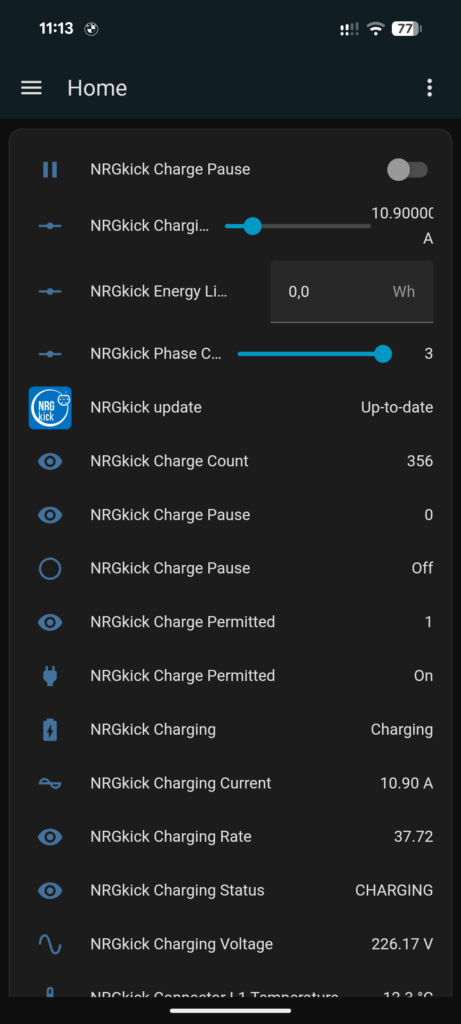

I own an NRGkick mobile wallbox from the Austrian company DiniTech, so I decided to integrate it into a new project. The wallbox has a local API (so it can be controlled without an internet connection) and exposes over 80 sensors / data points. These include basics like charging current and total energy, and even the individual temperatures of the charging phase connectors. This is a perfect playground for smart home integration.

My main goal was not just to visualize this data in Home Assistant. I wanted to control the rest of the home based on the wallbox’s charging status. This is useful for a home with a photovoltaic (PV) system that is not big enough to support full car charging and other large appliances (like heating) at the same time. Controlling the wallbox itself (setting current, pausing) was also an important benefit, especially if you don’t have the PV-led charging add-on for the wallbox.
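To make the idea concrete, here is a minimal sketch in plain Python of the kind of rule I mean: a large appliance such as the heating should only run when PV production covers the household base load, the current wallbox charging power, and the appliance itself. The names, the fixed safety margin, and the rule itself are hypothetical illustrations, not part of the integration. In Home Assistant, this logic would typically live in an automation that reads the wallbox's charging power sensor.

```python
from dataclasses import dataclass


@dataclass
class PowerSnapshot:
    """Instantaneous power readings in watts (hypothetical inputs)."""

    pv_w: float          # current PV production
    base_load_w: float   # household load excluding wallbox and heating
    charging_w: float    # wallbox charging power; 0 when not charging


def heating_allowed(snap: PowerSnapshot, heating_w: float, margin_w: float = 200.0) -> bool:
    """Hypothetical rule: allow heating only if the PV surplus left after
    base load and car charging covers the heater plus a safety margin."""
    surplus_w = snap.pv_w - snap.base_load_w - snap.charging_w
    return surplus_w >= heating_w + margin_w


# Car charging at 4 kW leaves too little surplus for a 2 kW heater ...
print(heating_allowed(PowerSnapshot(6000, 500, 4000), heating_w=2000))  # False
# ... but once charging stops, the heater may run.
print(heating_allowed(PowerSnapshot(6000, 500, 0), heating_w=2000))     # True
```

A real setup would also want some hysteresis, so the heating does not toggle on and off every time a cloud passes.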

This project was also an experiment to test the current state of AI coding agents. I wanted to see if they could build a full, high-quality Home Assistant integration from scratch. I used GitHub Copilot inside Visual Studio Code and tested several different models.

You can download and try the full integration from GitHub. It is also available for direct installation into your Home Assistant setup through the Home Assistant Community Store (HACS): search for “NRGkick”, and the actual configuration then works through auto-discovery on your local network. If you have feedback or comments, please also visit the discussion on the Home Assistant Community forum!

The First Result

My first test was with Claude Sonnet 4.5 (released on 29 September 2025). I gave it a single prompt that referenced the local API specification (formatted as an HTML page) and described my goal. The result was surprisingly good: that first prompt produced a working prototype of the Home Assistant integration. It structured the code, handled the API connection, and created basic entities. I did not expect this to work so quickly!

But a working prototype is not a finished product. A feature-complete, well-documented integration that meets Home Assistant’s quality standards is much more work.

The Reality: From 1 to 316 Prompts

That first prompt was only the start. Getting the integration to its final, robust state took 316 agent prompts (according to the GitHub Copilot usage statistics). Based on the GitHub Copilot premium requests analytics, this would have cost a little less than €10 had it not been included in my Copilot plan.

Here is the breakdown of the prompts:

- Claude Sonnet 4.5: 253 prompts.

- Gemini 2.5 Pro: 24 prompts.

- GPT 5 Codex: 12 prompts.

- GPT-5 (General Chat): The remaining 27 prompts were for general conversations about the code, not in a direct agent mode.

This is the key insight: developing with AI coding agents is not a “fire-and-forget” process. Each prompt meant the agent worked for several minutes – writing code, running tests, fixing errors, and updating documentation.

You cannot just sit back. You must read the code it produces and the agent’s reasoning, actively guide the agent, find the bugs it misses, and correct its direction. The workflow shifts from writing code to directing and reviewing code.

My Experience with the AI Coding Agents

I used this journey to test the different models for the job. Here is my personal breakdown:

1. Claude Sonnet 4.5 (253 Prompts)

This was my favorite model for this task.

- Pro: The interaction is very natural. It is proactive, explains what it is doing, and gives good reasoning. It listens to corrections and uses them well. It was reasonably good at keeping the “big picture” in mind for the project’s logic.

- Con: It suffered the most from using outdated dependencies or not sticking to the latest coding standards. More on that later.

2. Google Gemini 2.5 Pro (24 Prompts)

- Pro: It was fast and sometimes had more “creative” ideas or solutions.

- Con: It was often unreliable. It made simple syntax errors, but also more complex ones, such as failing calls to a function it had just changed, or broken unit tests. It was not good at keeping the big picture in mind, even when this was spelled out in the Copilot instructions.

3. GPT 5 Codex (in VS Code) (12 Prompts)

This model (in preview) felt like a “thinking senior model.” I did not use it enough to judge its handling of dependencies or the “big picture.”

- Pro: It took a long time to think, but its answers were often good.

- Con: You have to force it to do work as an agent. Its default behavior is to analyze the problem and give a summary; you must then explicitly write “Okay, now please do it.” It is not as proactive as Claude, and it does not keep you as up to date on what it is doing and why.

Development Workflow: AI Agents vs. Human

A big difference I found was the development process. When I code manually, I implement a small feature, test it, and then go to the next. I build iteratively.

AI coding agents go “all-in.” Based on the specifications, they immediately complete large portions of the code. This is fast, but it means you must take the time to manually test every single feature (instead of falling into the trap of believing that it looks fine and therefore works).

I found two examples of this:

- Wrong API Request: The agent implemented all API interactions. Reading all 80+ sensors worked fine, and the controls for changing wallbox settings were also implemented. However, the agent used the wrong name for the API request parameter, so none of the controls actually worked. The unit tests the agent wrote all passed; this issue would never have been found without manual testing against the real wallbox.

- Undefined Error Handling: The API specification did not detail what the API returns in case of an error (e.g., the wallbox cannot charge because the car’s battery is full). The agents simply assumed a return value without mentioning this uncertainty. Again, the model’s self-written unit tests passed, but the underlying assumption was wrong. I had to call the low-level API manually to analyze the real structure of both success and error responses, and then use that to guide the AI agent.
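The wrong-parameter example shows why the agent's own tests were not enough. A unit test that mocks the HTTP layer only checks the code against the agent's own assumption, because the mock happily accepts any parameter name. The sketch below illustrates this with Python's `unittest.mock`. The endpoint `/control` and both parameter names are hypothetical placeholders, not the real NRGkick API. Pinning the request to the name documented in the API specification can catch this particular mistake, but it does not replace testing against the real wallbox.

```python
from unittest.mock import MagicMock

# Hypothetical names, for illustration only (not the real NRGkick API).
GUESSED_PARAM = "current"          # the name the agent assumed
DOCUMENTED_PARAM = "current_set"   # the name a (hypothetical) spec documents


def set_charging_current(session, amps: float) -> None:
    """Send a control request; session.get stands in for the HTTP client."""
    session.get("/control", params={GUESSED_PARAM: amps})


def agent_style_check() -> bool:
    """Only verifies that a request was sent, so it passes for any parameter name."""
    session = MagicMock()
    set_charging_current(session, 10.0)
    return session.get.call_count == 1


def spec_check() -> bool:
    """Verifies the request against the parameter name from the spec."""
    session = MagicMock()
    set_charging_current(session, 10.0)
    _args, kwargs = session.get.call_args
    return DOCUMENTED_PARAM in kwargs["params"]


print(agent_style_check())  # True: the mock accepts any parameter name
print(spec_check())         # False: the wrong name is exposed
```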
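For the undocumented error case, a defensive approach is to refuse to guess: parse the response, accept only the shape you have actually observed, and raise a clear error for everything else. The sketch below assumes, purely for illustration, that both success and error bodies are JSON objects and that an error body contains an `error` key; `WallboxApiError`, `parse_control_response`, and the `current_set` field are hypothetical names. The real structure has to come from calling the device, as described above.

```python
import json
from typing import Any


class WallboxApiError(Exception):
    """Raised when a (hypothetical) control response signals a problem."""


def parse_control_response(raw: str) -> dict[str, Any]:
    """Parse a control response without guessing an undocumented error format.

    Assumption for illustration: responses are JSON objects and error
    responses carry an "error" key. Replace these checks with the shapes
    actually observed from the device.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as err:
        raise WallboxApiError(f"non-JSON response: {raw!r}") from err
    if not isinstance(data, dict):
        raise WallboxApiError(f"unexpected response type: {type(data).__name__}")
    if "error" in data:
        raise WallboxApiError(str(data["error"]))
    return data


print(parse_control_response('{"current_set": 10}'))  # {'current_set': 10}
try:
    parse_control_response('{"error": "vehicle full"}')
except WallboxApiError as exc:
    print("refused:", exc)  # refused: vehicle full
```

Once the real success and error bodies have been captured from the wallbox, they make good test fixtures, because they replace the agent's assumptions with observed data.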

Code Quality and Tooling

This project uses many automatic tools to ensure code quality, including pre-commit checks. This is even more important when an AI writes the code. In addition to the validation checks that run locally before committing and pushing, GitHub Actions has executed 192 workflow runs so far, adding up to close to 700 minutes of cloud-based code testing on Linux. For open source projects, this is free (thanks, GitHub!); otherwise, it would have amounted to around $6.

My checks (for this Python project) include:

- Code formatting with black

- Linting with flake8 and pylint

- Import sorting through isort

- Type checking with mypy

- Cleanup of other files with prettier

- Home Assistant-specific validations:
  - Hassfest (Home Assistant validation tool)
  - HACS Action (checking for compliance with Home Assistant Community Store guidelines)

Even when the code structure and settings are described in the Copilot instructions, the generated code often does not comply. This resulted in semi-automated correction rounds after each agent session.

This is a key difference: as a human, I learn the code structure and development policies over time and stick to them. The AI agent sees the project “from scratch” each time and repeats the same mistakes again and again.

A real danger is that agents will try to add rules to ignore warnings from code quality tools. Several times, the agent classified a warning as “not relevant” and just added an ignore rule. I had to remove several and convince the model to fix the issue instead of ignoring it.
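One way to catch these ignore rules before they slip in is a small check that flags inline suppression comments in the Python files. The sketch below looks for the standard inline markers of the tools listed above: `# noqa` and `# flake8: noqa` for flake8, `# type: ignore` and `# mypy: ignore-errors` for mypy, `# pylint: disable`, `# isort: skip`/`off`, and `# fmt: off`/`skip` for black. It is a hypothetical helper, not part of the project's tooling, and it does not see ignores added in configuration files such as `setup.cfg` or `pyproject.toml`.

```python
import re
from pathlib import Path

# Inline suppression markers for the linters and formatters used here.
SUPPRESSION_PATTERNS = {
    "flake8": re.compile(r"#\s*(flake8:\s*)?noqa\b"),
    "mypy": re.compile(r"#\s*(type:\s*ignore\b|mypy:\s*ignore-errors)"),
    "pylint": re.compile(r"#\s*pylint:\s*(disable|skip-file)"),
    "isort": re.compile(r"#\s*isort:\s*(skip|off)"),
    "black": re.compile(r"#\s*fmt:\s*(off|skip)\b"),
}


def find_suppressions(source: str) -> list[tuple[int, str]]:
    """Return (line number, tool) for each line containing a suppression marker."""
    hits: list[tuple[int, str]] = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for tool, pattern in SUPPRESSION_PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, tool))
    return hits


def check_files(paths: list[str]) -> int:
    """Print every suppression found in the given files and return the count."""
    total = 0
    for path in paths:
        text = Path(path).read_text(encoding="utf-8")
        for lineno, tool in find_suppressions(text):
            print(f"{path}:{lineno}: {tool} suppression")
            total += 1
    return total
```

Wired into the pre-commit checks (for example as a local hook that fails when `check_files` returns a non-zero count), this turns every new suppression into a deliberate decision rather than a silent one.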

This also applies to dependencies. Both Claude and Gemini frequently used old software patterns or outdated dependencies. This is a general problem with the models’ knowledge, which doesn’t have an easy fix.

Overall Learning

This was a big learning experience, not only about AI agents, but also about the code.

The AI coding agents introduced many interesting concepts into the code that I did not know before. Getting to a working prototype was much quicker than it would have been had I coded it myself.

The learning process is just different. Instead of learning step by step from a blank slate, you go “all-in” with a complete project. Then you dive into the details as you dissect what the agent coded, review what was good, and find what still needs improvement.

Final Thoughts About AI Coding Agents

So, what is the conclusion? AI coding agents are powerful, but they are not magic. They are tools. They do not replace the developer (yet?).

Instead, the developer’s job changes. You become more of an architect and a careful reviewer. This requires more experience, which makes it harder for junior developers to learn and grow. For example, you need strategies for figuring out the underlying issue when the AI agent gets stuck.

For this project, Claude Sonnet 4.5 was the clear winner. Its collaborative style was the most effective, even with its outdated code problem.

The integration is now fully functional, and I can manage my EV charging from my Home Assistant dashboard, all thanks to a (very) long chat with an AI. If you have the same NRGkick mobile wallbox, you’re welcome to install the integration: the full code and release versions for easy installation are available on GitHub.
